文档

- 1: 主页

- 2: 学习shell

- 3: 编写shell脚本

- 3.1: 编写我们的第一个脚本并使其正常工作

- 3.2: 编辑我们已有的脚本

- 3.3: here 脚本

- 3.4: 变量

- 3.5: 命令替换和常量

- 3.6: shell函数

- 3.7: 一些真正的工作

- 3.8: 流程控制 - 第一部分

- 3.9: 远离麻烦

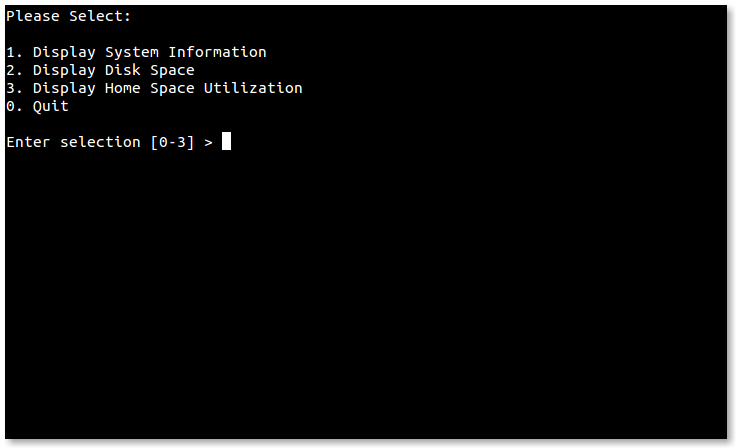

- 3.10: 键盘输入和算术

- 3.11: 流程控制 - 第二部分

- 3.12: 位置参数

- 3.13: 流程控制 - 第三部分

- 3.14: 错误、信号和陷阱(噢,我的天!)- 第一部分

- 3.15: 错误、信号和陷阱(哦,我的天!)- 第二部分

- 4: 参考

- 5: Adventures

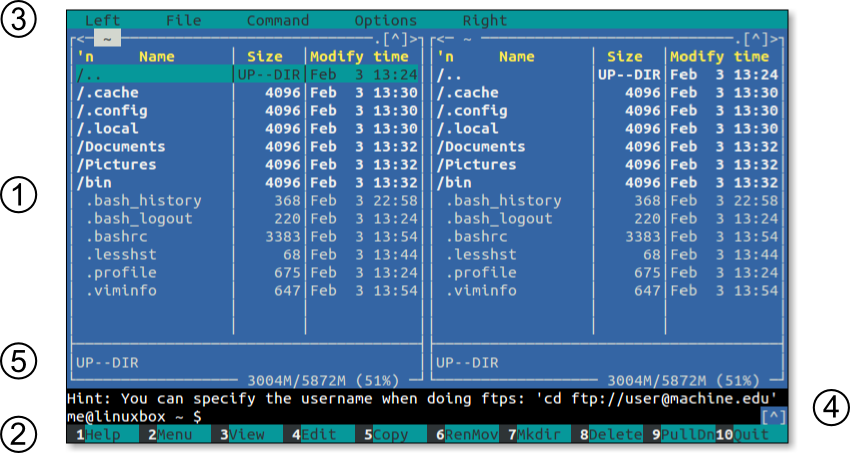

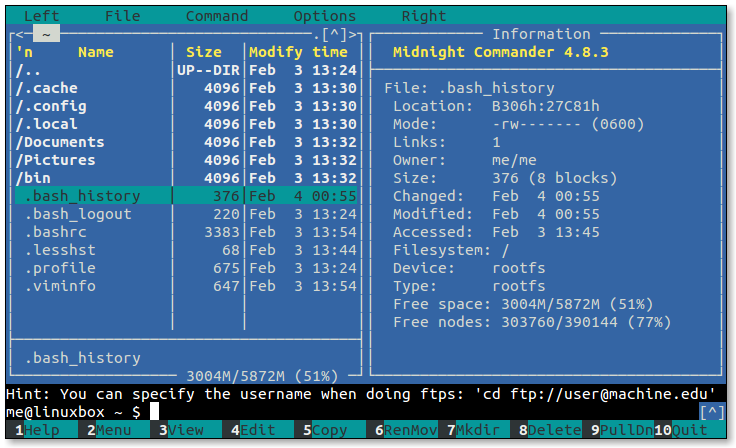

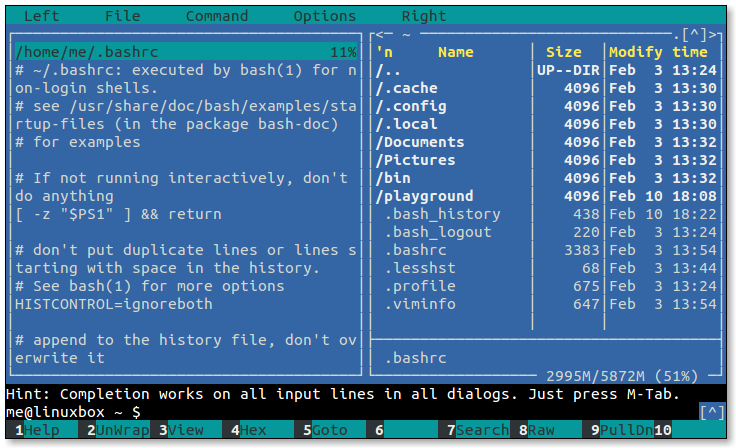

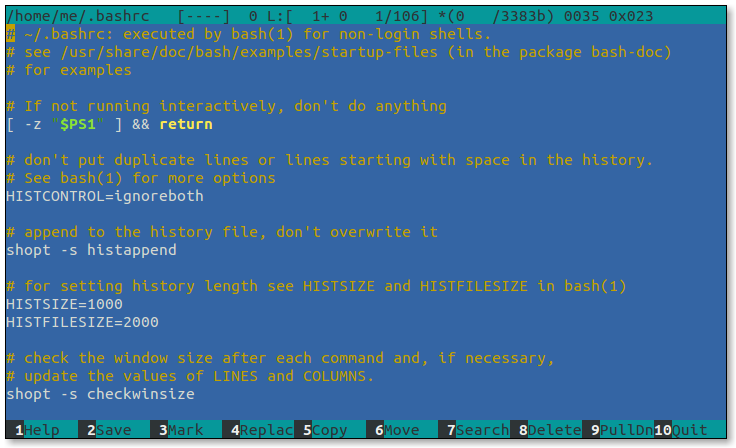

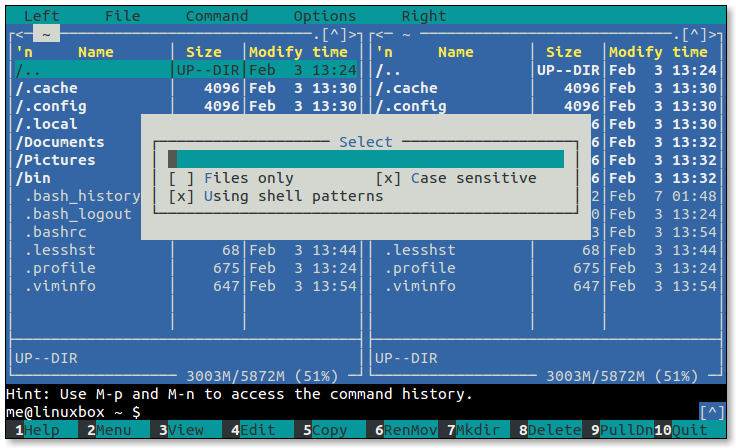

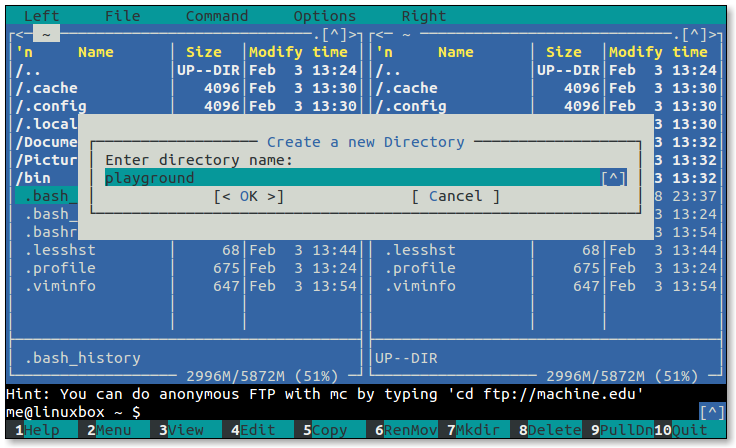

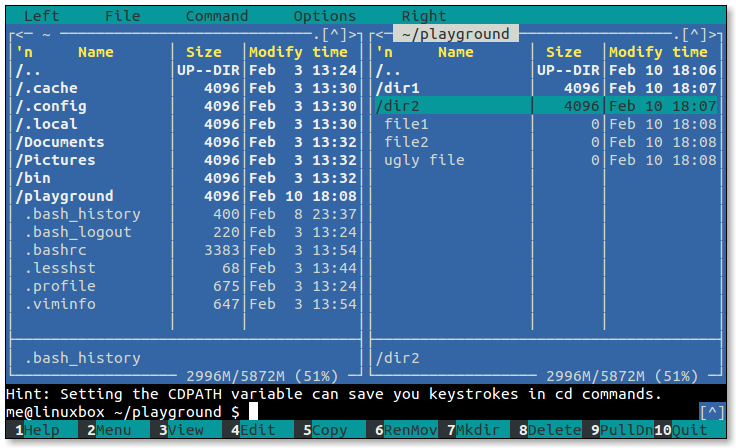

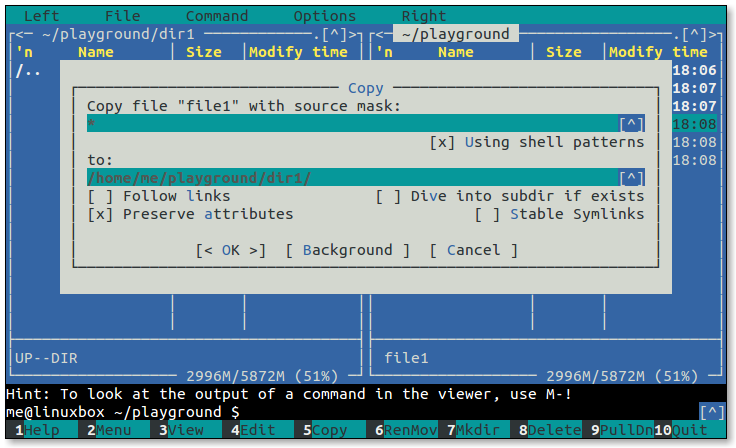

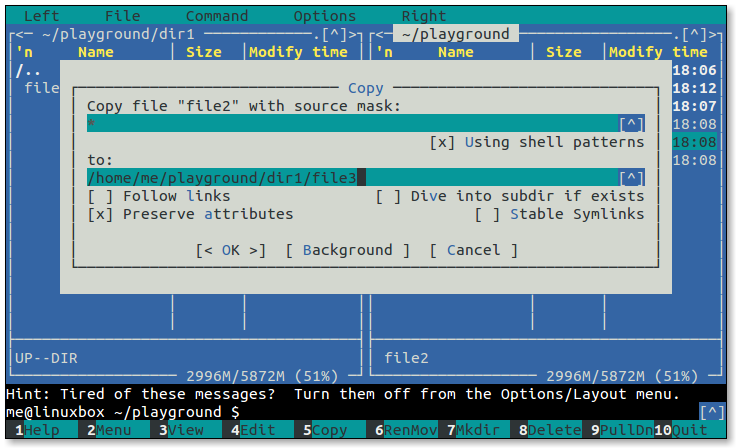

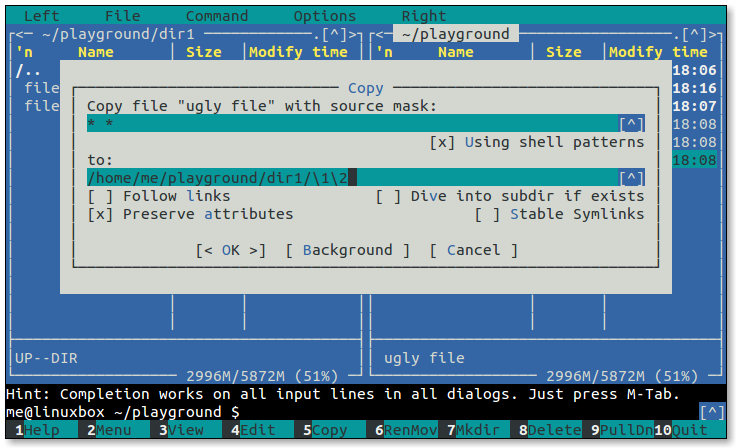

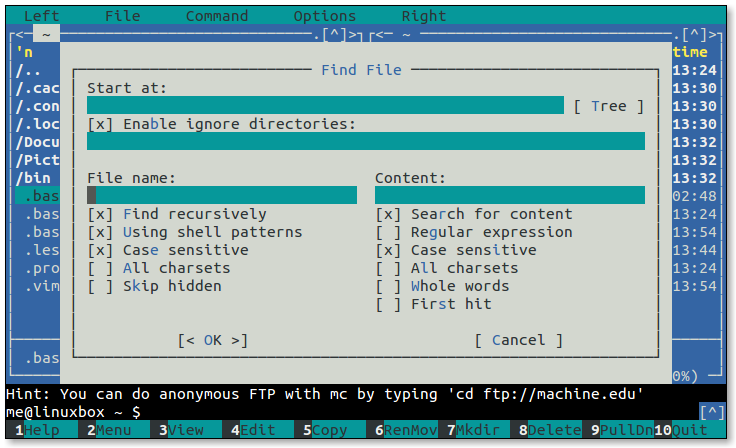

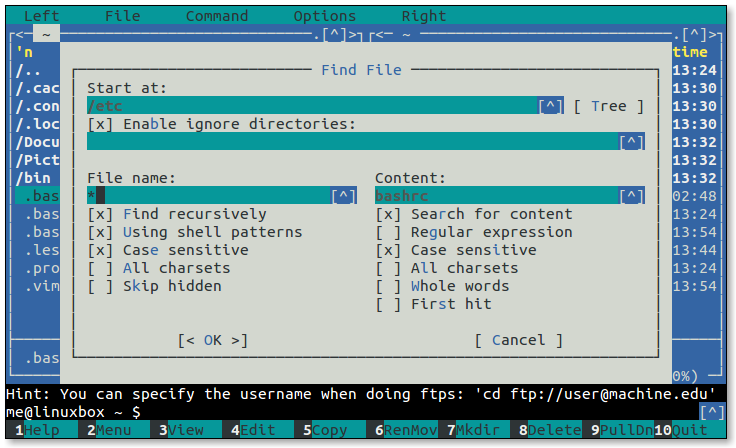

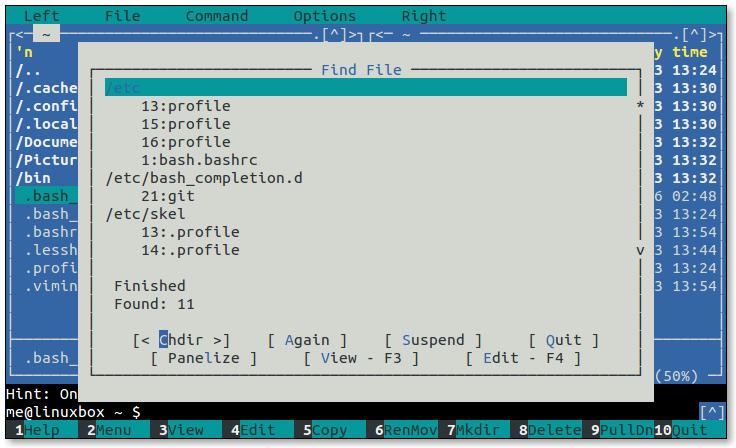

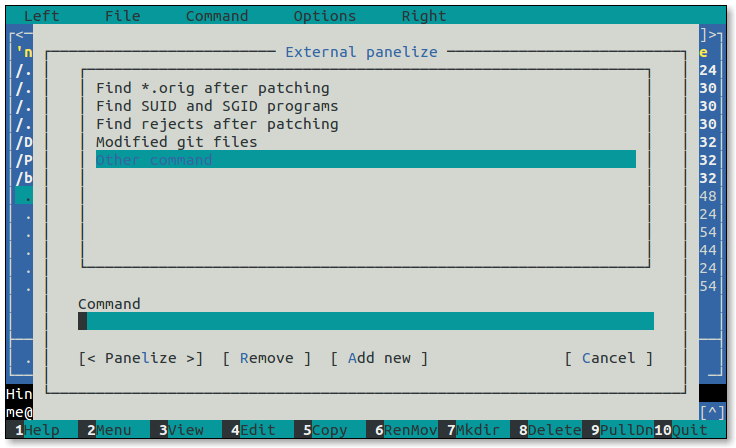

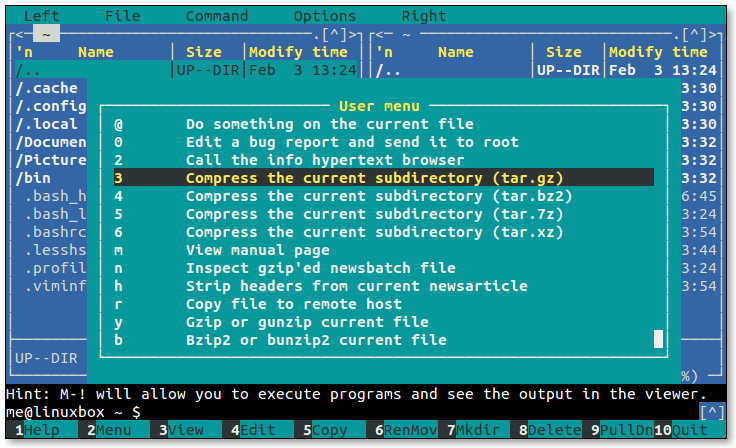

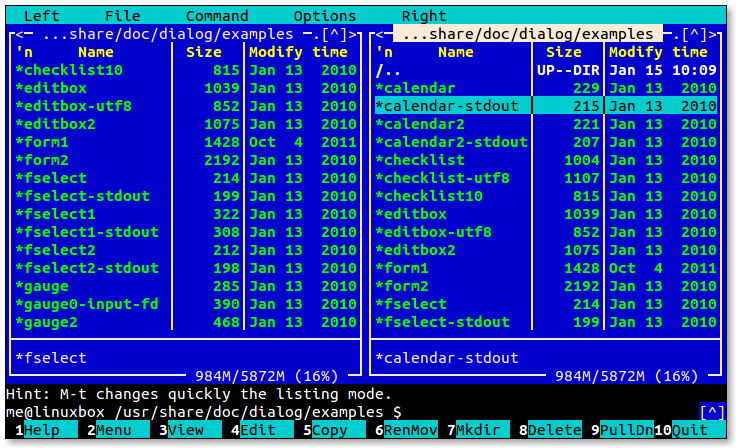

- 5.1: Midnight Commander

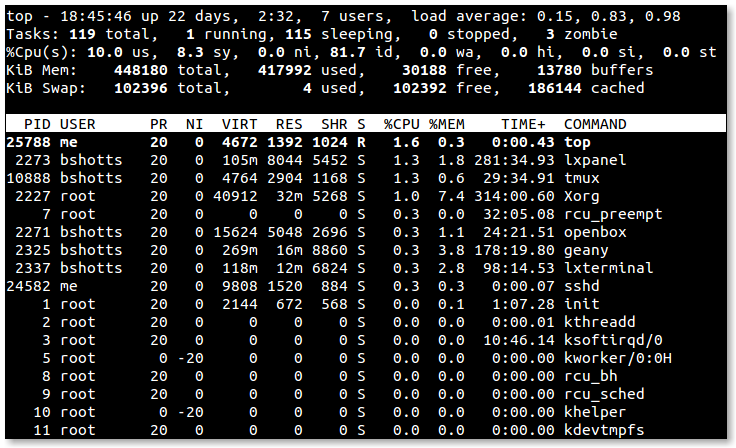

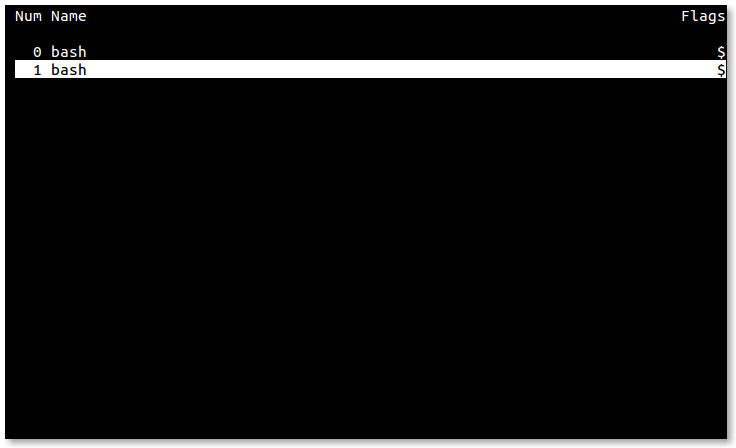

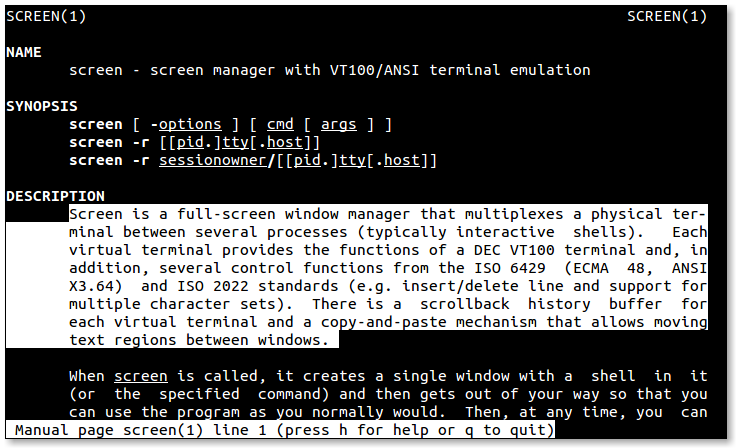

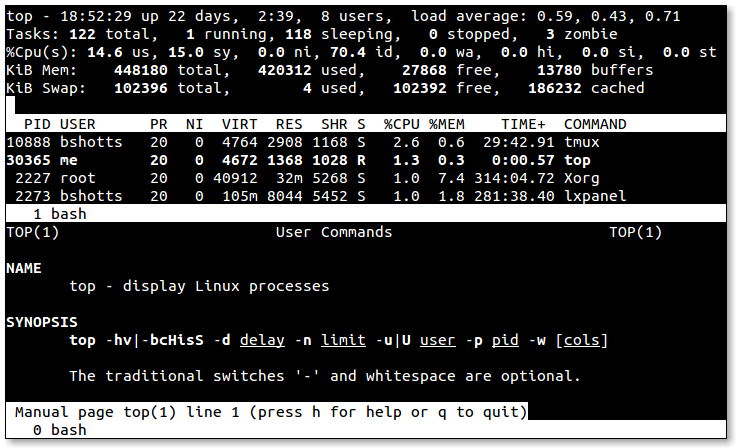

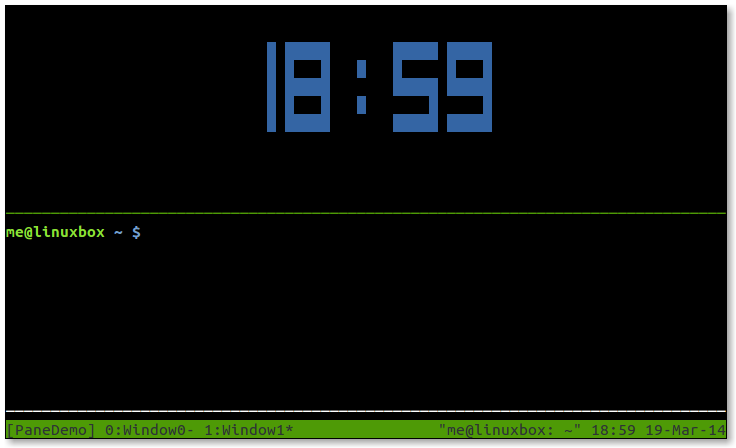

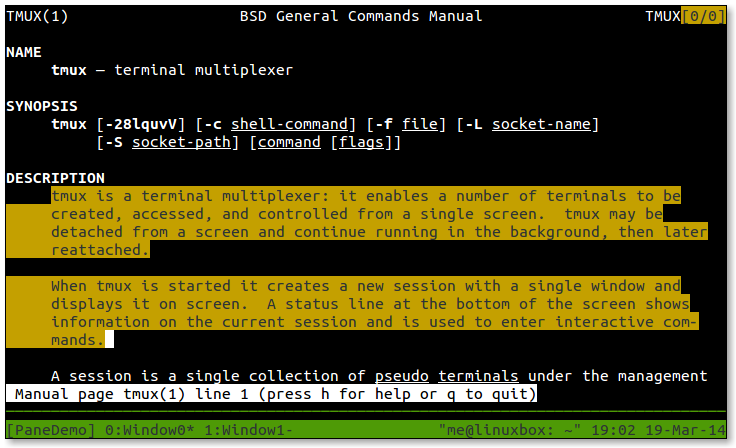

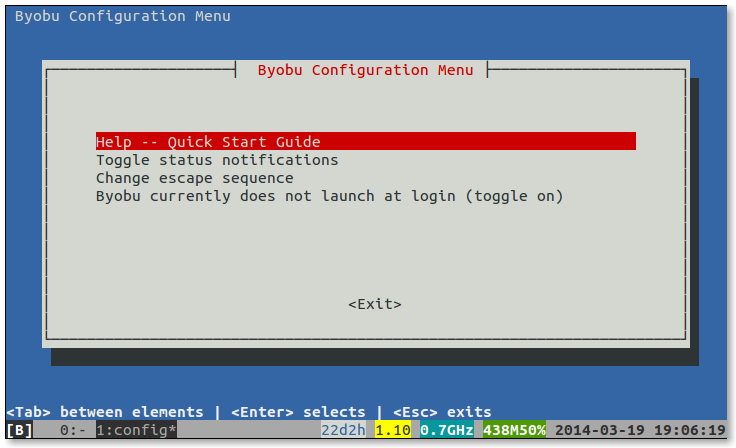

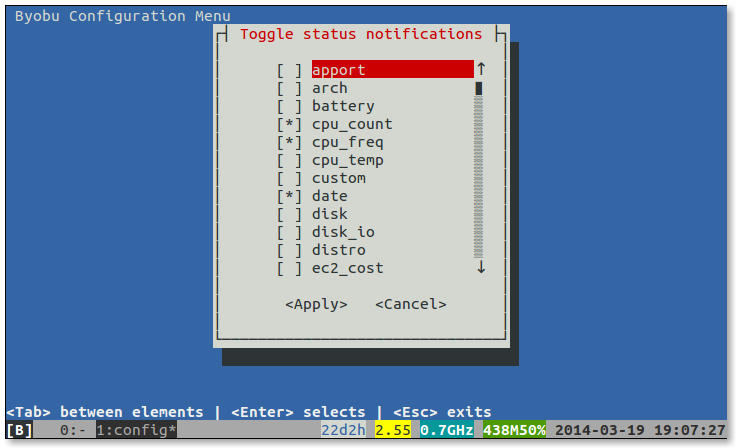

- 5.2: Terminal Multiplexers

- 5.3: Less Typing

- 5.4: More Redirection

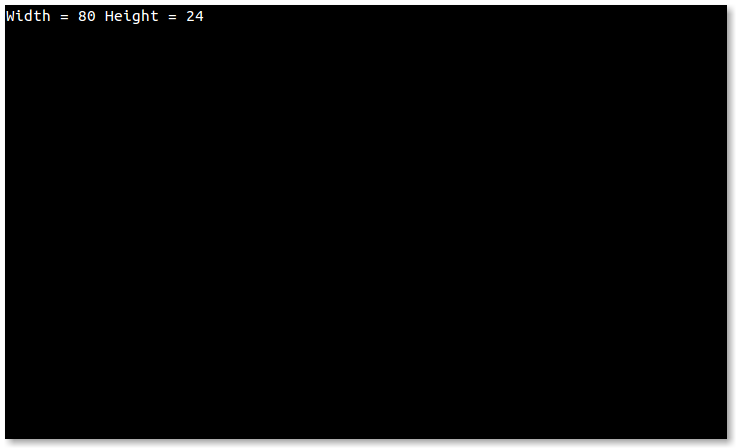

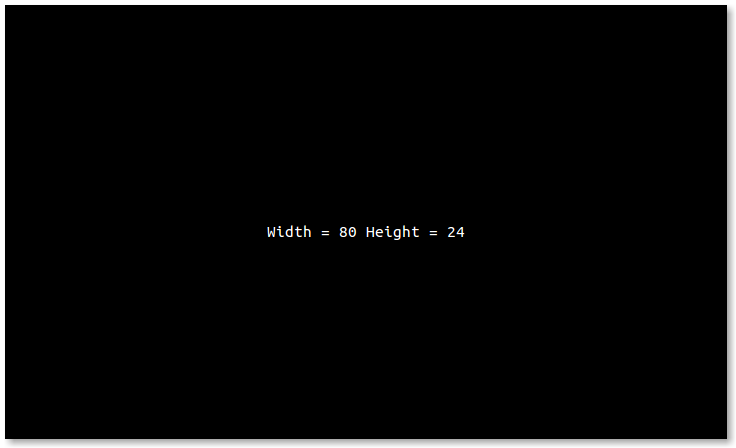

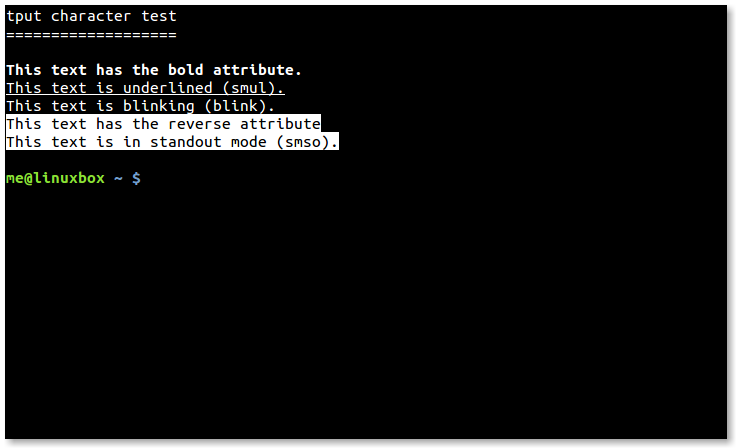

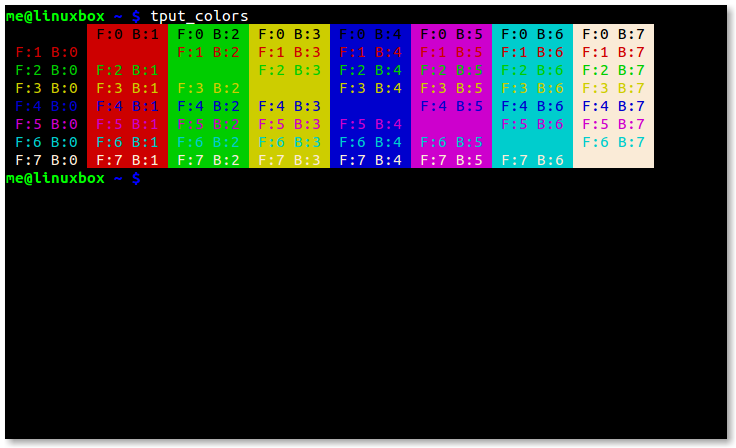

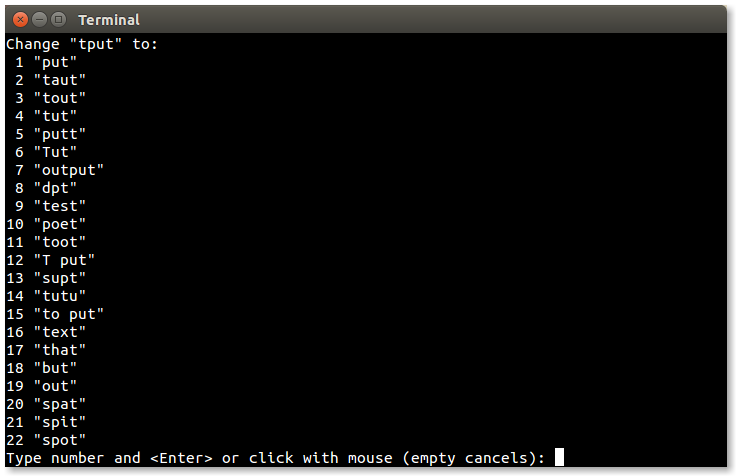

- 5.5: tput

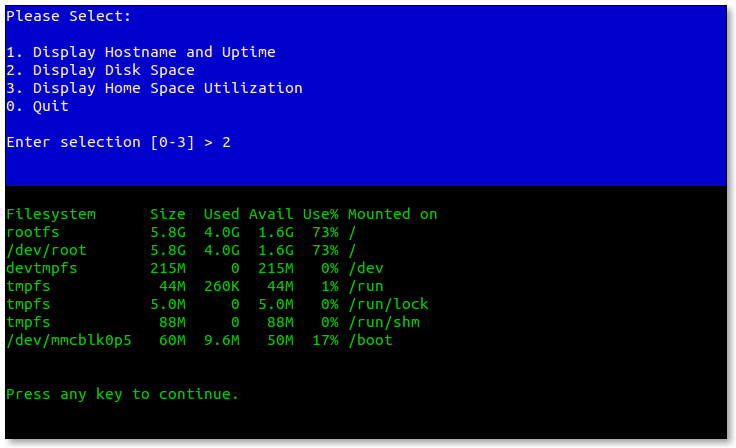

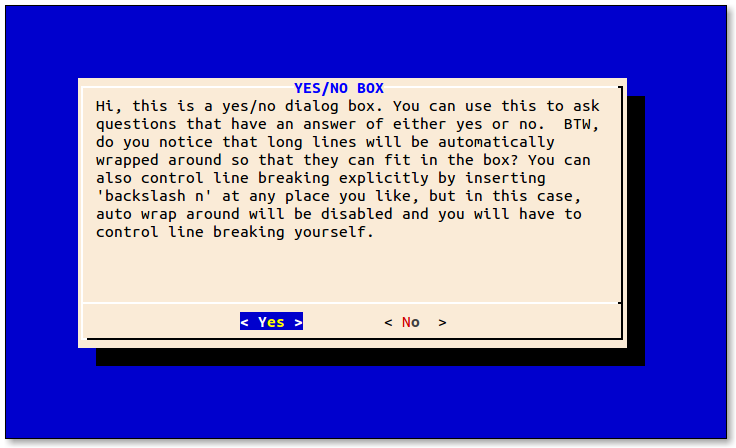

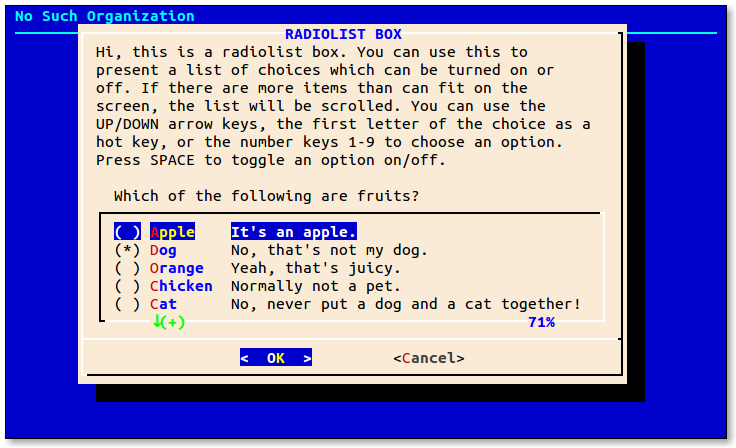

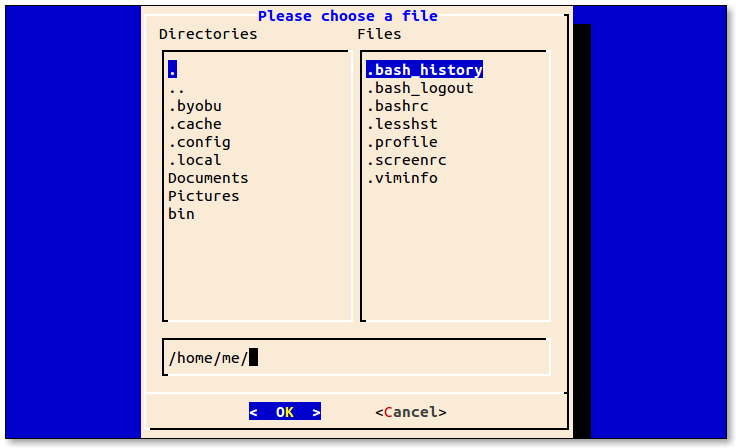

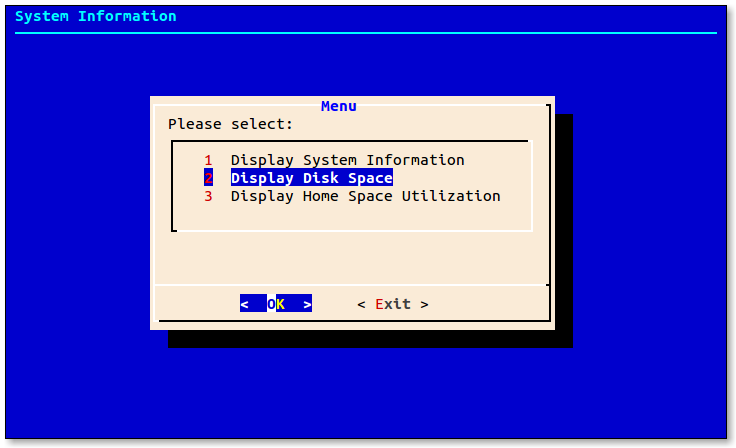

- 5.6: dialog

- 5.7: AWK

- 5.8: Other Shells

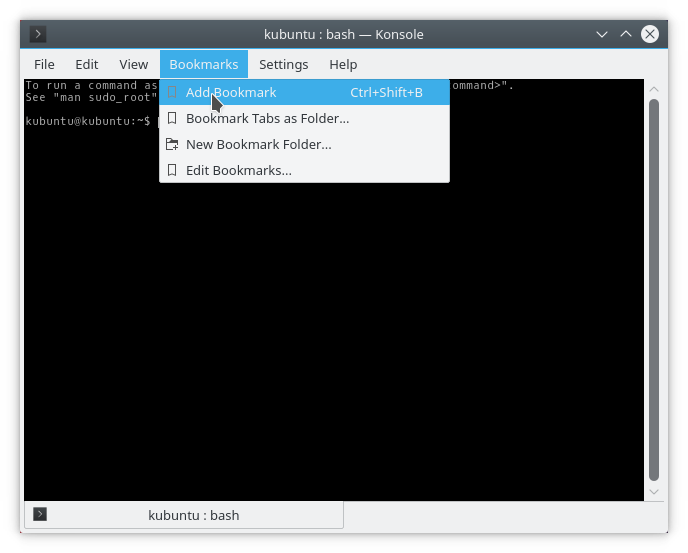

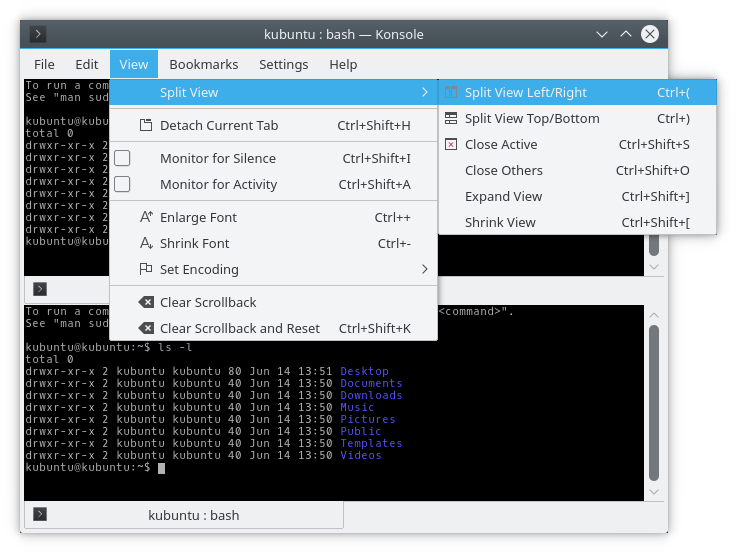

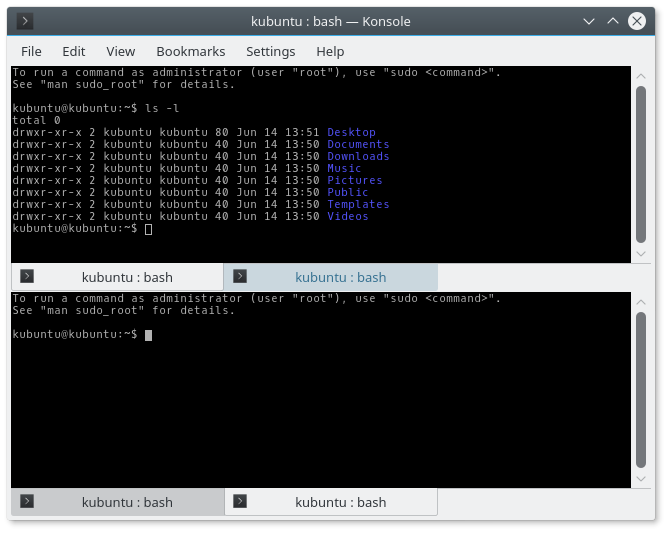

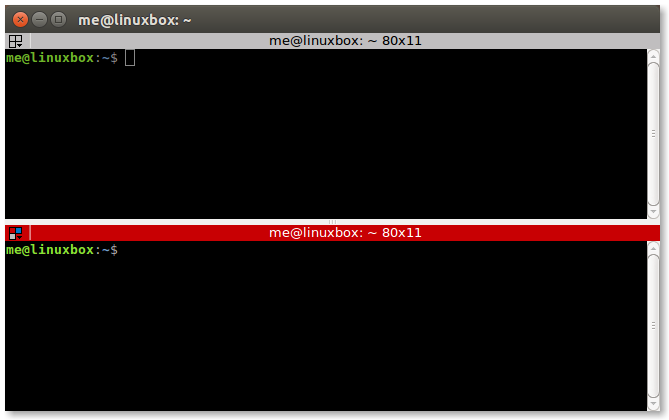

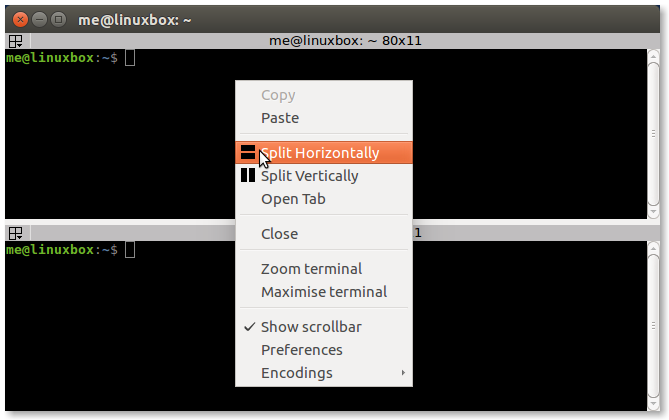

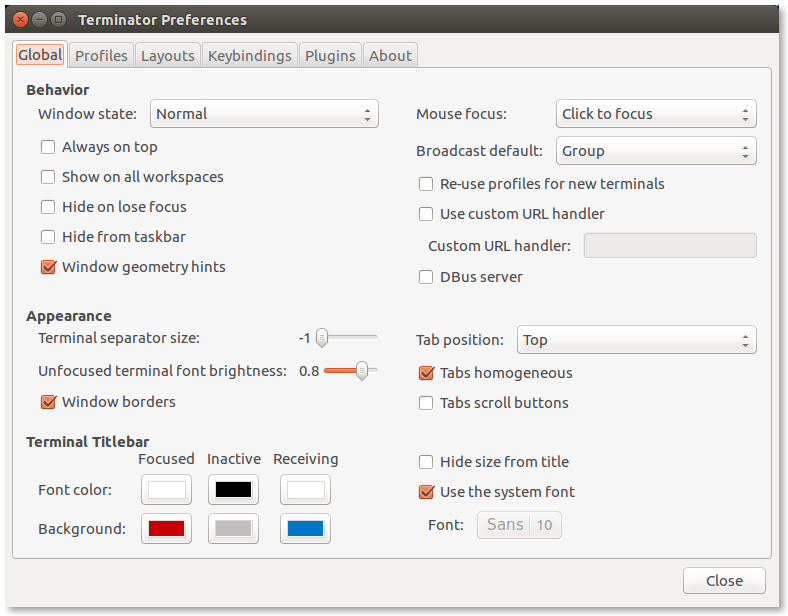

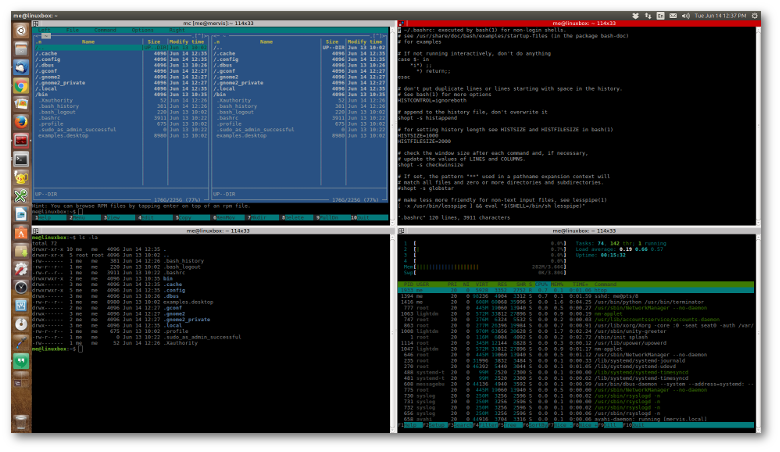

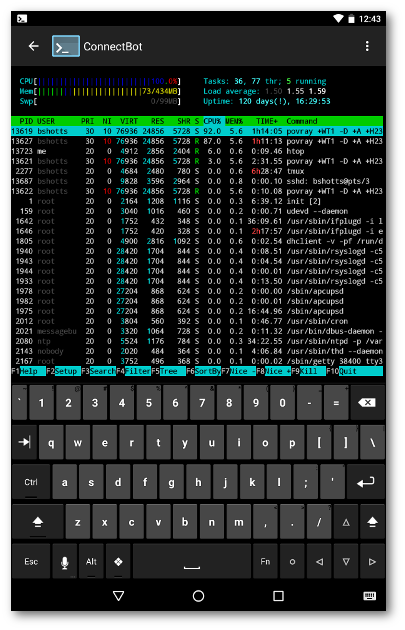

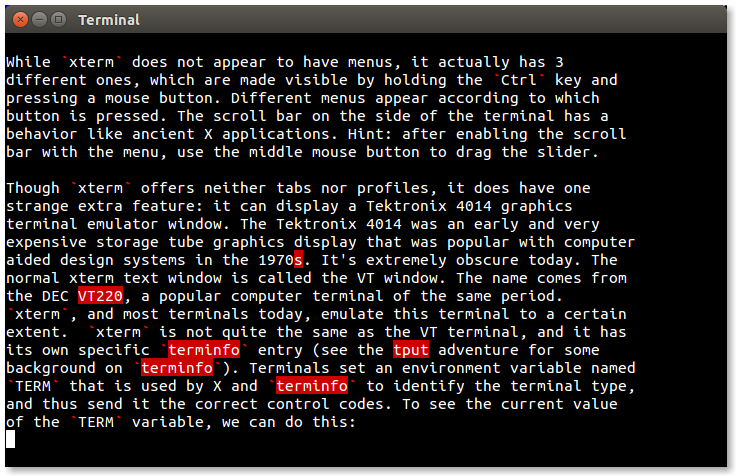

- 5.9: Power Terminals

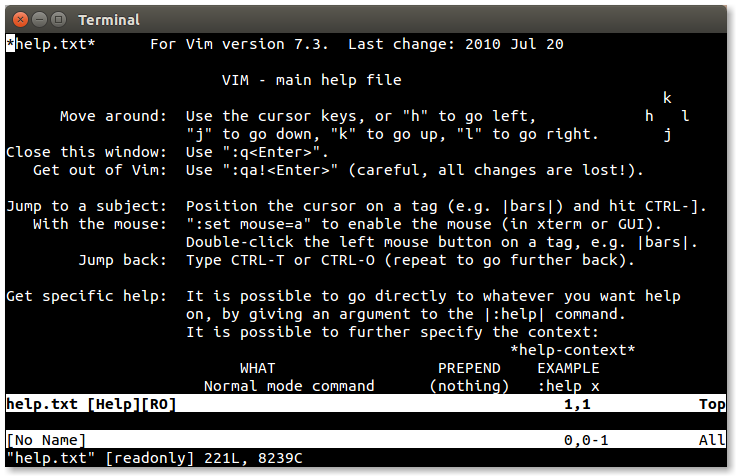

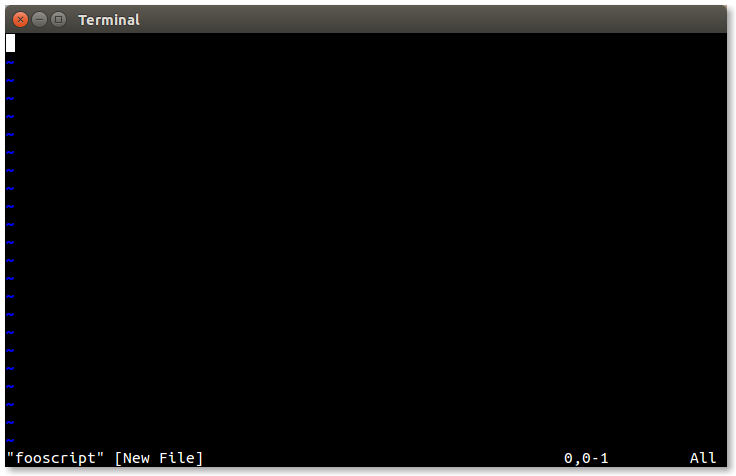

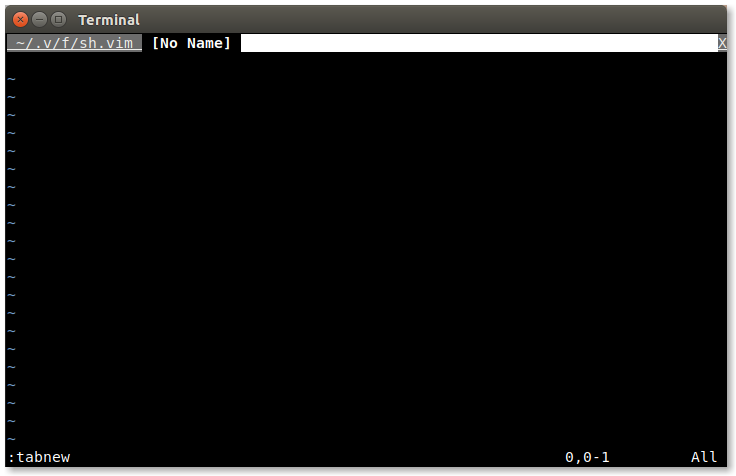

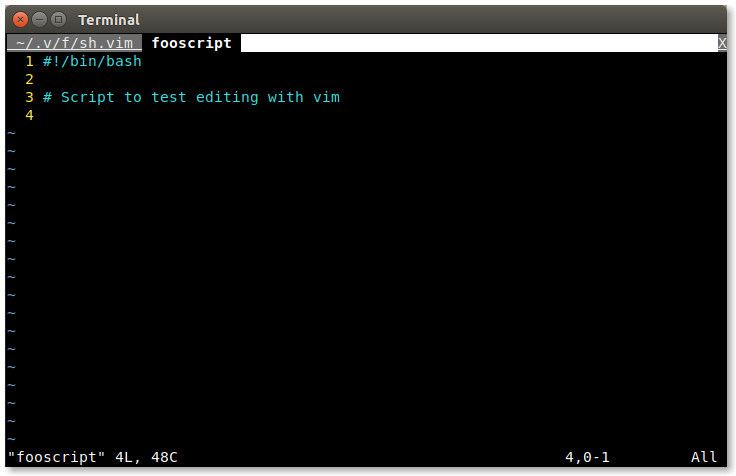

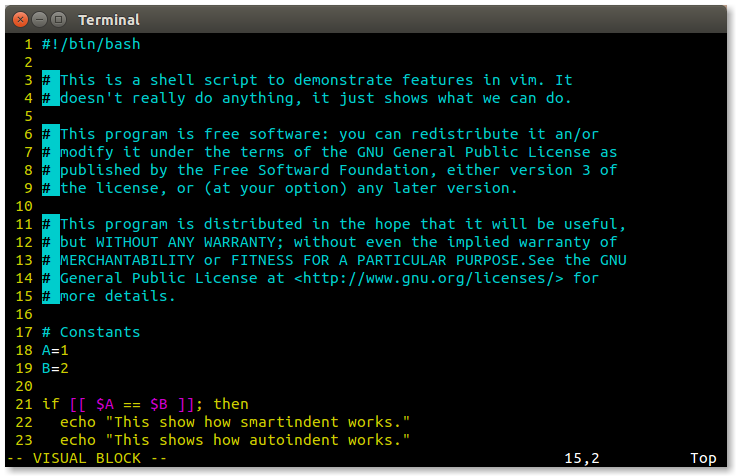

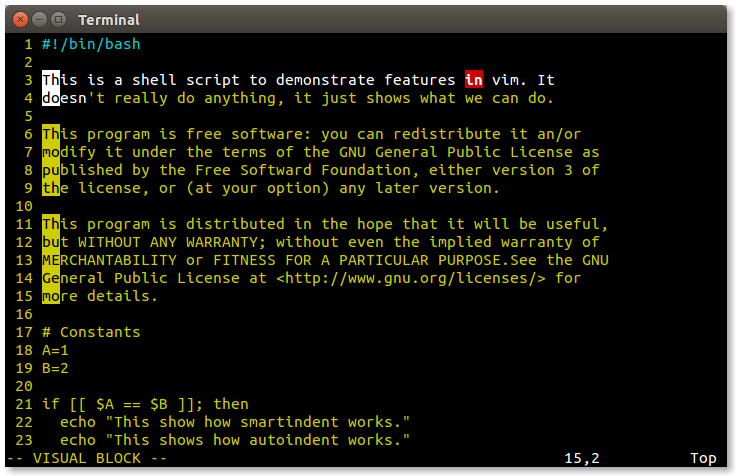

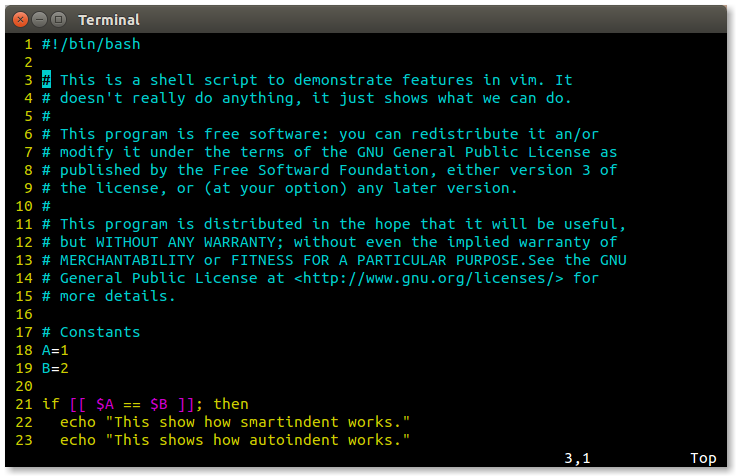

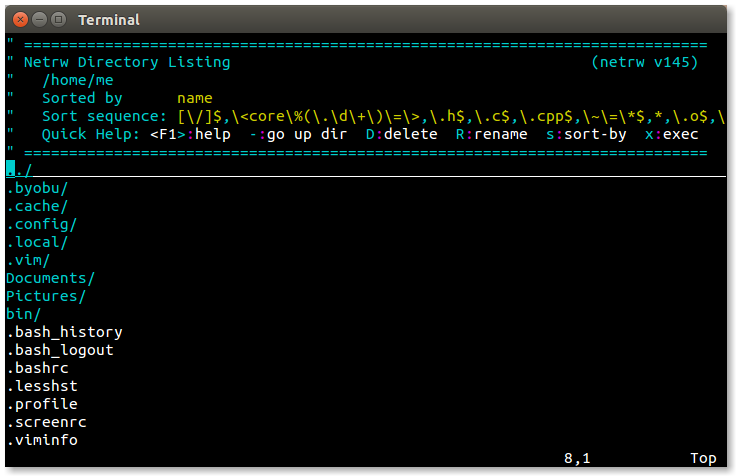

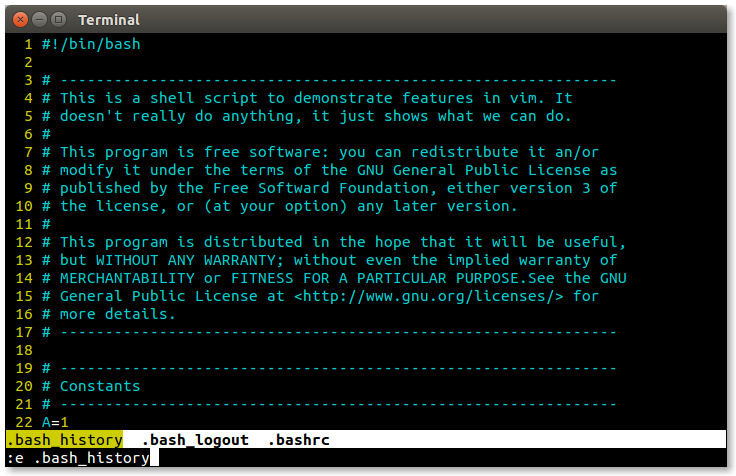

- 5.10: Vim, with Vigor

- 5.11: source

- 5.12: Coding Standards Part 1: Our Own

- 5.13: Coding Standards Part 2: new_script

- 5.14: SQL

- 6: Books

1 - 主页

现在怎么办?Now what?

https://linuxcommand.org/index.php

You have Linux installed and running. The GUI is working fine, but you are getting tired of changing your desktop themes. You keep seeing this “terminal” thing.

你已经安装和运行了Linux。图形用户界面(GUI)运行良好,但你对于更改桌面主题感到厌倦。你一直看到这个"终端"的东西。

Don’t worry, we’ll show you what to do.

别担心,我们会告诉你该做什么。

2 - 学习shell

为什么要费心?Why Bother?

https://linuxcommand.org/lc3_learning_the_shell.php

Why do you need to learn the command line anyway? Well, let me tell you a story. Many years ago we had a problem where I worked. There was a shared drive on one of our file servers that kept getting full. I won’t mention that this legacy operating system did not support user quotas; that’s another story. But the server kept getting full and it stopped people from working. One of our software engineers spent a couple of hours writing a C++ program that would look through all the user’s directories and add up the space they were using and make a listing of the results. Since I was forced to use the legacy OS while I was on the job, I installed a Linux-like command line environment for it. When I heard about the problem, I realized I could perform this task with this single line:

你为什么需要学习命令行?好吧,让我给你讲个故事。很多年前,我工作的地方遇到一个问题。我们的一个文件服务器上有一个共享驱动器,空间总是被占满。我不会提及这个遗留操作系统不支持用户配额的事实,那是另一个故事了。但服务器一直被占满,导致人们无法正常工作。我们的一位软件工程师花了几个小时编写了一个C++程序,用于查找所有用户目录并计算它们使用的空间,并生成一个结果列表。由于我在工作期间被迫使用这个遗留操作系统,我为其安装了一个类似Linux的命令行环境。当我听说这个问题时,我意识到我可以用一行命令来完成这个任务:

| |

Graphical user interfaces (GUIs) are helpful for many tasks, but they are not good for all tasks. I have long felt that most computers today are not powered by electricity. They instead seem to be powered by the “pumping” motion of the mouse. Computers were supposed to free us from manual labor, but how many times have you performed some task you felt sure the computer should be able to do but you ended up doing the work yourself by tediously working the mouse? Pointing and clicking, pointing and clicking.

图形用户界面(GUI)对许多任务很有帮助,但并非对所有任务都适用。我一直觉得现在的大多数计算机不是靠电力驱动,而是靠鼠标的“点击”运动驱动。计算机本应该使我们从体力劳动中解放出来,但你有多少次尝试让计算机完成某项任务,但最终却发现自己不得不费力地操作鼠标来亲自完成工作?指指点点,指指点点。

I once heard an author say that when you are a child you use a computer by looking at the pictures. When you grow up, you learn to read and write. Welcome to Computer Literacy 101. Now let’s get to work.

我曾经听过一位作者说,当你还是个孩子时,你通过看图来使用计算机。当你长大后,你学会了阅读和写作。欢迎来到计算机素养101课程。现在让我们开始工作吧。

2.1 - 什么是 "Shell"?

什么是"Shell"? What is “the Shell”?

https://linuxcommand.org/lc3_lts0010.php

Simply put, the shell is a program that takes commands from the keyboard and gives them to the operating system to perform. In the old days, it was the only user interface available on a Unix-like system such as Linux. Nowadays, we have graphical user interfaces (GUIs) in addition to command line interfaces (CLIs) such as the shell.

简而言之,Shell是一个程序,它从键盘接收命令并将其传递给操作系统执行。在早期,它是类Unix系统(如Linux)上唯一可用的用户界面。如今,除了Shell,我们还有图形用户界面(GUI),例如命令行界面(CLI)。

On most Linux systems a program called bash (which stands for Bourne Again SHell, an enhanced version of the original Unix shell program, sh, written by Steve Bourne) acts as the shell program. Besides bash, there are other shell programs available for Linux systems. These include: ksh, tcsh and zsh.

在大多数Linux系统中,一个名为bash的程序(代表Bourne Again SHell,是原始Unix shell程序sh的增强版本,由Steve Bourne编写)充当Shell程序。除了bash,Linux系统还有其他可用的Shell程序,包括:ksh、tcsh和zsh。

什么是"终端"?What’s a “Terminal?”

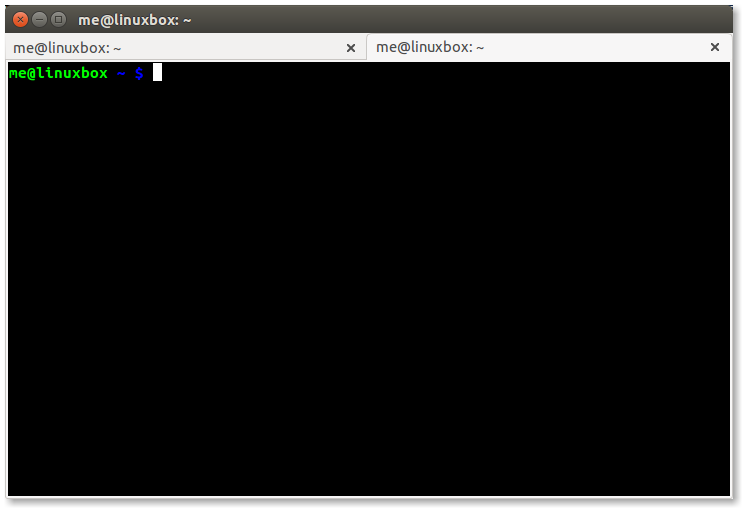

It’s a program called a terminal emulator. This is a program that opens a window and lets you interact with the shell. There are a bunch of different terminal emulators we can use. Some Linux distributions install several. These might include gnome-terminal, konsole, xterm, rxvt, kvt, nxterm, and eterm.

它是一个被称为终端仿真器的程序。它打开一个窗口,让你与Shell进行交互。我们可以使用许多不同的终端仿真器。一些Linux发行版会安装多个终端仿真器。它们可能包括gnome-terminal、konsole、xterm、rxvt、kvt、nxterm和eterm。

启动终端 Starting a Terminal

Window managers usually have a way to launch a terminal from the menu. Look through the list of programs to see if anything looks like a terminal emulator. While there are a number of different terminal emulators, they all do the same thing. They give us access to a shell session. You will probably develop a preference for one, based on the different bells and whistles it provides.

窗口管理器通常有一种从菜单启动终端的方法。浏览程序列表,看看是否有类似终端仿真器的选项。虽然有许多不同的终端仿真器,但它们都具有相同的功能。它们给我们提供了访问Shell会话的途径。你可能会根据它们提供的不同特性和功能,对其中的某个终端仿真器有所偏好。

测试键盘输入 Testing the Keyboard

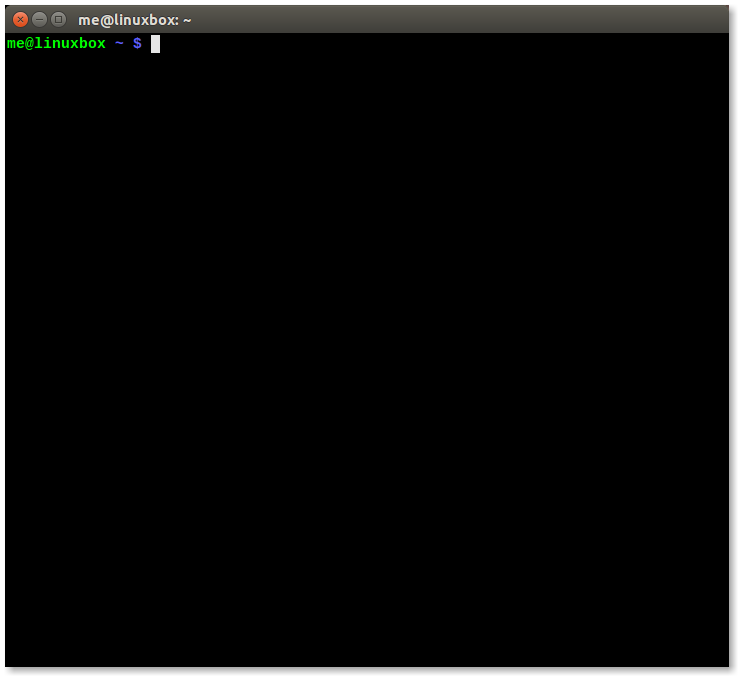

OK, let’s try some typing. Bring up a terminal window. The first thing we should see is a shell prompt that contains our user name and the name of the machine followed by a dollar sign. Something like this:

好的,让我们试着输入一些内容。打开一个终端窗口。我们应该首先看到一个包含我们的用户名和计算机名称后面跟着一个美元符号的Shell提示符。类似这样:

| |

Excellent! Now type some nonsense characters and press the enter key.

太棒了!现在输入一些无意义的字符,然后按回车键。

| |

If all went well, we should have gotten an error message complaining that it cannot understand the command:

如果一切正常,我们应该会收到一个错误消息,说明它无法理解该命令:

| |

Wonderful! Now press the up-arrow key. Watch how our previous command “kdkjflajfks” returns. Yes, we have command history. Press the down-arrow and we get the blank line again.

太好了!现在按向上箭头键。注意我们之前的命令"kdkjflajfks"会重新出现。是的,我们有命令历史记录。按向下箭头键,我们又回到了空白行。

Recall the “kdkjflajfks” command using the up-arrow key if needed. Now, try the left and right-arrow keys. We can position the text cursor anywhere in the command line. This allows us to easily correct mistakes.

如果需要,可以使用向上箭头键来重新调用"kdkjflajfks"命令。现在,试试左右箭头键。我们可以将文本光标定位在命令行的任何位置。这使我们可以轻松纠正错误。

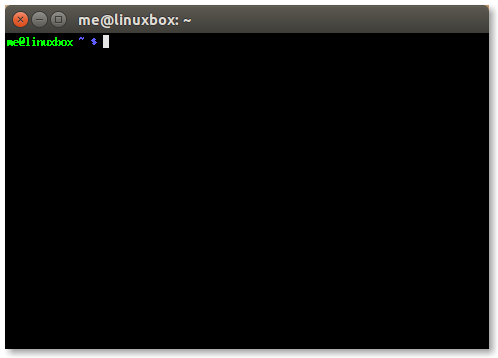

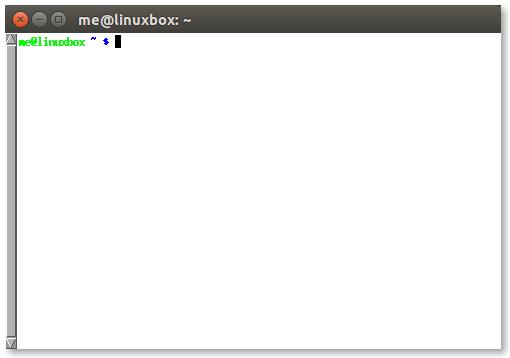

你没有以root用户身份操作,对吗? You’re not operating as root, are you?

If the last character of your shell prompt is # rather than $, you are operating as the superuser. This means that you have administrative privileges. This can be dangerous, since you are able to delete or overwrite any file on the system. Unless you absolutely need administrative privileges, do not operate as the superuser.

如果你的Shell提示符的最后一个字符是

#而不是$,那么你正在以超级用户的身份操作。这意味着你拥有管理员特权。这可能是危险的,因为你可以删除或覆盖系统上的任何文件。除非你绝对需要管理员特权,否则不要以超级用户身份操作。

使用鼠标 Using the Mouse

Even though the shell is a command line interface, the mouse is still handy.

虽然Shell是一个命令行界面,但鼠标仍然很方便。

Besides using the mouse to scroll the contents of the terminal window, we can can use it to copy text. Drag the mouse over some text (for example, “kdkjflajfks” right here on the browser window) while holding down the left button. The text should highlight. Release the left button and move the mouse pointer to the terminal window and press the middle mouse button (alternately, press both the left and right buttons at the same time when working on a touch pad). The text we highlighted in the browser window should be copied into the command line.

除了使用鼠标滚动终端窗口的内容,我们还可以使用它来复制文本。按住左键,拖动鼠标在一些文本上(例如,在浏览器窗口中的"kdkjflajfks"),文本将被突出显示。释放左键,将鼠标指针移动到终端窗口上,并按下中间鼠标按钮(或者在触摸板上同时按下左键和右键)。我们在浏览器窗口中突出显示的文本将被复制到命令行中。

关于焦点的一些说明… A few words about focus…

When you installed your Linux system and its window manager (most likely Gnome or KDE), it was configured to behave in some ways like that legacy operating system.

当你安装Linux系统及其窗口管理器(很可能是Gnome或KDE)时,它会配置成某种程度上与那个遗留操作系统类似的行为。

In particular, it probably has its focus policy set to “click to focus.” This means that in order for a window to gain focus (become active) you have to click in the window. This is contrary to traditional X Window behavior. You should consider setting the focus policy to “focus follows mouse”. You may find it strange at first that windows don’t raise to the front when they get focus (you have to click on the window to do that), but you will enjoy being able to work on more than one window at once without having the active window obscuring the other. Try it and give it a fair trial; I think you will like it. You can find this setting in the configuration tools for your window manager.

特别是,它可能将其焦点策略设置为"点击获取焦点"。这意味着为了使窗口获得焦点(变为活动状态),你必须在窗口中点击。这与传统的X Window行为相反。你应该考虑将焦点策略设置为"焦点跟随鼠标"。起初,窗口不会在获得焦点时自动提到前台可能会让你感到奇怪(你必须点击窗口才能做到),但你将享受到能够同时在多个窗口上工作而不会被活动窗口遮挡的便利。尝试一下并进行充分试用;我相信你会喜欢它的。你可以在窗口管理器的配置工具中找到此设置。

进一步阅读 Further Reading

- The Wikipedia entry for Steve Bourne, developer of the original Bourne shell

- The Wikipedia article on the Unix shell, the place where all this fun got started

- The “Power Terminals” Adventure

- Steve Bourne的维基百科页面,原始Bourne shell的开发者

- Unix Shell的维基百科文章,这就是所有这些有趣的东西开始的地方

- “Power Terminals” 探险

2.2 - 导航

导航 Navigation

https://linuxcommand.org/lc3_lts0020.php

In this lesson, we will introduce our first three commands: pwd (print working directory), cd (change directory), and ls (list files and directories).

在本课程中,我们将介绍我们的前三个命令:pwd(打印当前工作目录)、cd(切换目录)和ls(列出文件和目录)。

Those new to the command line will need to pay close attention to this lesson since the concepts will take some getting used to.

对于命令行不熟悉的人来说,需要特别注意这节课,因为这些概念需要一些时间来适应。

文件系统组织 File System Organization

Like Windows, the files on a Linux system are arranged in what is called a hierarchical directory structure. This means that they are organized in a tree-like pattern of directories (called folders in other systems), which may contain files and subdirectories. The first directory in the file system is called the root directory. The root directory contains files and subdirectories, which contain more files and subdirectories and so on and so on.

与Windows一样,Linux系统上的文件以所谓的分层目录结构进行排列。这意味着它们以一种类似树状的模式组织在目录中(其他系统中称为文件夹),这些目录中可能包含文件和子目录。文件系统中的第一个目录称为根目录。根目录包含文件和子目录,这些子目录又包含更多的文件和子目录,以此类推。

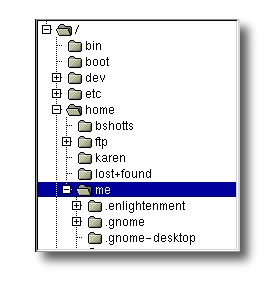

Most graphical environments include a file manager program used to view and manipulate the contents of the file system. Often we will see the file system represented like this:

大多数图形界面都包含一个文件管理器程序,用于查看和操作文件系统的内容。通常,我们会看到文件系统以这样的方式表示:

One important difference between Windows and Unix-like operating systems such as Linux is that Linux does not employ the concept of drive letters. While Windows drive letters split the file system into a series of different trees (one for each device), Linux always has a single tree. Different storage devices may be different branches of the tree, but there is always just a single tree.

Windows和Linux等类Unix操作系统之间一个重要的区别是,Linux不使用驱动器字母的概念。而Windows的驱动器字母将文件系统分割为一系列不同的树(每个设备对应一个树),Linux始终只有一棵树。不同的存储设备可能是树的不同分支,但始终只有一棵树。

pwd

Since the command line interface cannot provide graphic pictures of the file system structure, we must have a different way of representing it. To do this, think of the file system tree as a maze, and that we are standing in it. At any given moment, we are located in a single directory. Inside that directory, we can see its files and the pathway to its parent directory and the pathways to the subdirectories of the directory in which we are standing.

由于命令行界面无法提供文件系统结构的图形图片,我们必须采用不同的方式来表示它。为此,将文件系统树视为一个迷宫,并且我们正在其中。在任何给定的时刻,我们位于单个目录中。在该目录中,我们可以看到其文件以及到其父目录和所处目录的子目录的路径。

The directory we are standing in is called the working directory. To see the name of the working directory, we use the pwd command.

我们所处的目录称为工作目录。要查看工作目录的名称,我们使用pwd命令。

| |

When we first log on to our Linux system, the working directory is set to our home directory. This is where we put our files. On most systems, the home directory will be called /home/user_name, but it can be anything according to the whims of the system administrator.

当我们首次登录到Linux系统时,工作目录设置为我们的主目录。这是我们放置文件的地方。在大多数系统上,主目录将被称为/home/user_name,但根据系统管理员的喜好,它可以是任何名称。

To list the files in the working directory, we use the ls command.

要列出工作目录中的文件,我们使用ls命令。

| |

We will come back to ls in the next lesson. There are a lot of fun things you can do with it, but we have to talk about pathnames and directories a bit first.

我们将在下一课中回到ls命令。你可以用它做很多有趣的事情,但首先我们需要讨论路径名和目录的一些内容。

cd

To change the working directory (where we are standing in the maze) we use the cd command. To do this, we type cd followed by the pathname of the desired working directory. A pathname is the route we take along the branches of the tree to get to the directory we want. Pathnames can be specified two different ways; absolute pathnames or relative pathnames. Let’s look with absolute pathnames first.

要更改工作目录(我们在迷宫中的位置),我们使用cd命令。为此,我们输入cd,然后输入所需工作目录的路径名。路径名是我们沿树的分支行进以到达所需目录或文件的路径。路径名可以用两种不同的方式指定:绝对路径名或相对路径名。让我们首先看一下绝对路径名。

An absolute pathname begins with the root directory and follows the tree branch by branch until the path to the desired directory or file is completed. For example, there is a directory on your system in which most programs are installed. The pathname of the directory is /usr/bin. This means from the root directory (represented by the leading slash in the pathname) there is a directory called “usr” which contains a directory called “bin”.

绝对路径名以根目录开始,沿树的每个分支一直到完成到达所需目录或文件的路径。例如,你的系统上有一个大多数程序安装在其中的目录。该目录的路径名为/usr/bin。这意味着从根目录(路径名中的前导斜杠表示根目录)开始,有一个名为"usr"的目录,其中包含一个名为"bin"的目录。

Let’s try this out:

让我们试一下:

| |

Now we can see that we have changed the current working directory to /usr/bin and that it is full of files. Notice how the shell prompt has changed? As a convenience, it is usually set up to display the name of the working directory.

现在我们可以看到我们已经将当前工作目录更改为/usr/bin,而且它充满了文件。注意到shell提示符已经改变了吗?作为方便起见,它通常设置为显示工作目录的名称。

Where an absolute pathname starts from the root directory and leads to its destination, a relative pathname starts from the working directory. To do this, it uses a couple of special notations to represent relative positions in the file system tree. These special notations are “.” (dot) and “..” (dot dot).

绝对路径名从根目录开始并导向目标目录,而相对路径名从工作目录开始。为此,它使用两个特殊符号来表示文件系统树中的相对位置。这些特殊符号是"."(点)和".."(点点)。

The “.” notation refers to the working directory itself and the “..” notation refers to the working directory’s parent directory. Here is how it works. Let’s change the working directory to /usr/bin again:

“.“符号表示工作目录本身,”..“符号表示工作目录的父目录。以下是它的工作原理。让我们再次将工作目录更改为/usr/bin:

| |

O.K., now let’s say that we wanted to change the working directory to the parent of /usr/bin which is /usr. We could do that two different ways. First, with an absolute pathname:

好了,现在假设我们想将工作目录更改为/usr/bin的父目录,即/usr。我们可以用两种不同的方式来做到这一点。首先,使用绝对路径名:

| |

Or, with a relative pathname:

或者,使用相对路径名:

| |

Two different methods with identical results. Which one should we use? The one that requires the least typing!

两种方法得到相同的结果。我们应该使用哪个方法呢?使用输入最少的那个!

Likewise, we can change the working directory from /usr to /usr/bin in two different ways. First using an absolute pathname:

同样地,我们可以用两种不同的方式将工作目录从/usr更改为/usr/bin。首先使用绝对路径名:

| |

Or, with a relative pathname:

或者,使用相对路径名:

| |

Now, there is something important that we must point out here. In most cases, we can omit the “./”. It is implied. Typing:

现在,这里有一些重要的事情要指出。在大多数情况下,我们可以省略”./",它会被默认添加。输入:

| |

would do the same thing. In general, if we do not specify a pathname to something, the working directory will be assumed. There is one important exception to this, but we won’t get to that for a while.

将会产生相同的效果。通常情况下,如果我们没有指定路径名,那么将假定为工作目录。但有一个重要的例外,不过我们要等一会儿再讨论它。

一些快捷方式 A Few Shortcuts

If we type cd followed by nothing, cd will change the working directory to our home directory.

如果我们只输入cd而不跟任何内容,cd命令将会将工作目录切换为我们的主目录。

A related shortcut is to type cd ~user_name. In this case, cd will change the working directory to the home directory of the specified user.

相关的快捷方式是输入cd ~user_name。这种情况下,cd将会将工作目录切换为指定用户的主目录。

Typing cd - changes the working directory to the previous one.

输入cd -将工作目录切换为上一个目录。

文件名的重要事实 Important facts about file names

- File names that begin with a period character are hidden. This only means that

lswill not list them unless we sayls -a. When your account was created, several hidden files were placed in your home directory to configure things for your account. Later on we will take a closer look at some of these files to see how you can customize our environment. In addition, some applications will place their configuration and settings files in your home directory as hidden files. - 以句点字符开头的文件名是隐藏的。这仅意味着除非我们使用

ls -a命令,否则ls不会列出它们。在创建您的帐户时,系统会在主目录中放置一些隐藏文件来为您的帐户配置一些东西。稍后我们将仔细查看其中的一些文件,了解如何自定义我们的环境。此外,一些应用程序会将它们的配置和设置文件作为隐藏文件放置在您的主目录中。 - File names in Linux, like Unix, are case sensitive. The file names “File1” and “file1” refer to different files.

- Linux中的文件名(如Unix)区分大小写。文件名"File1"和"file1"表示不同的文件。

- Linux has no concept of a “file extension” like Windows systems. You may name files any way you like. However, while Linux itself does not care about file extensions, many application programs do.

- Linux没有类似于Windows系统的"文件扩展名"的概念。您可以按任意方式命名文件。但是,尽管Linux本身不关心文件扩展名,但许多应用程序程序则关心。

- Though Linux supports long file names which may contain embedded spaces and punctuation characters, limit the punctuation characters to period, dash, and underscore. Most importantly, do not embed spaces in file names. If you want to represent spaces between words in a file name, use underscore characters. You will thank yourself later.

- 虽然Linux支持长文件名,可以包含嵌入的空格和标点符号字符,但标点符号字符应限制为句点、破折号和下划线。最重要的是,不要在文件名中嵌入空格。如果要表示文件名中的单词之间的空格,请使用下划线字符。以后您会感谢自己这么做。

2.3 - 四处浏览

四处浏览 Looking Around

https://linuxcommand.org/lc3_lts0030.php

Now that we know how to move from working directory to working directory, we’re going to take a tour of our Linux system and, along the way, learn some things about what makes it tick. But before we begin, we have to learn about some tools that will come in handy during our journey. These are:

现在我们知道如何从一个工作目录移动到另一个工作目录了,我们将在 Linux 系统中进行一次浏览,并在此过程中了解一些关于 Linux 运行的原理的知识。但在开始之前,我们必须学习一些在我们的旅程中会派上用场的工具。它们是:

ls(list files and directories)less(view text files)file(classify a file’s contents)ls(列出文件和目录)less(查看文本文件)file(对文件进行分类)

ls

The ls command is used to list the contents of a directory. It is probably the most commonly used Linux command. It can be used in a number of different ways. Here are some examples:

ls 命令用于列出目录的内容。它可能是最常用的 Linux 命令之一。它可以以多种不同的方式使用。以下是一些示例:

| 命令 Command | 结果 Result |

|---|---|

ls | 列出当前工作目录中的文件 List the files in the working directory |

ls /bin | 列出 /bin 目录中的文件(或其他任何我们关心的目录)List the files in the /bin directory (or any other directory we care to specify) |

ls -l | 以长格式列出当前工作目录中的文件 List the files in the working directory in long format |

ls -l /etc /bin | 以长格式列出 /bin 目录和 /etc 目录中的文件List the files in the /bin directory and the /etc directory in long format |

ls -la .. | 以长格式列出父目录中的所有文件(甚至是以句点字符开头的文件,通常是隐藏的) List all files (even ones with names beginning with a period character, which are normally hidden) in the parent of the working directory in long format |

These examples also point out an important concept about commands. Most commands operate like this:

这些示例还指出了关于命令的一个重要概念。大多数命令的操作方式类似于:

command -options arguments

where command is the name of the command, -options is one or more adjustments to the command’s behavior, and arguments is one or more “things” upon which the command operates.

其中 command 是命令的名称,-options 是对命令行为进行的一个或多个调整,arguments 是命令操作的一个或多个"东西"。

In the case of ls, we see that ls is the name of the command, and that it can have one or more options, such as -a and -l, and it can operate on one or more files or directories.

在 ls 的情况下,我们可以看到 ls 是命令的名称,它可以有一个或多个选项,比如 -a 和 -l,并且可以操作一个或多个文件或目录。

更详细的长格式 A Closer Look at Long Format

If we use the -l option with ls, you will get a file listing that contains a wealth of information about the files being listed. Here’s an example:

如果我们在 ls 命令中使用 -l 选项,将会得到一个包含有关所列出文件的丰富信息的文件列表。这里有一个示例:

-rw------- 1 me me 576 Apr 17 2019 weather.txt

drwxr-xr-x 6 me me 1024 Oct 9 2019 web_page

-rw-rw-r-- 1 me me 276480 Feb 11 20:41 web_site.tar

-rw------- 1 me me 5743 Dec 16 2018 xmas_file.txt

---------- ------- ------- -------- ------------ -------------

| | | | | |

| | | | | File Name

| | | | |

| | | | +--- Modification Time

| | | |

| | | +------------- Size (in bytes)

| | |

| | +----------------------- Group

| |

| +-------------------------------- Owner

|

+---------------------------------------------- File Permissions

文件名 File Name

The name of the file or directory.

文件或目录的名称。

修改时间 Modification Time

The last time the file was modified. If the last modification occurred more than six months in the past, the date and year are displayed. Otherwise, the time of day is shown.

文件最后一次修改的时间。如果最后一次修改是在六个月以上之前,将显示日期和年份。否则,显示具体时间。

大小 Size

The size of the file in bytes.

文件的大小(以字节为单位)。

组 Group

The name of the group that has file permissions in addition to the file’s owner.

除了文件所有者外,具有文件权限的组的名称。

所有者 Owner

The name of the user who owns the file.

文件的所有者的用户名。

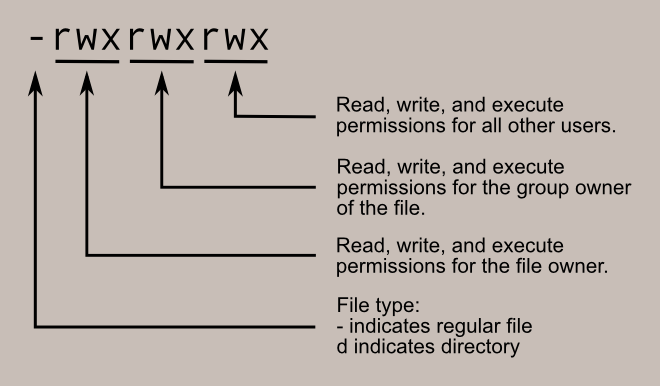

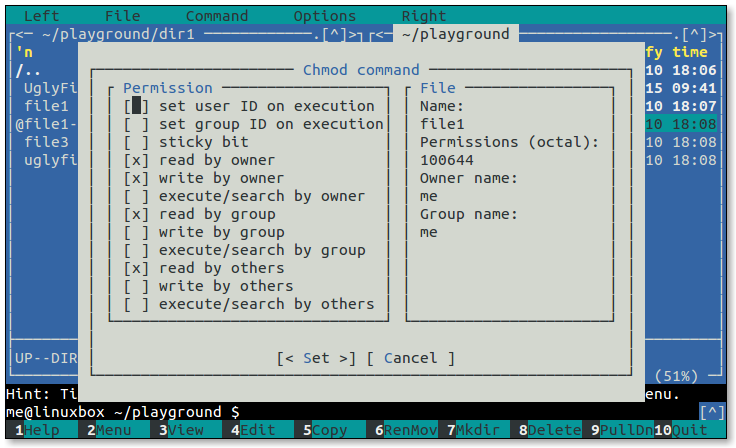

文件权限 File Permissions

A representation of the file’s access permissions. The first character is the type of file. A “-” indicates a regular (ordinary) file. A “d” indicates a directory. The second set of three characters represent the read, write, and execution rights of the file’s owner. The next three represent the rights of the file’s group, and the final three represent the rights granted to everybody else. We’ll discuss this in more detail in a later lesson.

文件访问权限的表示形式。第一个字符表示文件的类型。"-" 表示普通文件,“d” 表示目录。接下来的三个字符表示文件所有者的读取、写入和执行权限。接下来的三个字符表示文件所属组的权限,最后的三个字符表示授予其他人的权限。我们将在以后的课程中详细讨论这个。

less

less is a program that lets us view text files. This is very handy since many of the files used to control and configure Linux are human readable.

less 是一个用于查看文本文件的程序。这非常方便,因为用于控制和配置 Linux 的许多文件都是可读的。

什么是"文本"? What is “text”?

There are many ways to represent information on a computer. All methods involve defining a relationship between the information and some numbers that will be used to represent it. Computers, after all, only understand numbers and all data is converted to numeric representation.

在计算机上表示信息有许多方式。所有的方法都涉及将信息与一些数字之间的关系定义起来,这些数字将用于表示它。毕竟,计算机只能理解数字,所有的数据都被转换为数字表示。

Some of these representation systems are very complex (such as compressed multimedia files), while others are rather simple. One of the earliest and simplest is called ASCII text. ASCII (pronounced “As-Key”) is short for American Standard Code for Information Interchange. This is a simple encoding scheme that was first used on Teletype machines to map keyboard characters to numbers.

其中一些表示系统非常复杂(例如压缩的多媒体文件),而其他一些则相当简单。其中一种最早和最简单的表示系统被称为 ASCII 文本。ASCII(发音为"As-Key")是美国信息交换标准代码的缩写。这是一种简单的编码方案,最初在电传打字机上用于将键盘字符映射到数字。

Text is a simple one-to-one mapping of characters to numbers. It is very compact. Fifty characters of text translates to fifty bytes of data. Throughout a Linux system, many files are stored in text format and there are many Linux tools that work with text files. Even Windows systems recognize the importance of this format. The well-known NOTEPAD.EXE program is an editor for plain ASCII text files.

文本是字符与数字之间的简单一对一映射。它非常紧凑。五十个字符的文本转换为五十个字节的数据。在 Linux 系统中,许多文件都以文本格式存储,并且有许多 Linux 工具可以处理文本文件。即使在 Windows 系统中,也认识到这种格式的重要性。著名的 NOTEPAD.EXE 程序就是用于编辑纯 ASCII 文本文件的编辑器。

The less program is invoked by simply typing:

通过简单地键入以下命令即可调用 less 程序:

less text_file

This will display the file.

这将显示文件内容。

控制 less - Controlling less

Once started, less will display the text file one page at a time. We can use the Page Up and Page Down keys to move through the text file. To exit less, we type “q”. Here are some commands that less will accept:

启动后,less 将逐页显示文本文件。我们可以使用 Page Up 和 Page Down 键在文本文件中进行导航。要退出 less,我们输入 “q”。以下

| 命令 Command | 操作 Action |

|---|---|

| Page Up or b | 向上滚动一页 Scroll back one page |

| Page Down or space | 向下滚动一页 Scroll forward one page |

| G | 转到文本文件的末尾 Go to the end of the text file |

| 1G | 转到文本文件的开头 Go to the beginning of the text file |

| /characters | 在文本文件中向前搜索指定的 characters Search forward in the text file for an occurrence of the specified characters |

| n | 重复上一次搜索 Repeat the previous search |

| h | 显示完整的 less 命令和选项列表Display a complete list less commands and options |

| q | 退出 lessQuit |

file

As we wander around our Linux system, it is helpful to determine what kind of data a file contains before we try to view it. This is where the file command comes in. file will examine a file and tell us what kind of file it is.

在我们浏览 Linux 系统时,在尝试查看文件之前,确定文件包含的数据类型很有帮助。这就是 file 命令的用处。file 命令将检查一个文件并告诉我们它是什么类型的文件。

To use the file program, we just type:

要使用 file 程序,只需键入:

file name_of_file

The file program can recognize most types of files, such as:

file 程序可以识别大多数类型的文件,例如:

| 文件类型 File Type | 描述 Description | 可作为文本查看?Viewable as text? |

|---|---|---|

| ASCII text | 名称已经说得很清楚了 The name says it all | yes |

| Bourne-Again shell script text | 一个 bash 脚本A bash script | yes |

| ELF 64-bit LSB executable | 可执行二进制程序 An executable binary program | no |

| ELF 64-bit LSB shared object | 共享库 A shared library | no |

| GNU tar archive | 录音带存档文件。一种常见的存储文件组的方式 A tape archive file. A common way of storing groups of files. | no, use tar tvf to view listing. |

| gzip compressed data | 用 gzip 压缩的归档An archive compressed with gzip | no |

| HTML document text | 网页 A web page | yes |

| JPEG image data | 压缩的 JPEG 图像 A compressed JPEG image | no |

| PostScript document text | 一个 PostScript 文件 A PostScript file | yes |

| Zip archive data | 用 zip 压缩的归档An archive compressed with zip | no |

While it may seem that most files cannot be viewed as text, a surprising number can be. This is especially true of the important configuration files. During our adventure we will see that many features of the operating system are controlled by text configuration files and shell scripts. In Linux, there are no secrets!

虽然看起来大多数文件都不能作为文本查看,但实际上有相当多的文件可以。这在重要的配置文件中尤其如此。在我们的探险中,我们将看到操作系统的许多功能都由文本配置文件和 shell 脚本控制。在 Linux 中,没有秘密!

2.4 - 导览

导览 - A Guided Tour

https://linuxcommand.org/lc3_lts0040.php

It’s time to take our tour. The table below lists some interesting places to explore. This is by no means a complete list, but it should prove to be an interesting adventure. For each of the directories listed below, do the following:

是时候开始我们的导览了。下面的表格列出了一些有趣的探索地点。这并不是一个完整的列表,但应该会是一次有趣的冒险。对于下面列出的每个目录,执行以下操作:

cdinto each directory.- 使用

cd进入每个目录。 - Use

lsto list the contents of the directory. - 使用

ls列出目录的内容。 - If there is an interesting file, use the

filecommand to determine its contents. - 如果有一个有趣的文件,请使用

file命令确定其内容。 - For text files, use

lessto view them. - 对于文本文件,使用

less查看它们。

| 目录 Directory | 描述 Description |

|---|---|

/ | 文件系统开始的根目录。根目录可能只包含子目录。 The root directory where the file system begins. The root directory will probably contain only subdirectories. |

/boot | Linux 内核和引导加载程序文件存放的地方。内核是一个名为 vmlinuz 的文件。This is where the Linux kernel and boot loader files are kept. The kernel is a file called vmlinuz. |

/etc | /etc 目录包含系统的配置文件。/etc 中的所有文件都应该是文本文件。一些感兴趣的地方有:/etc/passwd passwd 文件包含每个用户的基本信息。这是定义用户帐户的地方。/etc/fstab fstab 文件包含系统引导时挂载的设备表。此文件定义系统的磁盘驱动器。/etc/hosts 此文件列出系统内在本质上已知的网络主机名和 IP 地址。/etc/init.d 此目录包含在引导时启动各种系统服务的脚本。The /etc directory contains the configuration files for the system. All of the files in /etc should be text files. Some points of interest are:/etc/passwdThe passwd file contains the essential information for each user. This is where user accounts are defined./etc/fstabThe fstab file contains a table of devices that get mounted when the system boots. This file defines the system’s disk drives./etc/hostsThis file lists the network host names and IP addresses that are intrinsically known to the system./etc/init.dThis directory contains the scripts that start various system services at boot time. |

/bin, /usr/bin | 这两个目录包含系统的大多数程序。/bin 目录包含系统运行所需的基本程序,而 /usr/bin 包含系统用户的应用程序。These two directories contain most of the programs for the system. The /bin directory has the essential programs that the system requires to operate, while /usr/bin contains applications for the system’s users. |

/sbin, /usr/sbin | sbin 目录包含系统管理的程序,主要供超级用户使用。The sbin directories contain programs for system administration, mostly for use by the superuser. |

/usr | /usr 目录包含支持用户应用程序的各种内容。一些亮点:/usr/share/X11 X Window 系统的支持文件。/usr/share/dict 拼写检查器的词典。是的,Linux 自带拼写检查器。参见 look 和 aspell。/usr/share/doc 各种格式的文档文件。/usr/share/man 手册页保存在这里。The /usr directory contains a variety of things that support user applications. Some highlights:/usr/share/X11Support files for the X Window system/usr/share/dictDictionaries for the spelling checker. Yes, Linux comes with a spelling checker. See look and aspell./usr/share/docVarious documentation files in a variety of formats./usr/share/manThe man pages are kept here. |

/usr/local | /usr/local 及其子目录用于安装本地机器上的软件和其他文件。这实际上意味着不是官方发行版的软件(通常放在 /usr/bin 中)放在这里。当您找到有趣的程序要安装到系统上时,它们应该安装在其中一个 /usr/local 目录中。最常选择的目录是 /usr/local/bin。/usr/local and its subdirectories are used for the installation of software and other files for use on the local machine. What this really means is that software that is not part of the official distribution (which usually goes in /usr/bin) goes here. When you find interesting programs to install on your system, they should be installed in one of the /usr/local directories. Most often, the directory of choice is /usr/local/bin. |

/var | /var 目录包含随系统运行而发生变化的文件。包括:/var/log 包含日志文件的目录。这些文件在系统运行时更新。定期查看此目录中的文件是监视系统健康状况的一个好办法。/var/spool 此目录用于保存排队等待某些进程处理的文件,例如邮件和打印作业。当用户的邮件首次到达本地系统时(假设它具有本地邮件,在现代非邮件服务器的计算机上很少见),消息首先存储在 /var/spool/mail 中。The /var directory contains files that change as the system is running. This includes:/var/logDirectory that contains log files. These are updated as the system runs. It’s a good idea to view the files in this directory from time to time, to monitor the health of your system./var/spoolThis directory is used to hold files that are queued for some process, such as mail messages and print jobs. When a user’s mail first arrives on the local system (assuming it has local mail, a rare occurrence on modern machines that are not mail servers), the messages are first stored in /var/spool/mail |

/lib | 共享库(类似于其他操作系统中的 DLL)存放在这里。 The shared libraries (similar to DLLs in that other operating system) are kept here. |

/home | /home 是用户存储个人工作的地方。通常情况下,这是用户唯一被允许写入文件的地方。这使得事情保持整洁 :-)/home is where users keep their personal work. In general, this is the only place users are allowed to write files. This keeps things nice and clean :-) |

/root | 超级用户的主目录。 This is the superuser’s home directory. |

/tmp | /tmp 是程序可以写入其临时文件的目录。/tmp is a directory in which programs can write their temporary files. |

/dev | /dev 目录是一个特殊目录,因为它并不真正包含通常意义上的文件。相反,它包含系统可用的设备。在 Linux(类似于 Unix)中,设备被视为文件。您可以像处理文件一样读取和写入设备。例如 /dev/fd0 是第一个软盘驱动器,/dev/sda 是第一个硬盘驱动器。内核理解的所有设备都在这里表示。The /dev directory is a special directory, since it does not really contain files in the usual sense. Rather, it contains devices that are available to the system. In Linux (like Unix), devices are treated like files. You can read and write devices as though they were files. For example /dev/fd0 is the first floppy disk drive, /dev/sda is the first hard drive. All the devices that the kernel understands are represented here. |

/proc | /proc 目录也是特殊的。这个目录不包含文件。事实上,这个目录根本不存在。它完全是虚拟的。/proc 目录包含了对内核本身的小窥视孔。这个目录中有一组编号的条目,对应着系统上运行的所有进程。此外,还有许多命名条目允许访问当前系统配置。可以查看其中的许多条目。尝试查看 /proc/cpuinfo。这个条目将告诉您内核对系统的 CPU 的看法。The /proc directory is also special. This directory does not contain files. In fact, this directory does not really exist at all. It is entirely virtual. The /proc directory contains little peep holes into the kernel itself. There are a group of numbered entries in this directory that correspond to all the processes running on the system. In addition, there are a number of named entries that permit access to the current configuration of the system. Many of these entries can be viewed. Try viewing /proc/cpuinfo. This entry will tell you what the kernel thinks of the system’s CPU. |

/media | 最后,我们来到 /media,这是一个普通的目录,以特殊的方式使用。/media 目录用于 挂载点。就像我们在第二课中学到的那样,不同的物理存储设备(如硬盘驱动器)附加到文件系统树的不同位置。将设备附加到树上的过程称为 挂载。要使设备可用,必须首先将其挂载。当您的系统启动时,它会读取 /etc/fstab 文件中的挂载指令列表,该文件描述了哪个设备挂载在目录树的哪个挂载点上。这处理了硬盘驱动器,但我们还可以拥有被认为是临时的设备,如光盘和 USB 存储设备。由于这些是可移动的,它们不会一直保持挂载状态。/media 目录由现代面向桌面的 Linux 发行版中的自动设备挂载机制使用。要查看使用的设备和挂载点,请键入 mount。Finally, we come to /media, a normal directory which is used in a special way. The /media directory is used for mount points. As we learned in the second lesson, the different physical storage devices (like hard disk drives) are attached to the file system tree in various places. This process of attaching a device to the tree is called mounting. For a device to be available, it must first be mounted. When your system boots, it reads a list of mounting instructions in the /etc/fstab file, which describes which device is mounted at which mount point in the directory tree. This takes care of the hard drives, but we may also have devices that are considered temporary, such as optical disks and USB storage devices. Since these are removable, they do not stay mounted all the time. The /media directory is used by the automatic device mounting mechanisms found in modern desktop oriented Linux distributions. To see what devices and mount points are used, type mount. |

A weird kind of file…

一种奇怪的文件…

During your tour, you probably noticed a strange kind of directory entry, particularly in the

/libdirectory. When listed withls -l, you might have seen something like this: 在你的导览中,你可能注意到了一种奇怪的目录条目,尤其是在

/lib目录中。当用ls -l列出时,你可能会看到像这样的东西:lrwxrwxrwx 25 Jul 3 16:42 System.map -> /boot/System.map-4.0.36-3 -rw-r--r-- 105911 Oct 13 2018 System.map-4.0.36-0.7 -rw-r--r-- 105935 Dec 29 2018 System.map-4.0.36-3 -rw-r--r-- 181986 Dec 11 2019 initrd-4.0.36-0.7.img -rw-r--r-- 182001 Dec 11 2019 initrd-4.0.36.img lrwxrwxrwx 26 Jul 3 16:42 module-info -> /boot/module-info-4.0.36-3 -rw-r--r-- 11773 Oct 13 2018 module-info-4.0.36-0.7 -rw-r--r-- 11773 Dec 29 2018 module-info-4.0.36-3 lrwxrwxrwx 16 Dec 11 2019 vmlinuz -> vmlinuz-4.0.36-3 -rw-r--r-- 454325 Oct 13 2018 vmlinuz-4.0.36-0.7 -rw-r--r-- 454434 Dec 29 2018 vmlinuz-4.0.36-3Notice the files,

System.map, module-infoandvmlinuz. See the strange notation after the file names?注意文件

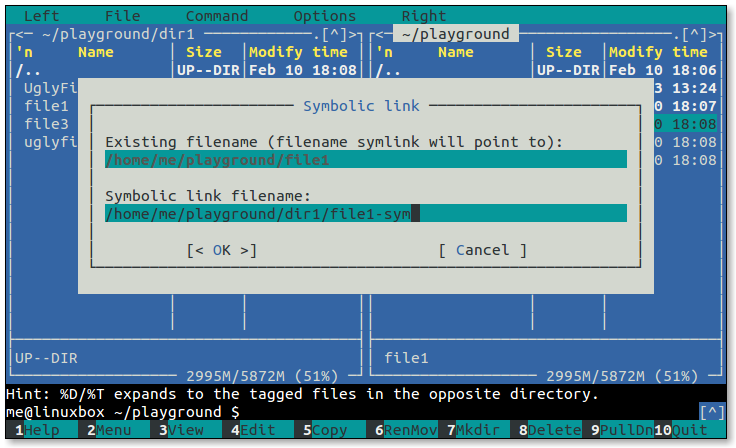

System.map、module-info和vmlinuz。看到文件名后面的奇怪符号了吗?Files such as this are called symbolic links. Symbolic links are a special type of file that points to another file. With symbolic links, it is possible for a single file to have multiple names. Here’s how it works: Whenever the system is given a file name that is a symbolic link, it transparently maps it to the file it is pointing to.

此类文件称为 符号链接。符号链接是一种特殊类型的文件,它指向另一个文件。通过符号链接,一个文件可以有多个名称。它的工作原理如下:每当系统获得一个符号链接的文件名时,它会自动地将其映射到指向的文件。

Just what is this good for? This is a very handy feature. Let’s consider the directory listing above (which is the

/bootdirectory of an old system). This system has had multiple versions of the Linux kernel installed. We can see this from the filesvmlinuz-4.0.36-0.7andvmlinuz-4.0.36-3. These file names suggest that both version 4.0.36-0.7 and 4.0.36-3 are installed. Because the file names contain the version it is easy to see the differences in the directory listing. However, this would be confusing to programs that rely on a fixed name for the kernel file. These programs might expect the kernel to simply be called"vmlinuz". Here is where the beauty of the symbolic link comes in. By creating a symbolic link calledvmlinuzthat points tovmlinuz-4.0.36-3, we have solved the problem. 这有什么用呢?这是一个非常方便的功能。让我们来看看上面的目录列表(这是一个旧系统的

/boot目录)。该系统安装了多个版本的 Linux 内核,我们可以从文件vmlinuz-4.0.36-0.7和vmlinuz-4.0.36-3看出这一点。这些文件名表明安装了版本 4.0.36-0.7 和 4.0.36-3。由于文件名包含版本信息,因此很容易在目录列表中看到它们之间的差异。然而,对于依赖于固定名称的内核文件的程序来说,这可能会造成困惑。这些程序可能期望内核简单地称为"vmlinuz"。这就是符号链接的优点所在。通过创建一个名为vmlinuz的符号链接,它指向vmlinuz-4.0.36-3,我们解决了这个问题。To create symbolic links, we use the

lncommand. 要创建符号链接,我们使用

ln命令。

进一步阅读 Further Reading

- To learn more about the organization of the Linux filesystem, consult the Filesystem Hierarchy Standard

- 要了解更多关于 Linux 文件系统组织的信息,请参阅文件系统层次结构标准(Filesystem Hierarchy Standard)。

2.5 - 操作文件

操作文件 Manipulating Files

https://linuxcommand.org/lc3_lts0050.php

This lesson will introduce the following commands:

本课程将介绍以下命令:

cp- copy files and directoriesmv- move or rename files and directoriesrm- remove files and directoriesmkdir- create directoriescp- 复制文件和目录mv- 移动或重命名文件和目录rm- 删除文件和目录mkdir- 创建目录

These four commands are among the most frequently used Linux commands. They are the basic commands for manipulating both files and directories.

这四个命令是最常用的 Linux 命令之一。它们是用于操作文件和目录的基本命令。

Now, to be frank, some of the tasks performed by these commands are more easily done with a graphical file manager. With a file manager, you can drag and drop a file from one directory to another, cut and paste files, delete files, etc. So why use these old command line programs?

说实话,其中一些命令执行的任务可以更容易地通过图形文件管理器完成。使用文件管理器,您可以将文件从一个目录拖放到另一个目录,剪切和粘贴文件,删除文件等等。那么为什么要使用这些老旧的命令行程序呢?

The answer is power and flexibility. While it is easy to perform simple file manipulations with a graphical file manager, complicated tasks can be easier with the command line programs. For example, how would you copy all the HTML files from one directory to another, but only copy files that did not exist in the destination directory or were newer than the versions in the destination directory? Pretty hard with with a file manager. Pretty easy with the command line:

答案是权力和灵活性。虽然使用图形文件管理器可以执行简单的文件操作,但使用命令行程序可以更容易地处理复杂的任务。例如,如何将一个目录中的所有 HTML 文件复制到另一个目录,但只复制目标目录中不存在或比目标目录中的版本更新的文件?使用文件管理器可能会很困难,但使用命令行却很简单:

| |

通配符 Wildcards

Before we begin with our commands, we’ll first look at a shell feature that makes these commands so powerful. Since the shell uses filenames so much, it provides special characters to help you rapidly specify groups of filenames. These special characters are called wildcards. Wildcards allow you to select filenames based on patterns of characters. The table below lists the wildcards and what they select:

在开始使用这些命令之前,我们首先来看一下使这些命令如此强大的一个 shell 特性。由于 shell 经常使用文件名,它提供了一些特殊字符,可以帮助您快速指定文件名的组。这些特殊字符称为 通配符。通配符允许您根据字符模式选择文件名。下表列出了通配符及其选择的内容:

| 通配符 Wildcard | 含义 Meaning |

|---|---|

| * | 匹配任意字符 Matches any characters |

| ? | 匹配任意单个字符 Matches any single character |

| [characters] | 匹配字符集中的任意字符。字符集也可以表示为 POSIX 字符类,如下所示:POSIX 字符类 [:alnum:] 字母数字字符 [:alpha:] 字母字符 [:digit:] 数字字符 [:upper:] 大写字母字符 [:lower:] 小写字母字符 Matches any character that is a member of the set characters. The set of characters may also be expressed as a POSIX character class such as one of the following:POSIX Character Classes [:alnum:] Alphanumeric characters [:alpha:]Alphabetic characters [:digit:] Numerals [:upper:] Uppercase alphabetic characters [:lower:] Lowercase alphabetic characters |

| [!characters] | 匹配不属于字符集中的任意字符 Matches any character that is not a member of the set characters |

Using wildcards, it is possible to construct very sophisticated selection criteria for filenames. Here are some examples of patterns and what they match:

使用通配符,可以构造非常复杂的文件名选择条件。以下是一些模式示例及其匹配的内容:

| 模式 Pattern | 匹配 Matches |

|---|---|

* | 所有文件名 All filenames |

g* | 以字母 “g” 开头的所有文件名 All filenames that begin with the character “g” |

b*.txt | 以字母 “b” 开头且以 “.txt” 结尾的所有文件名 All filenames that begin with the character “b” and end with the characters “.txt” |

Data??? | 以 “Data” 开头后面紧跟着恰好 3 个字符的文件名 Any filename that begins with the characters “Data” followed by exactly 3 more characters |

[abc]* | 以 “a”、“b” 或 “c” 开头后面跟着任意其他字符的文件名 Any filename that begins with “a” or “b” or “c” followed by any other characters |

[[:upper:]]* | 以大写字母开头的任何文件名。这是字符类的一个示例。 Any filename that begins with an uppercase letter. This is an example of a character class. |

BACKUP.[[:digit:]][[:digit:]] | 另一个字符类的示例。此模式匹配以 “BACKUP.” 开头后面紧跟着恰好两个数字的文件名。 Another example of character classes. This pattern matches any filename that begins with the characters “BACKUP.” followed by exactly two numerals. |

*[![:lower:]] | 不以小写字母结尾的任何文件名 Any filename that does not end with a lowercase letter. |

We can use wildcards with any command that accepts filename arguments.

我们可以在接受文件名参数的任何命令中使用通配符。

cp

The cp program copies files and directories. In its simplest form, it copies a single file:

cp 程序用于复制文件和目录。在最简单的形式中,它可以复制单个文件:

| |

It can also be used to copy multiple files (and/or directories) to a different directory:

它还可以用于将多个文件(和/或目录)复制到不同的目录:

| |

A note on notation: … signifies that an item can be repeated one or more times.

关于符号的说明: … 表示一个项目可以重复一次或多次。

Other useful examples of cp and its options include:

cp 和其选项的其他有用示例包括:

| 命令 Command | 结果 Results |

|---|---|

cp file1 file2 | 将 file1 的内容复制到 file2。如果 file2 不存在,则创建它;否则,file2 将被 file1 的内容静默覆盖。 Copies the contents of file1 into file2. If file2 does not exist, it is created; otherwise, file2 is silently overwritten with the contents of file1. |

cp -i file1 file2 | 与上面相同,然而,由于指定了 “-i”(交互式)选项,如果 file2 存在,则在覆盖它之前会提示用户。 Like above however, since the “-i” (interactive) option is specified, if file2 exists, the user is prompted before it is overwritten with the contents of file1. |

cp file1 dir1 | 将 file1 的内容(命名为 file1)复制到目录 dir1 内。 Copy the contents of file1 (into a file named file1) inside of directory dir1. |

cp -R dir1 dir2 | 复制目录 dir1 的内容。如果目录 dir2 不存在,则创建它。否则,它在目录 dir2 中创建一个名为 dir1 的目录。 Copy the contents of the directory dir1. If directory dir2 does not exist, it is created. Otherwise, it creates a directory named dir1 within directory dir2. |

mv

The mv command moves or renames files and directories depending on how it is used. It will either move one or more files to a different directory, or it will rename a file or directory. To rename a file, it is used like this:

mv 命令根据使用方式的不同,可以移动或重命名文件和目录。要重命名文件,可以使用以下方式:

| |

To move files (and/or directories) to a different directory:

要将文件(和/或目录)移动到另一个目录:

| |

Examples of mv and its options include:

mv 和其选项的示例包括:

| 命令 Command | 结果 Results |

|---|---|

mv file1 file2 | 如果 file2 不存在,则将 file1 重命名为 file2。如果 file2存在,则它的内容将被 file1 的内容静默替换。 If file2 does not exist, then file1 is renamed file2. If file2 exists, its contents are silently replaced with the contents of file1. |

mv -i file1 file2 | 与上面相同,然而,由于指定了 “-i”(交互式)选项,如果 file2 存在,则在覆盖它之前会提示用户。 Like above however, since the “-i” (interactive) option is specified, if file2 exists, the user is prompted before it is overwritten with the contents of file1. |

mv file1 file2 dir1 | 将文件 file1 和 file2 移动到目录 dir1。如果 dir1 不存在,mv 将报错退出。The files file1 and file2 are moved to directory dir1. If dir1 does not exist, mv will exit with an error. |

mv dir1 dir2 | 如果 dir2 不存在,则将 dir1 重命名为 dir2。如果 dir2 存在,则将目录 dir1 移动到目录 dir2 中。 If dir2 does not exist, then dir1 is renamed dir2. If dir2 exists, the directory dir1 is moved within directory dir2. |

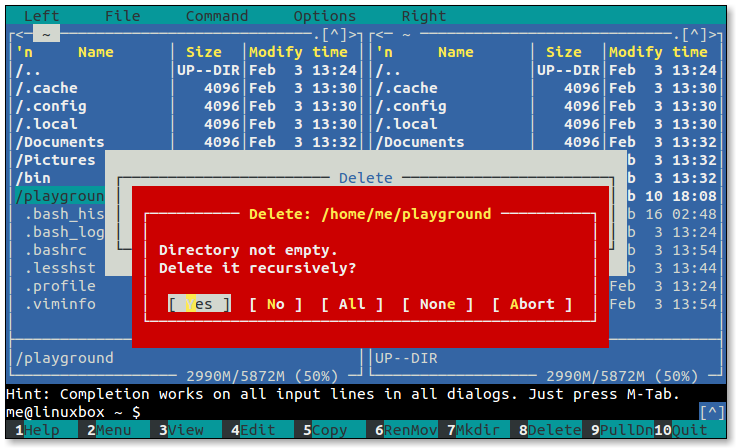

rm

The rm command removes (deletes) files and directories.

rm 命令用于删除文件和目录。

| |

Using the recursive option (-r), rm can also be used to delete directories:

使用递归选项(-r),rm 还可以用于删除目录:

| |

Examples of rm and its options include:

rm 和其选项的示例包括:

| 命令 Command | 结果 Results |

|---|---|

rm file1 file2 | 删除 file1 和 file2。 Delete file1 and file2. |

rm -i file1 file2 | 与上面相同,然而,由于指定了 “-i”(交互式)选项,每个文件被删除之前会提示用户。 Like above however, since the “-i” (interactive) option is specified, the user is prompted before each file is deleted. |

rm -r dir1 dir2 | 删除目录 dir1 和 dir2 及其所有内容。 Directories dir1 and dir2 are deleted along with all of their contents. |

在使用 rm 时要小心! Be careful with rm!

Linux does not have an undelete command. Once you delete something with rm, it’s gone. You can inflict terrific damage on your system with rm if you are not careful, particularly with wildcards.

Linux 没有恢复命令。一旦使用 rm 删除了某个东西,它就消失了。如果不小心使用 rm,特别是使用通配符,可能会对系统造成严重的破坏。

*Before you use rm with wildcards, try this helpful trick:* construct your command using ls instead. By doing this, you can see the effect of your wildcards before you delete files. After you have tested your command with ls, recall the command with the up-arrow key and then substitute rm for ls in the command.

在使用带有通配符的 rm 之前,试试这个有用的技巧: 使用 ls 构建命令。这样,您可以在删除文件之前查看通配符的效果。在使用 ls 测试过命令后,可以使用上箭头键召回命令,然后在命令中将 ls 替换为 rm。

mkdir

The mkdir command is used to create directories. To use it, you simply type:

mkdir 命令用于创建目录。只需键入:

| |

使用通配符的命令 Using Commands with Wildcards

Since the commands we have covered here accept multiple file and directories names as arguments, you can use wildcards to specify them. Here are a few examples:

由于我们在这里介绍的命令接受多个文件和目录名称作为参数,您可以使用通配符来指定它们。以下是一些示例:

| 命令 Command | 结果 Results |

|---|---|

cp *.txt text_files | 将当前工作目录中以 “.txt” 结尾的所有文件复制到名为 “text_files” 的现有目录中。 Copy all files in the current working directory with names ending with the characters “.txt” to an existing directory named text_files. |

mv dir1 ../*.bak dir2 | 将子目录 “dir1” 和当前工作目录的父目录中以 “.bak” 结尾的所有文件移动到名为 “dir2” 的现有目录中。 Move the subdirectory dir1 and all the files ending in “.bak” in the current working directory’s parent directory to an existing directory named dir2. |

rm *~ | 删除当前工作目录中以字符 “~” 结尾的所有文件。某些应用程序使用此命名方案创建备份文件。使用此命令将清理目录中的备份文件。 Delete all files in the current working directory that end with the character “~”. Some applications create backup files using this naming scheme. Using this command will clean them out of a directory. |

进一步阅读 Further Reading

- Chapter 4 of The Linux Command Line covers this topic in more detail

- Linux 命令行第 4 章对这个主题进行了更详细的讨论。

2.6 - 使用命令

使用命令 - Working with Commands

https://linuxcommand.org/lc3_lts0060.php

Up until now, we have seen a number of commands and their mysterious options and arguments. In this lesson, we will try to remove some of that mystery. We will introduce the following commands.

到目前为止,我们已经看到了许多命令及其神秘的选项和参数。在本课程中,我们将尝试消除其中的一些神秘感。我们将介绍以下命令。

type- Display information about command typewhich- Locate a commandhelp- Display reference page for shell builtinman- Display an on-line command referencetype- 显示命令类型的信息which- 定位一个命令help- 显示内置shell命令的参考页面man- 显示在线命令参考

什么是"命令"? What are “Commands?”

Commands can be one of 4 different kinds:

命令可以是以下4种不同类型之一:

- An executable program like all those files we saw in /usr/bin. Within this category, programs can be compiled binaries such as programs written in C and C++, or programs written in scripting languages such as the shell, Perl, Python, Ruby, etc.

- 可执行程序,如我们在 /usr/bin 中看到的所有文件。在此类别中,程序可以是编译的二进制文件,例如用 C 和 C++ 编写的程序,或者是用脚本语言(如Shell、Perl、Python、Ruby等)编写的程序。

- A command built into the shell itself. bash provides a number of commands internally called shell builtins. The

cdcommand, for example, is a shell builtin. - 内置于shell本身的命令。bash 提供了许多称为shell内置命令的内部命令。例如,

cd命令就是一个内置命令。 - A shell function. These are miniature shell scripts incorporated into the environment. We will cover configuring the environment and writing shell functions in later lessons, but for now, just be aware that they exist.

- shell函数。这些是嵌入到环境中的小型shell脚本。我们将在后面的课程中介绍配置环境和编写shell函数,但现在只需要知道它们的存在即可。

- An alias. Commands that we can define ourselves, built from other commands. This will be covered in a later lesson.

- 别名。我们可以自定义的由其他命令构建的命令。这将在后面的课程中介绍。

识别命令 Identifying Commands

It is often useful to know exactly which of the four kinds of commands is being used and Linux provides a couple of ways to find out.

通常,了解正在使用的四种命令类型中的哪一种非常有用,Linux 提供了几种方法来找到答案。

type

The type command is a shell builtin that displays the kind of command the shell will execute, given a particular command name. It works like this:

type 命令是一个内置于shell的命令,它显示给定命令名称的命令类型。它的使用方法如下:

| |

where “command” is the name of the command we want to examine. Here are some examples:

其中 “command” 是我们想要检查的命令的名称。下面是一些示例:

| |

Here we see the results for three different commands. Notice that the one for ls and how the ls command is actually an alias for the ls command with the “– color=auto” option added. Now we know why the output from ls is displayed in color!

这里我们看到了三个不同命令的结果。注意 ls 命令的结果,ls 实际上是 ls 命令的一个别名,并添加了 --color=auto 选项。现在我们知道为什么 ls 命令的输出显示为彩色了!

which

Sometimes there is more than one version of an executable program installed on a system. While this is not very common on desktop systems, it’s not unusual on large servers. To determine the exact location of a given executable, the which command is used:

有时在系统上安装了一个可执行程序的多个版本。尽管在桌面系统上这种情况并不常见,但在大型服务器上并不罕见。为了确定给定可执行程序的确切位置,可以使用 which 命令:

| |

which only works for executable programs, not builtins nor aliases that are substitutes for actual executable programs.

which 仅适用于可执行程序,而不适用于内置命令或替代实际可执行程序的别名。

获取命令文档 Getting Command Documentation

With this knowledge of what a command is, we can now search for the documentation available for each kind of command.

有了对命令的了解,我们现在可以搜索每种类型命令可用的文档。

help

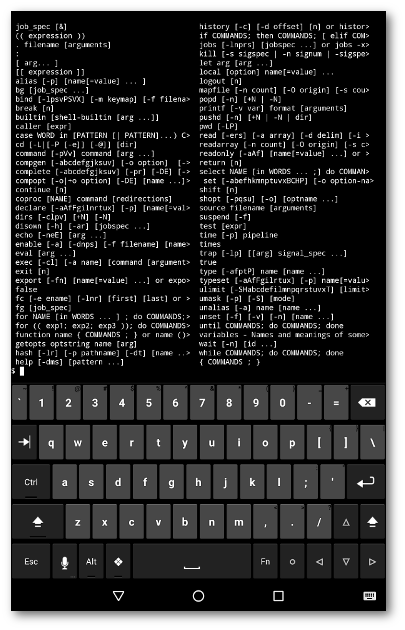

bash has a built-in help facility available for each of the shell builtins. To use it, type “help” followed by the name of the shell builtin. Optionally, we can add the -m option to change the format of the output. For example:

bash 为每个shell内置命令提供了一个内置的帮助功能。要使用它,键入“help”,然后是shell内置命令的名称。我们还可以添加 -m 选项以更改输出的格式。例如:

| |

A note on notation: When square brackets appear in the description of a command’s syntax, they indicate optional items. A vertical bar character indicates mutually exclusive items. In the case of the cd command above:

关于符号的说明: 当命令语法的描述中出现方括号时,表示这些是可选项。竖线字符表示互斥的选项。对于上面的 cd 命令:

| |

This notation says that the command cd may be followed optionally by either a “-L” or a “-P” and further, optionally followed by the argument “dir”.

这个符号说明表示 cd 命令后面可以选择跟着“-L”或者“-P”,然后再可选地跟着参数“dir”。

--help

Many executable programs support a “--help” option that displays a description of the command’s supported syntax and options. For example:

许多可执行程序支持“--help”选项,它会显示命令的支持语法和选项的描述。例如:

| |

Some programs don’t support the “–help” option, but try it anyway. Often it results in an error message that will reveal similar usage information.

有些程序不支持“--help”选项,但还是可以尝试一下。通常会显示出一个错误消息,其中包含类似的使用信息。

man

Most executable programs intended for command line use provide a formal piece of documentation called a manual or man page. A special paging program called man is used to view them. It is used like this:

大多数用于命令行的可执行程序都提供了一份正式的文档,称为手册或man页。用于查看它们的特殊分页程序称为 man。使用方法如下:

| |

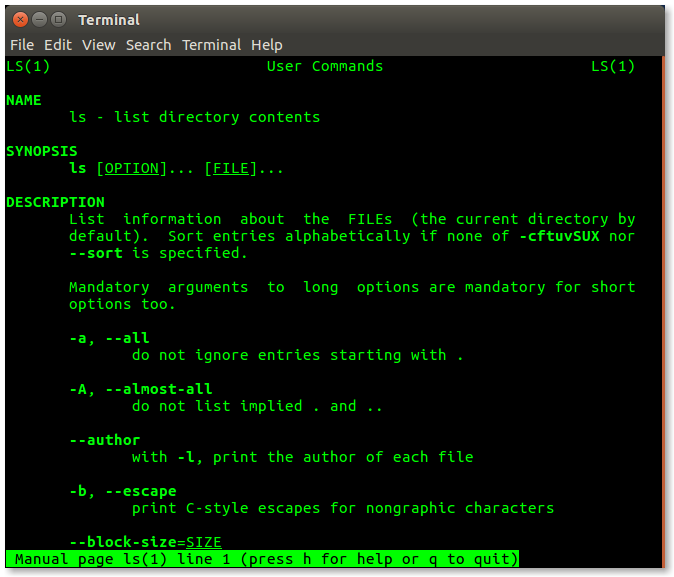

where “program” is the name of the command to view. Man pages vary somewhat in format but generally contain a title, a synopsis of the command’s syntax, a description of the command’s purpose, and a listing and description of each of the command’s options. Man pages, however, do not usually include examples, and are intended as a reference, not a tutorial. Let’s try viewing the man page for the ls command:

其中“program”是要查看的命令的名称。Man页的格式有所不同,但通常包含标题、命令语法的概述、命令目的的描述以及每个命令选项的列表和描述。不过,Man页通常不包含示例,并且旨在作为参考而不是教程。让我们尝试查看 ls 命令的 man 页:

| |

On most Linux systems, man uses less to display the manual page, so all of the familiar less commands work while displaying the page.

在大多数Linux系统上,man 使用 less 来显示手册页,因此在显示页面时可以使用所有熟悉的 less 命令。

README和其他文档文件 - README and Other Documentation Files

Many software packages installed on your system have documentation files residing in the /usr/share/doc directory. Most of these are stored in plain text format and can be viewed with less. Some of the files are in HTML format and can be viewed with a web browser. We may encounter some files ending with a “.gz” extension. This indicates that they have been compressed with the gzip compression program. The gzip package includes a special version of less called zless that will display the contents of gzip-compressed text files.

安装在系统上的许多软件包在 /usr/share/doc 目录中有文档文件。其中大多数以纯文本格式存储,并可以使用 less 查看。其中一些文件是HTML格式的,可以使用Web浏览器查看。可能会遇到一些以“.gz”扩展名结尾的文件。这表示它们已使用gzip压缩程序进行了压缩。gzip软件包包含一个名为zless的特殊版本的less,可以显示gzip压缩的文本文件的内容。

2.7 - 输入/输出重定向

输入/输出重定向 - I/O Redirection

https://linuxcommand.org/lc3_lts0070.php

In this lesson, we will explore a powerful feature used by command line programs called input/output redirection. As we have seen, many commands such as ls print their output on the display. This does not have to be the case, however. By using some special notations we can redirect the output of many commands to files, devices, and even to the input of other commands.

在这个课程中,我们将探索命令行程序中使用的一项强大功能,称为输入/输出重定向。正如我们所见,许多命令(如 ls)将它们的输出打印到显示器上。但是,情况并非总是如此。通过使用一些特殊的符号,我们可以将许多命令的输出重定向到文件、设备,甚至其他命令的输入。

标准输出 Standard Output

Most command line programs that display their results do so by sending their results to a facility called standard output. By default, standard output directs its contents to the display. To redirect standard output to a file, the “>” character is used like this:

大多数命令行程序将它们的结果显示出来是通过将结果发送到一个称为标准输出的设备。默认情况下,标准输出将其内容定向到显示器上。要将标准输出重定向到文件,可以使用 “>” 符号,像这样:

| |

In this example, the ls command is executed and the results are written in a file named file_list.txt. Since the output of ls was redirected to the file, no results appear on the display.

在这个例子中,执行了 ls 命令,并将结果写入名为 file_list.txt 的文件中。由于 ls 的输出被重定向到文件,所以在显示器上没有显示任何结果。

Each time the command above is repeated, file_list.txt is overwritten from the beginning with the output of the command ls. To have the new results appended to the file instead, we use “>>” like this:

每次重复执行上述命令时,file_list.txt 将被从头开始覆盖为 ls 命令的输出。如果要将新结果追加到文件而不是覆盖,可以使用 “>>",像这样:

| |

When the results are appended, the new results are added to the end of the file, thus making the file longer each time the command is repeated. If the file does not exist when we attempt to append the redirected output, the file will be created.

当结果被追加时,新的结果会添加到文件的末尾,从而使得文件每次重复执行命令时都会变长。如果在尝试追加重定向输出时文件不存在,文件将会被创建。

标准输入 Standard Input

Many commands can accept input from a facility called standard input. By default, standard input gets its contents from the keyboard, but like standard output, it can be redirected. To redirect standard input from a file instead of the keyboard, the “<” character is used like this:

许多命令可以从一个称为标准输入的设备接受输入。默认情况下,标准输入从键盘获取内容,但是像标准输出一样,它也可以被重定向。要将标准输入从文件而不是键盘重定向,可以使用 “<” 符号,像这样:

| |

In the example above, we used the sort command to process the contents of file_list.txt. The results are output on the display since the standard output was not redirected. We could redirect standard output to another file like this:

在上面的例子中,我们使用了 sort 命令来处理 file_list.txt 的内容。由于没有重定向标准输出,结果会输出到显示器上。我们可以像这样将标准输出重定向到另一个文件:

| |

As we can see, a command can have both its input and output redirected. Be aware that the order of the redirection does not matter. The only requirement is that the redirection operators (the “<” and “>”) must appear after the other options and arguments in the command.

如我们所见,一个命令可以同时重定向其输入和输出。需要注意的是,重定向的顺序不重要。唯一的要求是重定向操作符("<” 和 “>")必须出现在命令中其他选项和参数之后。

管道 Pipelines

The most useful and powerful thing we can do with I/O redirection is to connect multiple commands together to form what are called pipelines. With pipelines, the standard output of one command is fed into the standard input of another. Here is a very useful example:

使用I/O重定向最有用和强大的功能之一是将多个命令连接在一起,形成所谓的管道。通过管道,一个命令的标准输出被发送到另一个命令的标准输入。下面是一个非常有用的例子:

| |

In this example, the output of the ls command is fed into less. By using this "| less" trick, we can make any command have scrolling output.

在这个例子中,ls 命令的输出被发送到 less 命令。通过使用这个 "| less" 的技巧,我们可以使任何命令都具有滚动输出。

By connecting commands together, we can accomplish amazing feats. Here are some examples to try:

通过将命令连接在一起,我们可以完成令人惊奇的任务。以下是一些可以尝试的例子:

| 命令 Command | What it does |

|---|---|

ls -lt | head | 显示当前目录中最新的10个文件。 Displays the 10 newest files in the current directory. |

du | sort -nr | 显示一个目录列表,以及它们所占用的空间大小,从最大到最小排序。 Displays a list of directories and how much space they consume, sorted from the largest to the smallest. |

find . -type f -print | wc -l | 显示当前工作目录及其所有子目录中的文件总数。 Displays the total number of files in the current working directory and all of its subdirectories. |

过滤器 Filters

One kind of program frequently used in pipelines is called a filter. Filters take standard input and perform an operation upon it and send the results to standard output. In this way, they can be combined to process information in powerful ways. Here are some of the common programs that can act as filters:

在管道中经常使用的一种程序称为过滤器。过滤器接受标准输入并对其进行操作,然后将结果发送到标准输出。通过这种方式,它们可以结合在一起以强大的方式处理信息。以下是一些常见的可以作为过滤器的程序:

| 程序 Program | What it does |

|---|---|

sort | 对标准输入进行排序,然后将排序结果输出到标准输出。 Sorts standard input then outputs the sorted result on standard output. |

uniq | 对排序后的标准输入数据流进行操作,删除重复的数据行(确保每行都是唯一的)。 Given a sorted stream of data from standard input, it removes duplicate lines of data (i.e., it makes sure that every line is unique). |

grep | 检查从标准输入接收到的每一行数据,并输出包含指定字符模式的每一行。 Examines each line of data it receives from standard input and outputs every line that contains a specified pattern of characters. |

fmt | 从标准输入读取文本,然后在标准输出上输出格式化后的文本。 Reads text from standard input, then outputs formatted text on standard output. |

pr | 从标准输入接收文本输入,将数据分页并准备好打印的页眉、页脚和分页符。 Takes text input from standard input and splits the data into pages with page breaks, headers and footers in preparation for printing. |

head | 输出其输入的前几行。可用于获取文件的标题。 Outputs the first few lines of its input. Useful for getting the header of a file. |

tail | 输出其输入的最后几行。可用于获取日志文件中的最新条目等。 Outputs the last few lines of its input. Useful for things like getting the most recent entries from a log file. |

tr | 字符转换。可用于执行诸如大小写转换或更改行终止字符类型(例如,将DOS文本文件转换为Unix样式的文本文件)等任务。 Translates characters. Can be used to perform tasks such as upper/lowercase conversions or changing line termination characters from one type to another (for example, converting DOS text files into Unix style text files). |

sed | 流编辑器。可执行比tr更复杂的文本转换操作。Stream editor. Can perform more sophisticated text translations than tr. |

awk | 一种专为构建过滤器而设计的完整编程语言。非常强大。 An entire programming language designed for constructing filters. Extremely powerful. |

使用管道执行任务 Performing tasks with pipelines

Printing from the command line. Linux provides a program called

lprthat accepts standard input and sends it to the printer. It is often used with pipes and filters. Here are a couple of examples:从命令行打印。 Linux提供了一个名为

lpr的程序,可以接受标准输入并将其发送到打印机。它经常与管道和过滤器一起使用。以下是一些示例:1 2 3cat poorly_formatted_report.txt | fmt | pr | lpr cat unsorted_list_with_dupes.txt | sort | uniq | pr | lprIn the first example, we use

catto read the file and output it to standard output, which is piped into the standard input offmt. fmtformats the text into neat paragraphs and outputs it to standard output, which is piped into the standard input ofpr. prsplits the text neatly into pages and outputs it to standard output, which is piped into the standard input oflpr. lprtakes its standard input and sends it to the printer.在第一个示例中,我们使用

cat读取文件并将其输出到标准输出,然后通过管道将其传递给fmt的标准输入。fmt将文本格式化为整齐的段落,并将其输出到标准输出,然后通过管道将其传递给pr的标准输入。pr将文本整齐地分页,并将其输出到标准输出,然后通过管道将其传递给lpr的标准输入。lpr接受标准输入并将其发送到打印机。The second example starts with an unsorted list of data with duplicate entries. First,

catsends the list intosortwhich sorts it and feeds it intouniqwhich removes any duplicates. Nextprandlprare used to paginate and print the list.第二个示例以一个包含重复条目的未排序数据列表开始。首先,

cat将列表发送到sort,sort对其进行排序并将其传递给uniq,uniq删除任何重复项。然后使用pr和lpr进行分页和打印列表。Viewing the contents of tar files Often you will see software distributed as a gzipped tar file. This is a traditional Unix style tape archive file (created with

tar) that has been compressed withgzip. You can recognize these files by their traditional file extensions, “.tar.gz” or “.tgz”. You can use the following command to view the directory of such a file on a Linux system:查看tar文件的内容 经常会看到软件以gzipped tar文件的形式分发。这是一个传统的Unix风格的磁带归档文件(使用

tar创建),经过gzip压缩。你可以通过文件的传统扩展名”.tar.gz"或".tgz"来识别这些文件。你可以使用以下命令在Linux系统上查看此类文件的目录:1tar tzvf name_of_file.tar.gz | less

进一步阅读 Further Reading

- Chapter 6 of The Linux Command Line covers this topic in more detail.

- The Linux Command Line的第6章更详细地介绍了这个主题。

- Chapters 19 through 21 of The Linux Command Line provide an in-depth look at the text processing tools available in Linux.

- 《The Linux Command Line》的第19至21章深入介绍了Linux中可用的文本处理工具。

- To learn more about the AWK programming language, consider the AWK adventure.

- 如果想更多了解AWK编程语言,请参考AWK adventure。

2.8 - 扩展

扩展 Expansion

https://linuxcommand.org/lc3_lts0080.php

Each time we type a command line and press the enter key, bash performs several processes upon the text before it carries out our command. We have seen a couple of cases of how a simple character sequence, for example “*”, can have a lot of meaning to the shell. The process that makes this happen is called expansion. With expansion, we type something and it is expanded into something else before the shell acts upon it. To demonstrate what we mean by this, let’s take a look at the echo command. echo is a shell builtin that performs a very simple task. It prints out its text arguments on standard output:

每次我们在命令行中输入命令并按下回车键时,bash在执行我们的命令之前会对文本进行几个处理过程。我们已经看到了一些简单字符序列(例如"*")对于shell来说具有很多意义的情况。使这种情况发生的过程被称为扩展。通过扩展,我们在键入某些内容时,shell会在对其执行操作之前将其扩展为其他内容。为了演示我们所说的,让我们来看一下echo命令。echo是一个shell内置命令,执行一个非常简单的任务,即在标准输出上打印出其文本参数:

| |

That’s pretty straightforward. Any argument passed to echo gets displayed. Let’s try another example:

这很简单明了。任何传递给echo的参数都会被显示出来。让我们尝试另一个示例:

| |

So what just happened? Why didn’t echo print “*”? As we recall from our work with wildcards, the “*“character means match any characters in a filename, but what we didn’t see in our original discussion was how the shell does that. The simple answer is that the shell expands the “*” into something else (in this instance, the names of the files in the current working directory) before the echo command is executed. When the enter key is pressed, the shell automatically expands any qualifying characters on the command line before the command is carried out, so the echo command never saw the “*”, only its expanded result. Knowing this, we can see that echo behaved as expected.

发生了什么?为什么echo没有打印出”*"?从我们之前使用通配符的工作中,我们记得”*“字符表示匹配文件名中的任意字符,但是我们在原始讨论中没有看到shell是如何实现的。简单的答案是,shell在执行echo命令之前将”*“扩展为其他内容(在这种情况下是当前工作目录中文件的名称)。当按下回车键时,shell会自动在执行命令之前展开命令行上的任何限定字符,因此echo命令从未看到”*",只看到其扩展后的结果。了解这一点,我们可以看到echo的行为符合预期。

路径名扩展 Pathname Expansion

The mechanism by which wildcards work is called pathname expansion. If we try some of the techniques that we employed in our earlier lessons, we will see that they are really expansions. Given a home directory that looks like this:

通配符起作用的机制被称为路径名扩展。如果我们尝试一些我们之前在课程中使用的技术,我们会发现它们实际上是扩展。给定一个如下所示的主目录:

| |

we could carry out the following expansions:

我们可以进行以下扩展:

| |

and:

以及:

| |

or even:

甚至是:

| |

and looking beyond our home directory:

并且可以查看超出主目录的内容:

| |

波浪号扩展 Tilde Expansion

As we recall from our introduction to the cd command, the tilde character ("~") has a special meaning. When used at the beginning of a word, it expands into the name of the home directory of the named user, or if no user is named, the home directory of the current user:

正如我们在介绍cd命令时所记得的,波浪号("~")具有特殊的含义。当在单词的开头使用时,它会扩展为命名用户的主目录,如果没有指定用户,则扩展为当前用户的主目录:

| |

If user “foo” has an account, then:

如果用户"foo"有一个帐户,则:

| |

算术扩展 Arithmetic Expansion

The shell allows arithmetic to be performed by expansion. This allow us to use the shell prompt as a calculator:

Shell允许通过扩展执行算术运算。这使我们可以将Shell提示符用作计算器:

| |

Arithmetic expansion uses the form:

算术扩展使用以下形式:

| |

where expression is an arithmetic expression consisting of values and arithmetic operators.

其中,表达式是由值和算术运算符组成的算术表达式。

Arithmetic expansion only supports integers (whole numbers, no decimals), but can perform quite a number of different operations.

算术扩展仅支持整数(无小数),但可以执行许多不同的操作。

Spaces are not significant in arithmetic expressions and expressions may be nested. For example, to multiply five squared by three:

在算术表达式中,空格不重要,表达式可以嵌套。例如,要将五的平方乘以三:

| |

Single parentheses may be used to group multiple subexpressions. With this technique, we can rewrite the example above and get the same result using a single expansion instead of two:

可以使用单括号来分组多个子表达式。使用这种技术,我们可以重写上面的例子,并使用单个扩展而不是两个来获得相同的结果:

| |

Here is an example using the division and remainder operators. Notice the effect of integer division:

下面是一个使用除法和取余运算符的示例。注意整数除法的效果:

| |

大括号扩展 Brace Expansion

Perhaps the strangest expansion is called brace expansion. With it, we can create multiple text strings from a pattern containing braces. Here’s an example:

也许最奇怪的扩展被称为大括号扩展。使用它,我们可以从包含大括号的模式创建多个文本字符串。以下是一个示例:

| |

Patterns to be brace expanded may contain a leading portion called a preamble and a trailing portion called a postscript. The brace expression itself may contain either a comma-separated list of strings, or a range of integers or single characters. The pattern may not contain embedded whitespace. Here is an example using a range of integers:

要扩展的模式可以包含称为preamble的前导部分和称为postscript的尾随部分。大括号表达式本身可以包含逗号分隔的字符串列表,或者是整数或单个字符的范围。模式不得包含嵌入的空格。以下是使用整数范围的示例:

| |

A range of letters in reverse order:

以相反顺序的字母范围:

| |

Brace expansions may be nested:

大括号扩展可以嵌套:

| |

So what is this good for? The most common application is to make lists of files or directories to be created. For example, if we were a photographer and had a large collection of images we wanted to organize into years and months, the first thing we might do is create a series of directories named in numeric “Year-Month” format. This way, the directory names will sort in chronological order. we could type out a complete list of directories, but that’s a lot of work and it’s error-prone too. Instead, we could do this:

那么这有什么好处?最常见的应用是创建要创建的文件或目录的列表。例如,如果我们是摄影师,有很多图像要按年份和月份组织,我们可能首先要做的是创建以数字"年份-月份"格式命名的一系列目录。这样,目录名称将按照时间顺序排序。我们可以输入完整的目录列表,但那是很麻烦的工作,而且容易出错。相反,我们可以这样做:

| |

Pretty slick!

很漂亮!

参数扩展 Parameter Expansion

We’re only going to touch briefly on parameter expansion in this lesson, but we’ll be covering it more later. It’s a feature that is more useful in shell scripts than directly on the command line. Many of its capabilities have to do with the system’s ability to store small chunks of data and to give each chunk a name. Many such chunks, more properly called variables, are available for our examination. For example, the variable named “USER” contains our user name. To invoke parameter expansion and reveal the contents of USER we would do this:

在本课程中,我们只会简要涉及参数扩展,但我们将在后面对其进行更详细的介绍。这是一个在Shell脚本中比在命令行直接使用更有用的功能。它的许多功能与系统存储小数据块和为每个块命名有关。我们可以检查许多这样的块,更准确地称为变量。例如,名为"USER"的变量包含我们的用户名。要调用参数扩展并显示USER的内容,我们可以这样做:

| |

To see a list of available variables, try this:

要查看可用变量的列表,请尝试以下命令:

| |

With other types of expansion, if we mistype a pattern, the expansion will not take place and the echo command will simply display the mistyped pattern. With parameter expansion, if we misspell the name of a variable, the expansion will still take place, but will result in an empty string:

与其他类型的扩展不同,如果我们拼写错误的模式,扩展将不会发生,echo命令将只显示错误拼写的模式。但是,对于参数扩展,如果我们拼写变量名称错误,扩展仍将发生,但结果将为空字符串:

| |

命令替换 Command Substitution

Command substitution allows us to use the output of a command as an expansion:

命令替换 允许我们将命令的输出作为扩展使用:

| |

A clever one goes something like this:

一个巧妙的例子如下:

| |

Here we passed the results of which cp as an argument to the ls command, thereby getting the listing of of the cp program without having to know its full pathname. We are not limited to just simple commands. Entire pipelines can be used (only partial output shown):

在这里,我们将 which cp 的结果作为 ls 命令的参数传递,从而获取 cp 程序的列表,而无需知道其完整路径名。我们不仅仅限于简单的命令,还可以使用整个流水线(仅显示部分输出):

| |

In this example, the results of the pipeline became the argument list of the file command. There is an alternate syntax for command substitution in older shell programs which is also supported in bash. It uses back-quotes instead of the dollar sign and parentheses:

在此示例中,流水线的结果成为 file 命令的参数列表。在旧的 shell 程序中,还有一种替代的命令替换语法,也在 bash 中支持。它使用反引号而不是美元符号和括号:

| |

引号 Quoting

Now that we’ve seen how many ways the shell can perform expansions, it’s time to learn how we can control it. Take for example:

现在我们已经了解了 shell 执行扩展的多种方式,是时候学习如何控制它了。例如:

| |

or:

或者:

| |

In the first example, word-splitting by the shell removed extra whitespace from the echo command’s list of arguments. In the second example, parameter expansion substituted an empty string for the value of “$1” because it was an undefined variable. The shell provides a mechanism called quoting to selectively suppress unwanted expansions.

在第一个示例中,Shell 的词分割功能删除了 echo 命令参数列表中的额外空格。在第二个示例中,参数扩展将一个空字符串替换为"$1“的值,因为它是一个未定义的变量。Shell 提供了一种称为引号的机制,可以选择性地抑制不需要的扩展。

双引号 Double Quotes

The first type of quoting we will look at is double quotes. If we place text inside double quotes, all the special characters used by the shell lose their special meaning and are treated as ordinary characters. The exceptions are “$”, “\” (backslash), and “`” (back- quote). This means that word-splitting, pathname expansion, tilde expansion, and brace expansion are suppressed, but parameter expansion, arithmetic expansion, and command substitution are still carried out. Using double quotes, we can cope with filenames containing embedded spaces. Imagine we were the unfortunate victim of a file called two words.txt. If we tried to use this on the command line, word-splitting would cause this to be treated as two separate arguments rather than the desired single argument:

我们将首先看一下的引号类型是双引号。如果我们将文本放在双引号内,Shell 中使用的所有特殊字符都失去了它们的特殊含义,被视为普通字符。例外的是 “$"、"\"(反斜杠)和 “`"(反引号)。这意味着词分割、路径名扩展、波浪线扩展和大括号扩展被抑制,但参数扩展、算术扩展和命令替换仍然会进行。使用双引号,我们可以处理包含空格的文件名。假设我们是一个不幸的two words.txt文件的受害者。如果我们尝试在命令行上使用它,词分割将使其被视为两个独立的参数,而不是所需的单个参数:

| |

By using double quotes, we can stop the word-splitting and get the desired result; further, we can even repair the damage:

通过使用双引号,我们可以阻止词分割并获得所需的结果;此外,我们甚至可以修复损坏:

| |

There! Now we don’t have to keep typing those pesky double quotes. Remember, parameter expansion, arithmetic expansion, and command substitution still take place within double quotes:

现在!我们不再需要不断输入那些烦人的双引号。请记住,参数扩展、算术扩展和命令替换仍然会在双引号内进行:

| |

We should take a moment to look at the effect of double quotes on command substitution. First let’s look a little deeper at how word splitting works. In our earlier example, we saw how word-splitting appears to remove extra spaces in our text:

我们应该花点时间来看一下双引号对命令替换的影响。首先,让我们更深入地了解词分割是如何工作的。在我们之前的示例中,我们看到词分割似乎删除了文本中的额外空格:

| |

By default, word-splitting looks for the presence of spaces, tabs, and newlines (linefeed characters) and treats them as delimiters between words. This means that unquoted spaces, tabs, and newlines are not considered to be part of the text. They only serve as separators. Since they separate the words into different arguments, our example command line contains a command followed by four distinct arguments. If we add double quotes:

默认情况下,词分割会查找空格、制表符和换行符(换行字符),并将它们视为单词之间的分隔符。这意味着未加引号的空格、制表符和换行符不被视为文本的一部分。它们只是分隔符。由于它们将单词分隔为不同的参数,我们的示例命令行包含一个命令和四个不同的参数。如果我们添加双引号:

| |

word-splitting is suppressed and the embedded spaces are not treated as delimiters, rather they become part of the argument. Once the double quotes are added, our command line contains a command followed by a single argument. The fact that newlines are considered delimiters by the word-splitting mechanism causes an interesting, albeit subtle, effect on command substitution. Consider the following:

词分割被抑制,嵌入的空格不再被视为分隔符,而是成为参数的一部分。一旦添加了双引号,我们的命令行包含一个命令和一个单独的参数。换行符被词分割机制视为分隔符,对命令替换产生了一个有趣但微妙的影响。考虑以下示例:

| |

In the first instance, the unquoted command substitution resulted in a command line containing thirty-eight arguments. In the second, a command line with one argument that includes the embedded spaces and newlines.

在第一个示例中,未加引号的命令替换导致一个包含三十八个参数的命令行。在第二个示例中,命令行只有一个参数,其中包含嵌入的空格和换行符。

单引号 Single Quotes

When we need to suppress all expansions, we use single quotes. Here is a comparison of unquoted, double quotes, and single quotes:

当我们需要抑制所有扩展时,我们使用单引号。以下是未加引号、双引号和单引号的比较:

| |

As we can see, with each succeeding level of quoting, more and more of the expansions are suppressed.

正如我们所看到的,随着引号层次的增加,越来越多的扩展被抑制。

转义字符 Escaping Characters

Sometimes we only want to quote a single character. To do this, we can precede a character with a backslash, which in this context is called the escape character. Often this is done inside double quotes to selectively prevent an expansion:

有时我们只想引用一个单个字符。为此,我们可以在字符前加上反斜杠,这在这个上下文中称为转义字符。通常,这是在双引号内部选择性地阻止扩展的方法:

| |

It is also common to use escaping to eliminate the special meaning of a character in a filename. For example, it is possible to use characters in filenames that normally have special meaning to the shell. These would include “$”, “!”, “&”, " “, and others. To include a special character in a filename we can to this:

在文件名中,使用转义通常是为了消除字符的特殊含义。例如,可以在文件名中使用通常对shell具有特殊含义的字符,包括”$"、"!"、"&"、” “和其他字符。要在文件名中包含特殊字符,我们可以这样做:

| |

To allow a backslash character to appear, escape it by typing “\”. Note that within single quotes, the backslash loses its special meaning and is treated as an ordinary character.

要允许反斜杠字符出现,通过键入”\“来转义它。请注意,在单引号内部,反斜杠失去了其特殊含义,被视为普通字符。

更多反斜杠技巧 More Backslash Tricks

If we look at the man pages for any program written by the GNU project, we will see that in addition to command line options consisting of a dash and a single letter, there are also long option names that begin with two dashes. For example, the following are equivalent:

如果我们查看由GNU项目编写的任何程序的man页,我们会看到除了由破折号和单个字母组成的命令行选项外,还有以两个破折号开头的长选项名。例如,下面两者是等价的:

| |

Why do they support both? The short form is for lazy typists on the command line and the long form is mostly for scripts though some options may only be available in long form. Sometimes it is better to use a long option when the option is obscure or we want to document more clearly what an option is. This is especially useful when writing scripts where maximum readability is desired, and besides, anytime we can save ourselves a trip to the man page is a good thing.

为什么它们都被支持?短格式适用于命令行上的懒惰打字者,而长格式主要用于脚本,尽管某些选项可能仅在长格式中可用。当选项晦涩难懂或者我们想更清楚地记录选项是什么时,使用长选项可能更好。这在编写希望最大可读性的脚本时特别有用,而且,任何时候我们能够节省一次访问man页都是一件好事。

As we might suspect, using the long form options can make a single command line very long. To combat this problem, we can use a backslash to get the shell to ignore a newline character like this:

正如我们所猜测的,使用长格式选项可能会使单个命令行非常长。为了解决这个问题,我们可以使用反斜杠来使shell忽略换行符,像这样:

| |

Using the backslash in this way allows us to embed newlines in our command. Note that for this trick to work, the newline must be typed immediately after the backslash. If we put a space after the backslash, the space will be ignored, not the newline. Backslashes are also used to insert special characters into our text. These are called backslash escape characters. Here are the common ones:

以这种方式使用反斜杠可以在命令中插入换行符。请注意,为使此技巧生效,换行符必须紧跟在反斜杠后面输入。如果在反斜杠后面加上空格,则空格将被忽略,而不是换行符。反斜杠还用于在文本中插入特殊字符。这些特殊字符称为反斜杠转义字符。以下是常见的转义字符:

| 转义字符 Escape Character | 名称 Name | 可能的用途 Possible Uses |

|---|---|---|

| \n | newline | 在文本中添加空行 Adding blank lines to text |

| \t | tab | 在文本中插入水平制表符 Inserting horizontal tabs to text |

| \a | alert | 让我们的终端发出警报 Makes our terminal beep |

| \ | backslash | 插入一个反斜杠 Inserts a backslash |

| \f | formfeed | 将其发送给打印机以弹出页面 Sending this to our printer ejects the page |

The use of the backslash escape characters is very common. This idea first appeared in the C programming language. Today, the shell, C++, Perl, python, awk, tcl, and many other programming languages use this concept. Using the echo command with the -e option will allow us to demonstrate:

使用反斜杠转义字符非常常见。这个概念最初出现在C编程语言中。如今,Shell、C++、Perl、Python、Awk、Tcl和许多其他编程语言都使用了这个概念。使用带有-e选项的echo命令可以让我们进行演示:

| |

2.9 - 权限

权限 Permissions

https://linuxcommand.org/lc3_lts0090.php

The Unix-like operating systems, such as Linux differ from other computing systems in that they are not only multitasking but also multi-user.

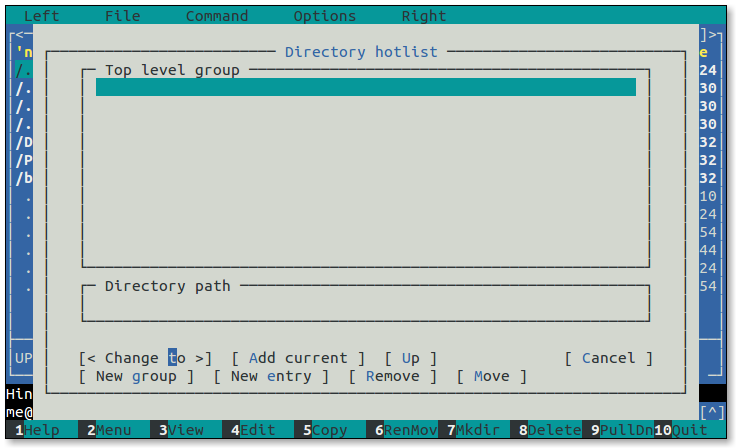

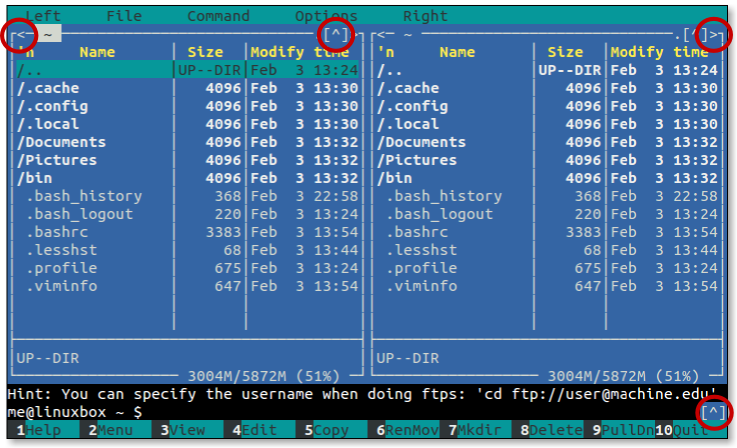

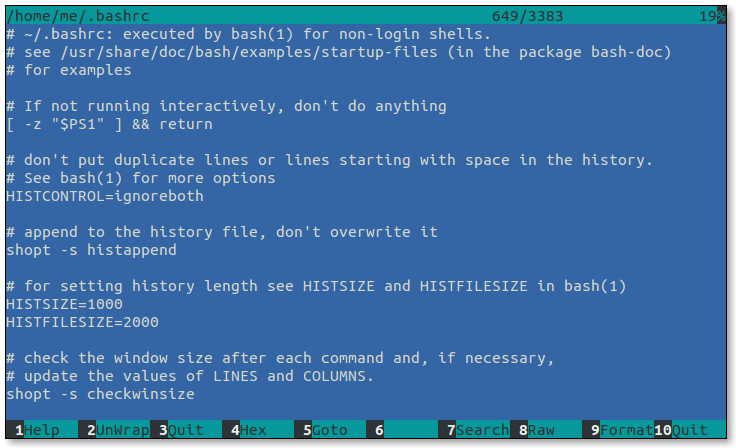

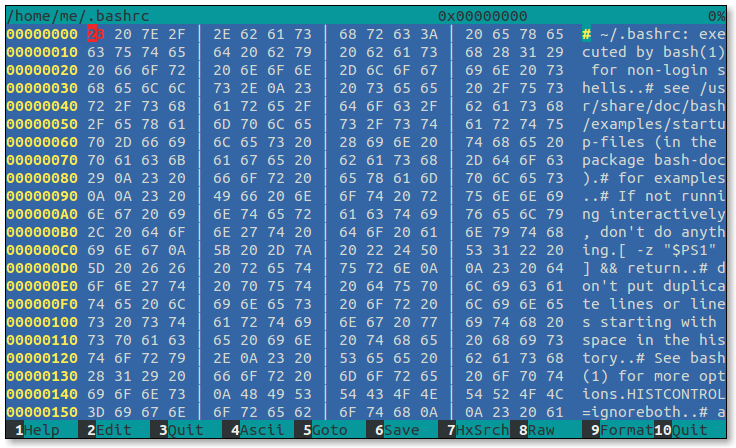

类Unix操作系统(如Linux)与其他计算系统的不同之处在于它们不仅支持多任务,还支持多用户。