
Guides

Task-oriented walkthroughs of common use cases


The documentation covers the following techniques:


- Authentication: An overview of gRPC authentication, including built-in auth mechanisms, and how to plug in your own authentication systems.

- Benchmarking: gRPC is designed to support high-performance open-source RPCs in many languages. This guide describes performance benchmarking tools, the scenarios covered by the tests, and the testing infrastructure.

- Compression: How to compress the data sent over the wire while using gRPC.

- Custom Load Balancing Policies: Explains how custom load balancing policies can help optimize load balancing under unique circumstances.

- Deadlines: Explains how deadlines can be used to deal effectively with unreliable backends.

- Error handling: How gRPC deals with errors, and gRPC error codes.

- Flow Control: Explains what flow control is and how you can manually control it.

- Keepalive: How to use HTTP/2 PING-based keepalives in gRPC.

- Performance Best Practices: A user guide of both general and language-specific best practices to improve performance.

- Wait-for-Ready: Explains how to configure RPCs to wait for the server to be ready before sending the request.

1 - Authentication

https://grpc.io/docs/guides/auth/

An overview of gRPC authentication, including built-in auth mechanisms, and how to plug in your own authentication systems.


Overview

gRPC is designed to work with a variety of authentication mechanisms, making it easy to use gRPC safely to talk to other systems. You can use our supported mechanisms (SSL/TLS, with or without Google token-based authentication), or you can plug in your own authentication system by extending our provided code.

gRPC also provides a simple authentication API that lets you provide all the necessary authentication information as Credentials when creating a channel or making a call.


Supported auth mechanisms

The following authentication mechanisms are built into gRPC:

- SSL/TLS: gRPC has SSL/TLS integration and promotes the use of SSL/TLS to authenticate the server and to encrypt all the data exchanged between the client and the server. Optional mechanisms are available for clients to provide certificates for mutual authentication.

- ALTS: gRPC supports ALTS as a transport security mechanism if the application is running on Google Cloud Platform (GCP). For details, see one of the following language-specific pages: ALTS in C++, ALTS in Go, ALTS in Java, ALTS in Python.

- Token-based authentication with Google: gRPC provides a generic mechanism (described below) to attach metadata-based credentials to requests and responses. Additional support for acquiring access tokens (typically OAuth2 tokens) while accessing Google APIs through gRPC is provided for certain auth flows; you can see how this works in our code examples below. In general, this mechanism must be used together with SSL/TLS on the channel: Google will not allow connections without SSL/TLS, and most gRPC language implementations will not let you send credentials on an unencrypted channel.

Warning

Google credentials should only be used to connect to Google services. Sending a Google-issued OAuth2 token to a non-Google service could result in this token being stolen and used to impersonate the client to Google services.

Authentication API

gRPC provides a simple authentication API based around the unified concept of Credentials objects, which can be used when creating an entire gRPC channel or an individual call.

Credential types

Credentials can be of two types:

- Channel credentials, which are attached to a Channel, such as SSL credentials.

- Call credentials, which are attached to a call (or ClientContext in C++).

You can also combine these in a CompositeChannelCredentials, allowing you to specify, for example, SSL details for the channel along with call credentials for each call made on the channel. A CompositeChannelCredentials associates a ChannelCredentials and a CallCredentials to create a new ChannelCredentials. The result will send the authentication data associated with the composed CallCredentials with every call made on the channel.


For example, you could create a ChannelCredentials from an SslCredentials and an AccessTokenCredentials. The result when applied to a Channel would send the appropriate access token for each call on this channel.


Individual CallCredentials can also be composed using CompositeCallCredentials. When used in a call, the resulting CallCredentials sends the authentication data associated with both of the composed CallCredentials.
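For comparison with the C++ names above, here is a minimal Python sketch of the same composition using grpcio's credential helpers (grpc.ssl_channel_credentials, grpc.access_token_call_credentials, grpc.metadata_call_credentials, grpc.composite_call_credentials, grpc.composite_channel_credentials). The token, the ticket value, and the target host are placeholders, and the TicketPlugin class is hypothetical. Creating the credentials and the channel does not contact any server, because gRPC channels connect lazily.

```python
import grpc

# Channel credentials: TLS using the default root certificates bundled with grpcio.
ssl_creds = grpc.ssl_channel_credentials()

# Call credentials #1: sends "authorization: Bearer <token>" with each call.
# "example-access-token" is a placeholder, not a real token.
token_creds = grpc.access_token_call_credentials("example-access-token")


class TicketPlugin(grpc.AuthMetadataPlugin):
    """Hypothetical plugin that adds a custom ticket header to each call."""

    def __init__(self, ticket):
        self._ticket = ticket

    def __call__(self, context, callback):
        # Metadata is a sequence of (key, value) pairs; None means "no error".
        callback((("x-custom-auth-ticket", self._ticket),), None)


# Call credentials #2, built from the plugin.
ticket_creds = grpc.metadata_call_credentials(TicketPlugin("super-secret-ticket"))

# CompositeCallCredentials: both sets of metadata are sent with each call.
both_call_creds = grpc.composite_call_credentials(token_creds, ticket_creds)

# CompositeChannelCredentials: channel credentials + call credentials produce new
# channel credentials that attach the call metadata to every RPC on the channel.
channel_creds = grpc.composite_channel_credentials(ssl_creds, both_call_creds)

# Channels connect lazily, so this does not contact the placeholder host yet.
channel = grpc.secure_channel("myservice.example.com:443", channel_creds)
channel.close()
```

Every RPC made on such a channel would carry both the bearer token and the custom ticket header over TLS.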

Using client-side SSL/TLS

Now let’s look at how Credentials work with one of our supported auth mechanisms. This is the simplest authentication scenario, where a client just wants to authenticate the server and encrypt all data. The example is in C++, but the API is similar for all languages: you can see how to enable SSL/TLS in more languages in our Examples section below.

```cpp
// Create a default SSL ChannelCredentials object.
auto channel_creds = grpc::SslCredentials(grpc::SslCredentialsOptions());
// Create a channel using the credentials created in the previous step.
auto channel = grpc::CreateChannel(server_name, channel_creds);
// Create a stub on the channel.
std::unique_ptr<Greeter::Stub> stub(Greeter::NewStub(channel));
// Make actual RPC calls on the stub.
grpc::ClientContext context;
grpc::Status s = stub->SayHello(&context, *request, response);
```

For advanced use cases such as modifying the root CA or using client certs, the corresponding options can be set in the SslCredentialsOptions parameter passed to the factory method.


Note

Non-POSIX-compliant systems (such as Windows) need to specify the root certificates in SslCredentialsOptions, since the defaults are only configured for POSIX filesystems.
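The advanced options mentioned above are a custom root CA and a client certificate for mutual TLS. A minimal Python sketch of them follows. In grpcio they correspond to the root_certificates, private_key and certificate_chain arguments of grpc.ssl_channel_credentials. The helper names read_pem and mtls_channel_credentials are hypothetical, and so are any file paths you pass to them. Passing root_certificates explicitly also means the trust store no longer depends on platform defaults.

```python
import grpc


def read_pem(path: str) -> bytes:
    # grpcio expects PEM data as raw bytes.
    with open(path, "rb") as f:
        return f.read()


def mtls_channel_credentials(root_pem: bytes, client_key_pem: bytes,
                             client_chain_pem: bytes) -> grpc.ChannelCredentials:
    return grpc.ssl_channel_credentials(
        root_certificates=root_pem,          # trust this CA bundle (custom root CA)
        private_key=client_key_pem,          # client private key, for mutual TLS
        certificate_chain=client_chain_pem,  # client certificate chain, for mutual TLS
    )
```

A hypothetical usage would be `grpc.secure_channel("myservice.example.com:443", mtls_channel_credentials(read_pem("roots.pem"), read_pem("client.key"), read_pem("client.pem")))`. The certificates are only parsed and checked when the channel actually connects.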

Using Google token-based authentication

gRPC applications can use a simple API to create a credential that works for authentication with Google in various deployment scenarios. Again, our example is in C++, but you can find examples in other languages in our Examples section.

```cpp
auto creds = grpc::GoogleDefaultCredentials();
// Create a channel, stub and make RPC calls (same as in the previous example)
auto channel = grpc::CreateChannel(server_name, creds);
std::unique_ptr<Greeter::Stub> stub(Greeter::NewStub(channel));
grpc::ClientContext context;
grpc::Status s = stub->SayHello(&context, *request, response);
```

This channel credentials object works for applications using Service Accounts as well as for applications running in Google Compute Engine (GCE). In the former case, the service account’s private keys are loaded from the file named in the environment variable GOOGLE_APPLICATION_CREDENTIALS. The keys are used to generate bearer tokens that are attached to each outgoing RPC on the corresponding channel.


For applications running in GCE, a default service account and corresponding OAuth2 scopes can be configured during VM setup. At run-time, this credential handles communication with the authentication systems to obtain OAuth2 access tokens and attaches them to each outgoing RPC on the corresponding channel.


Extending gRPC to support other authentication mechanisms

The Credentials plugin API allows developers to plug in their own type of credentials. This consists of:

- The MetadataCredentialsPlugin abstract class, which contains the pure virtual GetMetadata method that needs to be implemented by a subclass created by the developer.

- The MetadataCredentialsFromPlugin function, which creates a CallCredentials from the MetadataCredentialsPlugin.

Here is an example of a simple credentials plugin that sets an authentication ticket in a custom header.

```cpp
class MyCustomAuthenticator : public grpc::MetadataCredentialsPlugin {
 public:
  MyCustomAuthenticator(const grpc::string& ticket) : ticket_(ticket) {}

  grpc::Status GetMetadata(
      grpc::string_ref service_url, grpc::string_ref method_name,
      const grpc::AuthContext& channel_auth_context,
      std::multimap<grpc::string, grpc::string>* metadata) override {
    metadata->insert(std::make_pair("x-custom-auth-ticket", ticket_));
    return grpc::Status::OK;
  }

 private:
  grpc::string ticket_;
};

auto call_creds = grpc::MetadataCredentialsFromPlugin(
    std::unique_ptr<grpc::MetadataCredentialsPlugin>(
        new MyCustomAuthenticator("super-secret-ticket")));
```

A deeper integration can be achieved by plugging in a gRPC credentials implementation at the core level. gRPC internals also allow switching out SSL/TLS with other encryption mechanisms.


Examples

These authentication mechanisms will be available in all gRPC’s supported languages. The following sections demonstrate how the authentication and authorization features described above appear in each language; more languages are coming soon.

Go

Base case - no encryption or authentication

Client:

```go
conn, _ := grpc.Dial("localhost:50051", grpc.WithTransportCredentials(insecure.NewCredentials()))
// error handling omitted
client := pb.NewGreeterClient(conn)
// ...
```

Server:

```go
s := grpc.NewServer()
lis, _ := net.Listen("tcp", "localhost:50051")
// error handling omitted
s.Serve(lis)
```

With server authentication SSL/TLS

Client:

```go
creds, _ := credentials.NewClientTLSFromFile(certFile, "")
conn, _ := grpc.Dial("localhost:50051", grpc.WithTransportCredentials(creds))
// error handling omitted
client := pb.NewGreeterClient(conn)
// ...
```

Server:

```go
creds, _ := credentials.NewServerTLSFromFile(certFile, keyFile)
s := grpc.NewServer(grpc.Creds(creds))
lis, _ := net.Listen("tcp", "localhost:50051")
// error handling omitted
s.Serve(lis)
```

Authenticate with Google

```go
pool, _ := x509.SystemCertPool()
// error handling omitted
creds := credentials.NewClientTLSFromCert(pool, "")
perRPC, _ := oauth.NewServiceAccountFromFile("service-account.json", scope)
conn, _ := grpc.Dial(
	"greeter.googleapis.com",
	grpc.WithTransportCredentials(creds),
	grpc.WithPerRPCCredentials(perRPC),
)
// error handling omitted
client := pb.NewGreeterClient(conn)
// ...
```

Ruby

Base case - no encryption or authentication

```ruby
stub = Helloworld::Greeter::Stub.new('localhost:50051', :this_channel_is_insecure)
...
```

With server authentication SSL/TLS

```ruby
creds = GRPC::Core::ChannelCredentials.new(load_certs)  # load_certs typically loads a CA roots file
stub = Helloworld::Greeter::Stub.new('myservice.example.com', creds)
```

Authenticate with Google

```ruby
require 'googleauth'  # from http://www.rubydoc.info/gems/googleauth/0.1.0
...
ssl_creds = GRPC::Core::ChannelCredentials.new(load_certs)  # load_certs typically loads a CA roots file
authentication = Google::Auth.get_application_default()
call_creds = GRPC::Core::CallCredentials.new(authentication.updater_proc)
combined_creds = ssl_creds.compose(call_creds)
stub = Helloworld::Greeter::Stub.new('greeter.googleapis.com', combined_creds)
```

C++

Base case - no encryption or authentication

```cpp
auto channel = grpc::CreateChannel("localhost:50051", grpc::InsecureChannelCredentials());
std::unique_ptr<Greeter::Stub> stub(Greeter::NewStub(channel));
...
```

With server authentication SSL/TLS

```cpp
auto channel_creds = grpc::SslCredentials(grpc::SslCredentialsOptions());
auto channel = grpc::CreateChannel("myservice.example.com", channel_creds);
std::unique_ptr<Greeter::Stub> stub(Greeter::NewStub(channel));
...
```

Authenticate with Google

```cpp
auto creds = grpc::GoogleDefaultCredentials();
auto channel = grpc::CreateChannel("greeter.googleapis.com", creds);
std::unique_ptr<Greeter::Stub> stub(Greeter::NewStub(channel));
...
```

Python

Base case - No encryption or authentication

```python
import grpc
import helloworld_pb2
import helloworld_pb2_grpc

channel = grpc.insecure_channel('localhost:50051')
stub = helloworld_pb2_grpc.GreeterStub(channel)
```

With server authentication SSL/TLS

Client:

```python
import grpc
import helloworld_pb2
import helloworld_pb2_grpc

with open('roots.pem', 'rb') as f:
    creds = grpc.ssl_channel_credentials(f.read())
channel = grpc.secure_channel('myservice.example.com:443', creds)
stub = helloworld_pb2_grpc.GreeterStub(channel)
```

Server:

```python
import grpc
import helloworld_pb2
from concurrent import futures

server = grpc.server(futures.ThreadPoolExecutor(max_workers=10))
with open('key.pem', 'rb') as f:
    private_key = f.read()
with open('chain.pem', 'rb') as f:
    certificate_chain = f.read()
server_credentials = grpc.ssl_server_credentials(((private_key, certificate_chain),))
# Adding GreeterServicer to server omitted
server.add_secure_port('myservice.example.com:443', server_credentials)
server.start()
# Server sleep omitted
```

Authenticate with Google using a JWT

```python
import grpc
import helloworld_pb2
import helloworld_pb2_grpc

from google import auth as google_auth
from google.auth import jwt as google_auth_jwt
from google.auth.transport import grpc as google_auth_transport_grpc

credentials, _ = google_auth.default()
jwt_creds = google_auth_jwt.OnDemandCredentials.from_signing_credentials(
    credentials)
channel = google_auth_transport_grpc.secure_authorized_channel(
    jwt_creds, None, 'greeter.googleapis.com:443')
stub = helloworld_pb2_grpc.GreeterStub(channel)
```

Authenticate with Google using an OAuth2 token

```python
import grpc
import helloworld_pb2
import helloworld_pb2_grpc

from google import auth as google_auth
from google.auth.transport import grpc as google_auth_transport_grpc
from google.auth.transport import requests as google_auth_transport_requests

credentials, _ = google_auth.default(scopes=(scope,))
request = google_auth_transport_requests.Request()
channel = google_auth_transport_grpc.secure_authorized_channel(
    credentials, request, 'greeter.googleapis.com:443')
stub = helloworld_pb2_grpc.GreeterStub(channel)
```

With server authentication SSL/TLS and a custom header with token

Client:

```python
import grpc
import helloworld_pb2
import helloworld_pb2_grpc


class GrpcAuth(grpc.AuthMetadataPlugin):
    def __init__(self, key):
        self._key = key

    def __call__(self, context, callback):
        callback((('rpc-auth-header', self._key),), None)


with open('path/to/root-cert', 'rb') as fh:
    root_cert = fh.read()

channel = grpc.secure_channel(
    'myservice.example.com:443',
    grpc.composite_channel_credentials(
        grpc.ssl_channel_credentials(root_cert),
        grpc.metadata_call_credentials(
            GrpcAuth('access_key')
        )
    )
)
stub = helloworld_pb2_grpc.GreeterStub(channel)
```

Server:

```python
from concurrent import futures

import grpc
import helloworld_pb2


class AuthInterceptor(grpc.ServerInterceptor):
    def __init__(self, key):
        self._valid_metadata = ('rpc-auth-header', key)

        def deny(_, context):
            context.abort(grpc.StatusCode.UNAUTHENTICATED, 'Invalid key')

        self._deny = grpc.unary_unary_rpc_method_handler(deny)

    def intercept_service(self, continuation, handler_call_details):
        meta = handler_call_details.invocation_metadata or ()
        # Look for the expected key/value pair anywhere in the metadata, not only
        # in the first entry, since other entries may come before it.
        if self._valid_metadata in meta:
            return continuation(handler_call_details)
        else:
            return self._deny


server = grpc.server(
    futures.ThreadPoolExecutor(max_workers=10),
    interceptors=(AuthInterceptor('access_key'),)
)
with open('key.pem', 'rb') as f:
    private_key = f.read()
with open('chain.pem', 'rb') as f:
    certificate_chain = f.read()
server_credentials = grpc.ssl_server_credentials(((private_key, certificate_chain),))
# Adding GreeterServicer to server omitted
server.add_secure_port('myservice.example.com:443', server_credentials)
server.start()
# Server sleep omitted
```

Java

Base case - no encryption or authentication

```java
ManagedChannel channel = Grpc.newChannelBuilder(
        "localhost:50051", InsecureChannelCredentials.create())
    .build();
GreeterGrpc.GreeterStub stub = GreeterGrpc.newStub(channel);
```

With server authentication SSL/TLS

In Java we recommend that you use netty-tcnative with BoringSSL when using gRPC over TLS. You can find details about installing and using netty-tcnative and other required libraries for both Android and non-Android Java in the gRPC Java Security documentation.

To enable TLS on a server, a certificate chain and a private key need to be specified in PEM format. The private key must not be password-protected. The order of certificates in the chain matters: the certificate at the top must be the server's own certificate, followed by any intermediate CA certificates, with the root CA at the very bottom. The standard TLS port is 443, but we use 8443 below to avoid needing extra permissions from the OS.

```java
ServerCredentials creds = TlsServerCredentials.create(certChainFile, privateKeyFile);
Server server = Grpc.newServerBuilderForPort(8443, creds)
    .addService(TestServiceGrpc.bindService(serviceImplementation))
    .build();
server.start();
```

If the issuing certificate authority is not known to the client then it can be configured using TlsChannelCredentials.newBuilder().


On the client side, server authentication with SSL/TLS looks like this:


```java
// With server authentication SSL/TLS
ManagedChannel channel = Grpc.newChannelBuilder(
        "myservice.example.com:443", TlsChannelCredentials.create())
    .build();
GreeterGrpc.GreeterStub stub = GreeterGrpc.newStub(channel);

// With server authentication SSL/TLS; custom CA root certificates
ChannelCredentials creds = TlsChannelCredentials.newBuilder()
    .trustManager(new File("roots.pem"))
    .build();
ManagedChannel channel = Grpc.newChannelBuilder("myservice.example.com:443", creds)
    .build();
GreeterGrpc.GreeterStub stub = GreeterGrpc.newStub(channel);
```

Authenticate with Google

The following code snippet shows how you can call the Google Cloud Pub/Sub API using gRPC with a service account. The credentials are loaded from a key stored in a well-known location, or by detecting that the application is running in an environment that can provide one automatically, e.g. Google Compute Engine. While this example is specific to Google and its services, similar patterns can be followed for other service providers.

```java
ChannelCredentials creds = CompositeChannelCredentials.create(
    TlsChannelCredentials.create(),
    MoreCallCredentials.from(GoogleCredentials.getApplicationDefault()));
ManagedChannel channel = Grpc.newChannelBuilder("greeter.googleapis.com", creds)
    .build();
GreeterGrpc.GreeterStub stub = GreeterGrpc.newStub(channel);
```

Node.js

Base case - No encryption/authentication

```javascript
var stub = new helloworld.Greeter('localhost:50051', grpc.credentials.createInsecure());
```

With server authentication SSL/TLS

```javascript
const root_cert = fs.readFileSync('path/to/root-cert');
const ssl_creds = grpc.credentials.createSsl(root_cert);
const stub = new helloworld.Greeter('myservice.example.com', ssl_creds);
```

Authenticate with Google

```javascript
// Authenticating with Google
var GoogleAuth = require('google-auth-library'); // from https://www.npmjs.com/package/google-auth-library
...
var ssl_creds = grpc.credentials.createSsl(root_certs);
(new GoogleAuth()).getApplicationDefault(function(err, auth) {
  if (err) throw err;
  var call_creds = grpc.credentials.createFromGoogleCredential(auth);
  var combined_creds = grpc.credentials.combineChannelCredentials(ssl_creds, call_creds);
  var stub = new helloworld.Greeter('greeter.googleapis.com', combined_creds);
});
```

Authenticate with Google using OAuth2 token (legacy approach)

```javascript
var GoogleAuth = require('google-auth-library'); // from https://www.npmjs.com/package/google-auth-library
...
var ssl_creds = grpc.credentials.createSsl(root_certs); // root_certs typically holds the contents of a CA roots file
var scope = 'https://www.googleapis.com/auth/grpc-testing';
(new GoogleAuth()).getApplicationDefault(function(err, auth) {
  if (err) throw err;
  if (auth.createScopeRequired()) {
    auth = auth.createScoped(scope);
  }
  var call_creds = grpc.credentials.createFromGoogleCredential(auth);
  var combined_creds = grpc.credentials.combineChannelCredentials(ssl_creds, call_creds);
  var stub = new helloworld.Greeter('greeter.googleapis.com', combined_creds);
});
```

With server authentication SSL/TLS and a custom header with token

```javascript
const rootCert = fs.readFileSync('path/to/root-cert');
const channelCreds = grpc.credentials.createSsl(rootCert);
const metaCallback = (_params, callback) => {
  const meta = new grpc.Metadata();
  meta.add('custom-auth-header', 'token');
  callback(null, meta);
};
const callCreds = grpc.credentials.createFromMetadataGenerator(metaCallback);
const combCreds = grpc.credentials.combineChannelCredentials(channelCreds, callCreds);
const stub = new helloworld.Greeter('myservice.example.com', combCreds);
```

PHP

Base case - No encryption/authentication

```php
$client = new helloworld\GreeterClient('localhost:50051', [
    'credentials' => Grpc\ChannelCredentials::createInsecure(),
]);
```

With server authentication SSL/TLS

```php
$client = new helloworld\GreeterClient('myservice.example.com', [
    'credentials' => Grpc\ChannelCredentials::createSsl(file_get_contents('roots.pem')),
]);
```

Authenticate with Google

```php
function updateAuthMetadataCallback($context)
{
    $auth_credentials = Google\Auth\ApplicationDefaultCredentials::getCredentials();
    return $auth_credentials->updateMetadata([], $context->service_url);
}
$channel_credentials = Grpc\ChannelCredentials::createComposite(
    Grpc\ChannelCredentials::createSsl(file_get_contents('roots.pem')),
    Grpc\CallCredentials::createFromPlugin('updateAuthMetadataCallback')
);
$opts = [
  'credentials' => $channel_credentials
];
$client = new helloworld\GreeterClient('greeter.googleapis.com', $opts);
```

Authenticate with Google using OAuth2 token (legacy approach)

```php
// the environment variable "GOOGLE_APPLICATION_CREDENTIALS" needs to be set
$scope = "https://www.googleapis.com/auth/grpc-testing";
$auth = Google\Auth\ApplicationDefaultCredentials::getCredentials($scope);
$opts = [
  'credentials' => Grpc\ChannelCredentials::createSsl(file_get_contents('roots.pem')),
  'update_metadata' => $auth->getUpdateMetadataFunc(),
];
$client = new helloworld\GreeterClient('greeter.googleapis.com', $opts);
```

Dart

Base case - no encryption or authentication

```dart
final channel = new ClientChannel('localhost',
    port: 50051,
    options: const ChannelOptions(
        credentials: const ChannelCredentials.insecure()));
final stub = new GreeterClient(channel);
```

With server authentication SSL/TLS

```dart
// Load a custom roots file.
final trustedRoot = new File('roots.pem').readAsBytesSync();
final channelCredentials =
    new ChannelCredentials.secure(certificates: trustedRoot);
final channelOptions = new ChannelOptions(credentials: channelCredentials);
final channel = new ClientChannel('myservice.example.com',
    options: channelOptions);
final client = new GreeterClient(channel);
```

Authenticate with Google

```dart
// Uses publicly trusted roots by default.
final channel = new ClientChannel('greeter.googleapis.com');
final serviceAccountJson =
    new File('service-account.json').readAsStringSync();
final credentials = new JwtServiceAccountAuthenticator(serviceAccountJson);
final client =
    new GreeterClient(channel, options: credentials.toCallOptions);
```

Authenticate a single RPC call

```dart
// Uses publicly trusted roots by default.
final channel = new ClientChannel('greeter.googleapis.com');
final client = new GreeterClient(channel);
...
final serviceAccountJson =
    new File('service-account.json').readAsStringSync();
final credentials = new JwtServiceAccountAuthenticator(serviceAccountJson);
final response =
    await client.sayHello(request, options: credentials.toCallOptions);
```

2 - Benchmarking

gRPC is designed to support high-performance open-source RPCs in many languages. This page describes performance benchmarking tools, scenarios considered by tests, and the testing infrastructure.


Overview

gRPC is designed to enable both high-performance and high-productivity development of distributed applications. Continuous performance benchmarking is a critical part of the gRPC development workflow. Multi-language performance tests run every few hours against the master branch, and the results are reported to a dashboard for visualization.

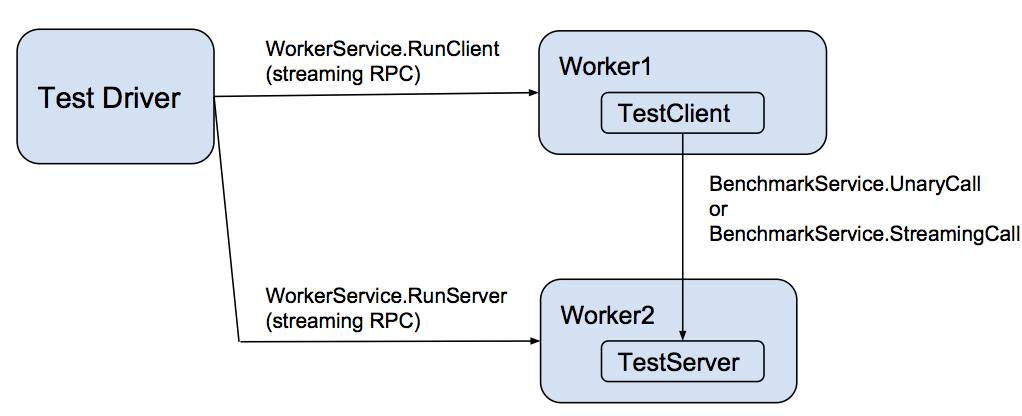

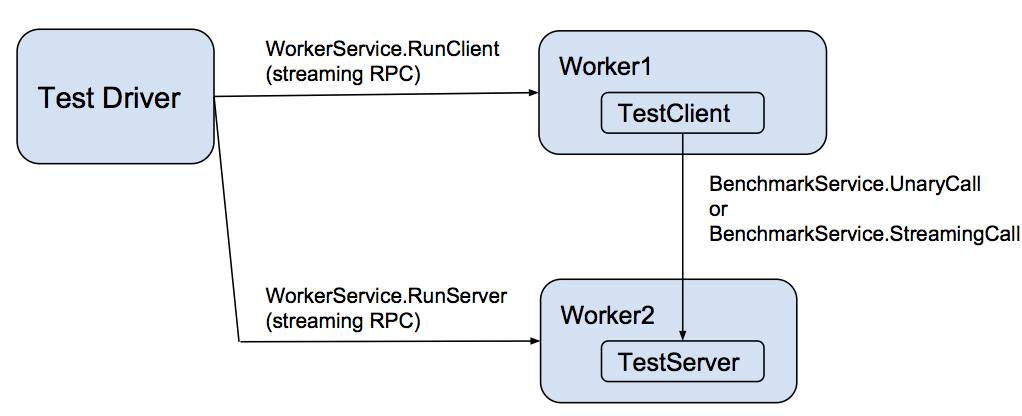

Each language implements a performance testing worker that implements the gRPC WorkerService. This service directs the worker to act as either a client or a server for the actual benchmark test, which is represented as BenchmarkService. That service has two methods:

- UnaryCall – a unary RPC of a simple request that specifies the number of bytes to return in the response.

- StreamingCall – a streaming RPC that allows repeated ping-pongs of request and response messages akin to the UnaryCall.

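The two BenchmarkService methods can be illustrated with a minimal Python sketch: a server that exposes UnaryCall and StreamingCall, and a client that ping-pongs on a single stream. This is not the real benchmark code. The service name demo.BenchmarkService and the 4-byte size encoding are assumptions, chosen so that the sketch needs no generated protobuf code. It uses grpcio's generic handler API instead.

```python
import queue
import struct
from concurrent import futures

import grpc

# Hypothetical encoding for this sketch: each request is a 4-byte big-endian
# integer giving how many bytes the server should send back. The real
# benchmark service uses protobuf messages instead.
SERVICE = "demo.BenchmarkService"


def _payload_for(request: bytes) -> bytes:
    (size,) = struct.unpack(">I", request)
    return b"\x00" * size


def unary_call(request, context):
    # UnaryCall: one request in, one response of the requested size out.
    return _payload_for(request)


def streaming_call(request_iterator, context):
    # StreamingCall: answer each request as it arrives, so a client can
    # ping-pong request/response pairs on one long-lived stream.
    for request in request_iterator:
        yield _payload_for(request)


def start_server():
    server = grpc.server(futures.ThreadPoolExecutor(max_workers=4))
    server.add_generic_rpc_handlers((grpc.method_handlers_generic_handler(SERVICE, {
        "UnaryCall": grpc.unary_unary_rpc_method_handler(unary_call),
        "StreamingCall": grpc.stream_stream_rpc_method_handler(streaming_call),
    }),))
    port = server.add_insecure_port("localhost:0")
    server.start()
    return server, port


def ping_pong(channel, sizes):
    """Send one request at a time on a single stream, waiting for each reply."""
    outbox = queue.Queue()

    def requests():
        while True:
            item = outbox.get()
            if item is None:  # sentinel: half-close the stream
                return
            yield item

    call = channel.stream_stream(f"/{SERVICE}/StreamingCall")
    responses = call(requests(), timeout=10)
    lengths = []
    for size in sizes:
        outbox.put(struct.pack(">I", size))
        lengths.append(len(next(responses)))  # block until this reply arrives
    outbox.put(None)
    for _ in responses:  # drain until the server finishes the stream
        pass
    return lengths
```

Call `server, port = start_server()` and open a channel to `localhost:{port}`. A UnaryCall request of `struct.pack(">I", 16)` then returns 16 zero bytes, and `ping_pong(channel, [1, 2, 3])` returns `[1, 2, 3]`. Waiting for each reply before sending the next request keeps exactly one message outstanding per stream.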

These workers are controlled by a driver that takes as input a scenario description (in JSON format) and an environment variable specifying the host:port of each worker process.


Languages under test

The following languages have continuous performance testing as both clients and servers on the master branch:

- C++

- Java

- Go

- C#

- Node.js

- Python

- Ruby

In addition to running as both the client-side and server-side of performance tests, all languages are tested as clients against a C++ server, and as servers against a C++ client. This test aims to provide the current upper bound of performance for a given language’s client or server implementation without testing the other side.


Although PHP and mobile environments do not support a gRPC server (which our performance tests need), their client-side performance can be benchmarked using a proxy WorkerService written in another language. This proxy is implemented for PHP but is not yet in continuous testing mode.

Scenarios under test

There are several important scenarios under test and displayed in the dashboards above, including the following:

- Contentionless latency – the median and tail response latencies seen with only 1 client sending a single message at a time using StreamingCall.

- QPS – the messages/second rate when there are 2 clients and a total of 64 channels, each of which has 100 outstanding messages at a time sent using StreamingCall.

- Scalability (for selected languages) – the number of messages/second per server core.

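The scenario parameters can be worked through directly. In the QPS scenario, 64 channels with 100 outstanding messages each keep 6,400 messages in flight. The sketch below also shows one way to turn latency samples into the median and tail figures. The nearest-rank percentile method is an assumption made for illustration, because the dashboards' exact method is not described here. per_core_rate divides throughput by the number of server cores, as the scalability metric does.

```python
def outstanding_messages(channels: int, per_channel: int) -> int:
    """Messages in flight at any moment when every channel keeps per_channel outstanding."""
    return channels * per_channel


def nearest_rank_percentile(samples, pct: int):
    """pct-th percentile (1..100) by the nearest-rank method."""
    if not samples or not 1 <= pct <= 100:
        raise ValueError("need samples and 1 <= pct <= 100")
    ordered = sorted(samples)
    rank = -(-pct * len(ordered) // 100)  # ceil(pct * n / 100) using integer math
    return ordered[rank - 1]


def per_core_rate(messages_per_second: float, server_cores: int) -> float:
    """Scalability metric: throughput divided by the number of server cores."""
    return messages_per_second / server_cores


# QPS scenario from the list above: 64 channels x 100 outstanding messages.
print(outstanding_messages(64, 100))  # 6400
```

For contentionless latency, you would collect one sample per round trip from the single client. The 50th percentile of those samples is the median, and a high percentile such as the 99th describes the tail.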

Most performance tests use secure communication and protobufs. Some C++ tests additionally use insecure communication and the generic (non-protobuf) API to display peak performance. Additional scenarios may be added in the future.

Testing infrastructure 测试基础架构

All performance benchmarks are run in our dedicated GKE cluster, where each benchmark worker (a client or a server) gets scheduled to a different GKE node (and each GKE node is a separate GCE VM) in one of our worker pools. The source code for the benchmarking framework we use is publicly available in the test-infra GitHub repository.

所有性能基准测试都在我们专用的GKE集群中运行,其中每个基准测试工作器(客户端或服务器)都会被调度到我们某个工作池中的不同GKE节点上(每个GKE节点是一个单独的GCE VM)。我们使用的基准测试框架的源代码可在test-infra GitHub存储库中公开获取。

Most test instances are 8-core systems, and these are used for both latency and QPS measurement. For C++ and Java, we additionally support QPS testing on 32-core systems. All QPS tests use 2 identical client machines for each server, to make sure that QPS measurement is not client-limited.

大多数测试实例都是8核系统,用于延迟和QPS测量。对于C++和Java,我们还支持在32核系统上进行QPS测试。所有QPS测试都为每台服务器配备2台相同的客户端机器,以确保QPS测量结果不受客户端性能的限制。

3 - Compression

Compression 压缩

How to compress the data sent over the wire while using gRPC.

如何在使用gRPC时压缩通过网络发送的数据。

Overview 概述

Compression is used to reduce the amount of bandwidth used when communicating between peers, and can be enabled or disabled at the call or message level in all languages. For some languages, it is also possible to control compression settings at the channel level. Different languages also support different compression algorithms, including custom compressors.

压缩用于在对等方之间通信时减少带宽使用量,并且可以基于调用或消息级别在所有语言中启用或禁用。对于某些语言,还可以在通道级别上控制压缩设置。不同的语言还支持不同的压缩算法,包括自定义的压缩器。

Compression Method Asymmetry Between Peers 对等方之间的压缩方法不对称性

gRPC allows asymmetrically compressed communication, whereby a response may be compressed differently from the request, or not compressed at all. A gRPC peer may choose to respond using a different compression method from that of the request, including not performing any compression, regardless of channel and RPC settings (for example, if compression would yield only small or negative gains).

gRPC允许不对称压缩通信,其中响应可以与请求以不同的方式进行压缩,或者根本不进行压缩。不论通道和RPC设置如何,gRPC对等方可以选择使用与请求不同的压缩方法来响应,包括不执行任何压缩(例如,如果压缩会导致较小或负面的收益)。

If a client message is compressed by an algorithm that is not supported by a server, the message will result in an UNIMPLEMENTED error status on the server. The server will include a grpc-accept-encoding header in the response, which specifies the algorithms that the server accepts.

如果客户端消息使用服务器不支持的算法进行压缩,则该消息会导致服务器返回UNIMPLEMENTED错误状态。服务器将在响应中包含一个grpc-accept-encoding头,用于指定服务器接受的算法。

If the client message is compressed using one of the algorithms from the grpc-accept-encoding header and an UNIMPLEMENTED error status is returned from the server, the cause of the error won’t be related to compression.

如果客户端消息使用grpc-accept-encoding头中的算法之一进行压缩,并且从服务器返回UNIMPLEMENTED错误状态,则错误的原因与压缩无关。

Note that a peer may choose to not disclose all the encodings it supports. However, if it receives a message compressed in an undisclosed but supported encoding, it will include said encoding in the response’s grpc-accept-encoding header.

注意,对等方可能选择不披露其支持的所有编码。但是,如果它接收到使用未披露但受支持的编码进行压缩的消息,则会在响应的grpc-accept-encoding头中包含该编码。

For every message a server is requested to compress using an algorithm it knows the client doesn’t support (as indicated by the last grpc-accept-encoding header received from the client), it will send the message uncompressed.

对于服务器被要求使用某种算法压缩的每条消息,如果服务器知道客户端不支持该算法(依据从客户端收到的最后一个grpc-accept-encoding头判断),服务器将以未压缩的形式发送该消息。
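The negotiation rules above can be sketched as two small decisions on the server: whether an incoming `grpc-encoding` can be decoded, and which encoding to use for an outgoing message given the client's last `grpc-accept-encoding` list. This is a simplified model, not gRPC's implementation; the helper names are hypothetical, and `identity` is used as the name for "no compression".

```cpp
#include <cassert>
#include <set>
#include <sstream>
#include <string>

// Parses a grpc-accept-encoding value such as "gzip, deflate" into a set of
// algorithm names, trimming the spaces around each entry.
std::set<std::string> ParseAcceptEncoding(const std::string& header) {
  std::set<std::string> algorithms;
  std::stringstream ss(header);
  std::string token;
  while (std::getline(ss, token, ',')) {
    const auto first = token.find_first_not_of(' ');
    if (first == std::string::npos) continue;  // empty entry
    const auto last = token.find_last_not_of(' ');
    algorithms.insert(token.substr(first, last - first + 1));
  }
  return algorithms;
}

// Incoming message: can the server decode the client's grpc-encoding?
// If not, the RPC fails with UNIMPLEMENTED and the server advertises its
// own grpc-accept-encoding list in the response.
bool ServerCanDecode(const std::string& grpc_encoding,
                     const std::set<std::string>& server_supported) {
  return grpc_encoding == "identity" || server_supported.count(grpc_encoding) > 0;
}

// Outgoing message: use the requested algorithm only if the client's last
// grpc-accept-encoding listed it; otherwise send the message uncompressed.
std::string ChooseResponseEncoding(const std::string& requested,
                                   const std::set<std::string>& client_accepts) {
  return client_accepts.count(requested) > 0 ? requested : std::string("identity");
}
```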

Specific Disabling of Compression 明确禁用压缩

If the user requests to disable compression, the next message will be sent uncompressed. This is instrumental in preventing BEAST and CRIME attacks. This applies to both the unary and streaming cases.

如果用户请求禁用压缩,下一条消息将以未压缩的形式发送。这对于防止BEAST和CRIME攻击至关重要。这适用于一元和流式的情况。
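On the wire, gRPC prefixes every message with a 1-byte compressed flag and a 4-byte big-endian length (the length-prefixed message format from the gRPC over HTTP/2 protocol description), so compression can be switched off for an individual message by clearing that flag and sending the raw bytes. The sketch below builds such a frame; `FrameMessage` is a hypothetical helper, and the payload is assumed to be already compressed whenever `compressed` is true.

```cpp
#include <cassert>
#include <cstdint>
#include <string>
#include <vector>

// Builds a gRPC length-prefixed message: a 1-byte compressed flag, a 4-byte
// big-endian payload length, then the payload itself. For a message whose
// compression the user disabled, pass the raw bytes with compressed = false.
std::vector<std::uint8_t> FrameMessage(const std::string& payload,
                                       bool compressed) {
  std::vector<std::uint8_t> frame;
  frame.reserve(5 + payload.size());
  frame.push_back(static_cast<std::uint8_t>(compressed ? 1 : 0));
  const auto length = static_cast<std::uint32_t>(payload.size());
  for (int shift = 24; shift >= 0; shift -= 8) {
    frame.push_back(static_cast<std::uint8_t>((length >> shift) & 0xFFu));
  }
  frame.insert(frame.end(), payload.begin(), payload.end());
  return frame;
}
```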

Language guides and examples 语言指南和示例

Additional Resources 其他资源

4 - Custom Load Balancing Policies

Custom Load Balancing Policies 自定义负载均衡策略

https://grpc.io/docs/guides/custom-load-balancing/

Explains how custom load balancing policies can help optimize load balancing under unique circumstances.

解释了如何使用自定义负载均衡策略在特定情况下优化负载均衡。

Overview 概述

One of the key features of gRPC is load balancing, which allows requests from clients to be distributed across multiple servers. This helps prevent any one server from becoming overloaded and allows the system to scale up by adding more servers.

gRPC的关键功能之一是负载均衡,它允许来自客户端的请求分布到多个服务器上。这有助于防止任何一个服务器过载,并允许通过添加更多服务器来扩展系统。

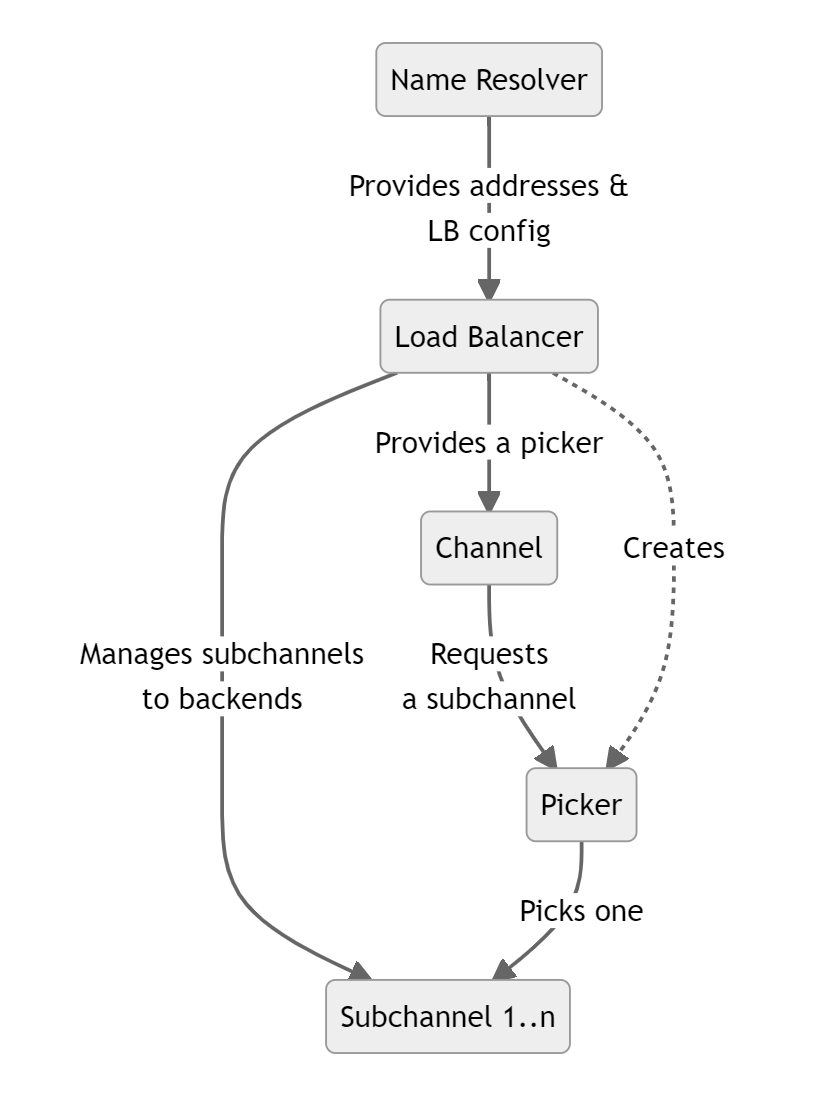

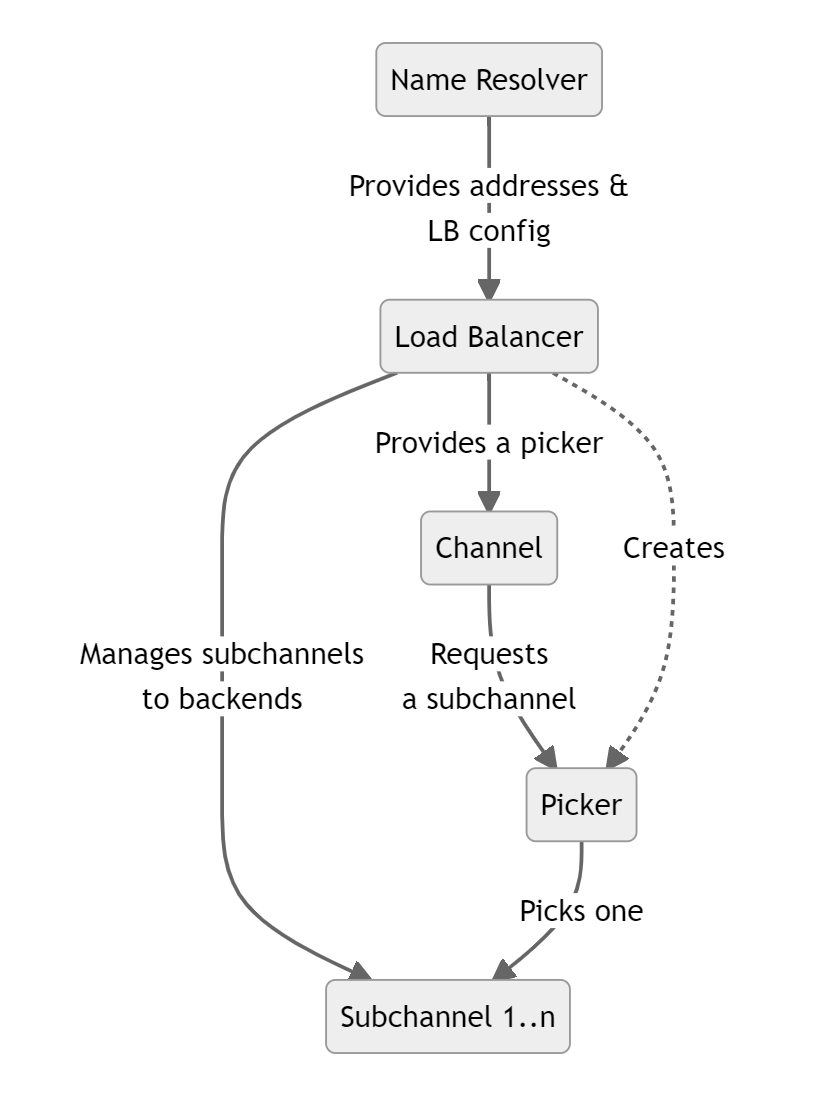

A gRPC load balancing policy is given a list of server IP addresses by the name resolver. The policy is responsible for maintaining connections (subchannels) to the servers and picking a connection to use when an RPC is sent.

gRPC负载均衡策略由名称解析器提供一个服务器IP地址列表。该策略负责维护与服务器的连接(子通道),并在发送RPC时选择要使用的连接。

Implementing Your Own Policy 实现自定义策略

By default the pick_first policy will be used. This policy actually does no load balancing: it just tries each address it gets from the name resolver and uses the first one it can connect to. By updating the gRPC service config you can also switch to round_robin, which connects to every address it gets and rotates through the connected backends for each RPC. There are also some other load balancing policies available, but the exact set varies by language. If the built-in policies do not meet your needs you can also implement your own custom policy.

默认情况下,将使用pick_first策略。该策略实际上不进行负载均衡,只是尝试连接名称解析器获取的每个地址,并使用其中第一个可连接的地址。通过更新gRPC服务配置,还可以切换到使用round_robin策略,该策略连接获取到的每个地址,并为每个RPC在已连接的后端之间进行轮询。还提供了其他一些负载均衡策略,但具体的可用策略因语言而异。如果内置的策略无法满足您的需求,您还可以实现自定义策略。
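The difference between the two built-in policies can be seen in a toy model of their pickers. This is a sketch under simplifying assumptions: `Subchannel` is a hypothetical stand-in for a connection, readiness is a plain flag, and the real pick_first also drives connection attempts in address order rather than just inspecting state.

```cpp
#include <cassert>
#include <cstddef>
#include <optional>
#include <string>
#include <vector>

// Hypothetical view of a subchannel: its address and whether it is READY.
struct Subchannel {
  std::string address;
  bool ready;
};

// pick_first: use the first address, in resolver order, that is connected.
std::optional<std::string> PickFirst(const std::vector<Subchannel>& subchannels) {
  for (const auto& sc : subchannels) {
    if (sc.ready) return sc.address;
  }
  return std::nullopt;  // nothing connected yet
}

// round_robin: rotate through the READY subchannels, one per RPC.
class RoundRobinPicker {
 public:
  explicit RoundRobinPicker(const std::vector<Subchannel>& subchannels) {
    for (const auto& sc : subchannels) {
      if (sc.ready) ready_.push_back(sc.address);
    }
  }

  std::optional<std::string> Pick() {
    if (ready_.empty()) return std::nullopt;
    const std::string& chosen = ready_[next_ % ready_.size()];
    ++next_;
    return chosen;
  }

 private:
  std::vector<std::string> ready_;
  std::size_t next_ = 0;
};
```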

This involves implementing a load balancer interface in the language you are using. At a high level, you will have to:

这涉及在您使用的语言中实现一个负载均衡器接口。在高层上,您需要:

- Register your implementation in the load balancer registry so that it can be referred to from the service config

- Parse the JSON configuration object of your implementation. This allows your load balancer to be configured in the service config with any arbitrary JSON you choose to support

- Manage what backends to maintain a connection with

- Implement a picker that will choose which backend to connect to when an RPC is made. Note that this needs to be a fast operation as it is on the RPC call path

- To enable your load balancer, configure it in your service config

- 在负载均衡器注册表中注册您的实现,以便可以从服务配置中引用它

- 解析您的实现的JSON配置对象。这允许您的负载均衡器在服务配置中以您选择支持的任意JSON进行配置

- 管理要与之保持连接的后端

- 实现一个 picker,在进行RPC调用时选择要连接的后端。请注意,这必须是一个快速的操作,因为它位于RPC调用路径上

- 要启用您的负载均衡器,请在服务配置中进行配置

The exact steps vary by language; see the language support section for some concrete examples in your language.

具体的步骤因语言而异,请参阅语言支持部分,了解您所使用的语言中的一些具体示例。

Backend Metrics 后端指标

What if your load balancing policy needs to know what is going on with the backend servers in real-time? For this you can rely on backend metrics. You can have metrics provided to you either in-band, in the backend RPC responses, or out-of-band as separate RPCs from the backends. Standard metrics like CPU and memory utilization are provided but you can also implement your own, custom metrics.

如果您的负载均衡策略需要实时了解后端服务器的情况怎么办?为此,您可以依赖后端指标。指标既可以通过带内方式(在后端的RPC响应中)提供,也可以通过带外方式(作为来自后端的单独RPC)提供。系统提供了CPU和内存利用率等标准指标,您也可以实现自己的自定义指标。
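As an illustration of how backend metrics could feed a picking decision, the sketch below picks the backend currently reporting the lowest CPU utilization. `BackendLoad` and `PickLeastLoaded` are hypothetical, and how the utilization values arrive (in-band or out-of-band) is abstracted away.

```cpp
#include <cassert>
#include <optional>
#include <string>
#include <vector>

// Hypothetical snapshot of the latest metrics reported by one backend.
// cpu_utilization is a fraction between 0.0 and 1.0.
struct BackendLoad {
  std::string address;
  double cpu_utilization;
};

// Picks the backend currently reporting the lowest CPU utilization.
std::optional<std::string> PickLeastLoaded(const std::vector<BackendLoad>& backends) {
  const BackendLoad* best = nullptr;
  for (const auto& b : backends) {
    if (best == nullptr || b.cpu_utilization < best->cpu_utilization) best = &b;
  }
  if (best == nullptr) return std::nullopt;  // no backends known
  return best->address;
}
```

Always choosing the minimum is deliberately simplistic: between metric reports, every client may pile onto the same backend, so a production policy would more likely weight its choices by the reported load.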

For more information on this, please see the custom backend metrics guide (TBD)

有关详细信息,请参阅自定义后端指标指南(待定)。

Service Mesh 服务网格

If you have a service mesh setup where a central control plane is coordinating the configuration of your microservices, you cannot configure your custom load balancer directly via the service config. But support is provided to do this with the xDS protocol that your control plane uses to communicate with your gRPC clients. Please refer to your control plane documentation to determine how custom load balancing configuration is supported.

如果您设置了一个服务网格,其中一个中央控制平面协调您的微服务的配置,您不能直接通过服务配置来配置您的自定义负载均衡器。但是,通过xDS协议提供了支持,该协议是您的控制平面用于与gRPC客户端通信的。请参阅您的控制平面文档,了解如何支持自定义负载均衡配置。

For more details, please see gRPC proposal A52.

有关详细信息,请参阅gRPC的提案A52。

Language Support 语言支持

| Language | Example | Notes |
|---|---|---|
| Java | Java example | |
| Go | | Examples and xDS support coming soon |
| C++ | | Not yet supported |

5 - Deadlines

Deadlines 截止时间

https://grpc.io/docs/guides/deadlines/

Explains how deadlines can be used to effectively deal with unreliable backends.

解释了如何使用截止时间有效地处理不可靠的后端。

Overview 概述

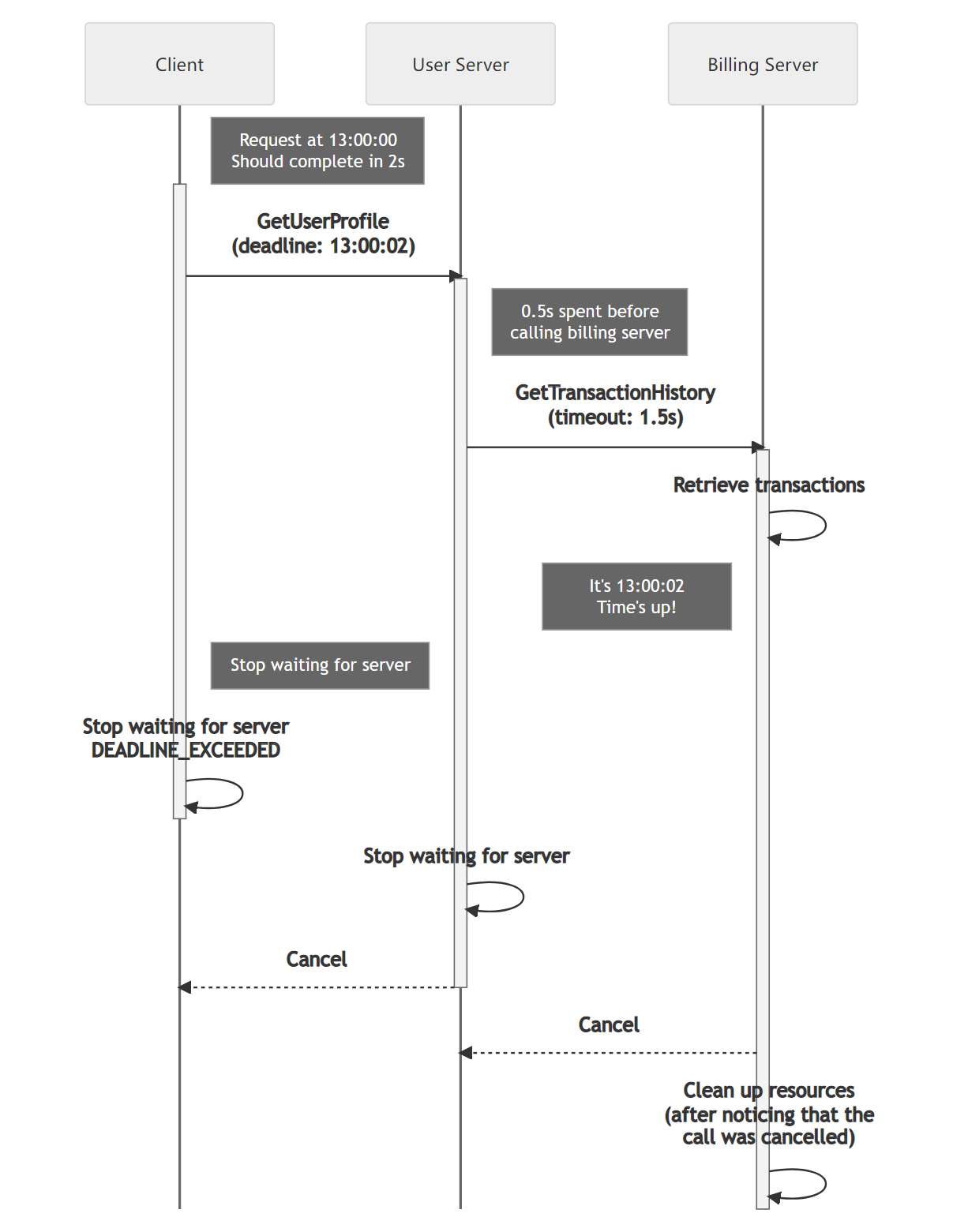

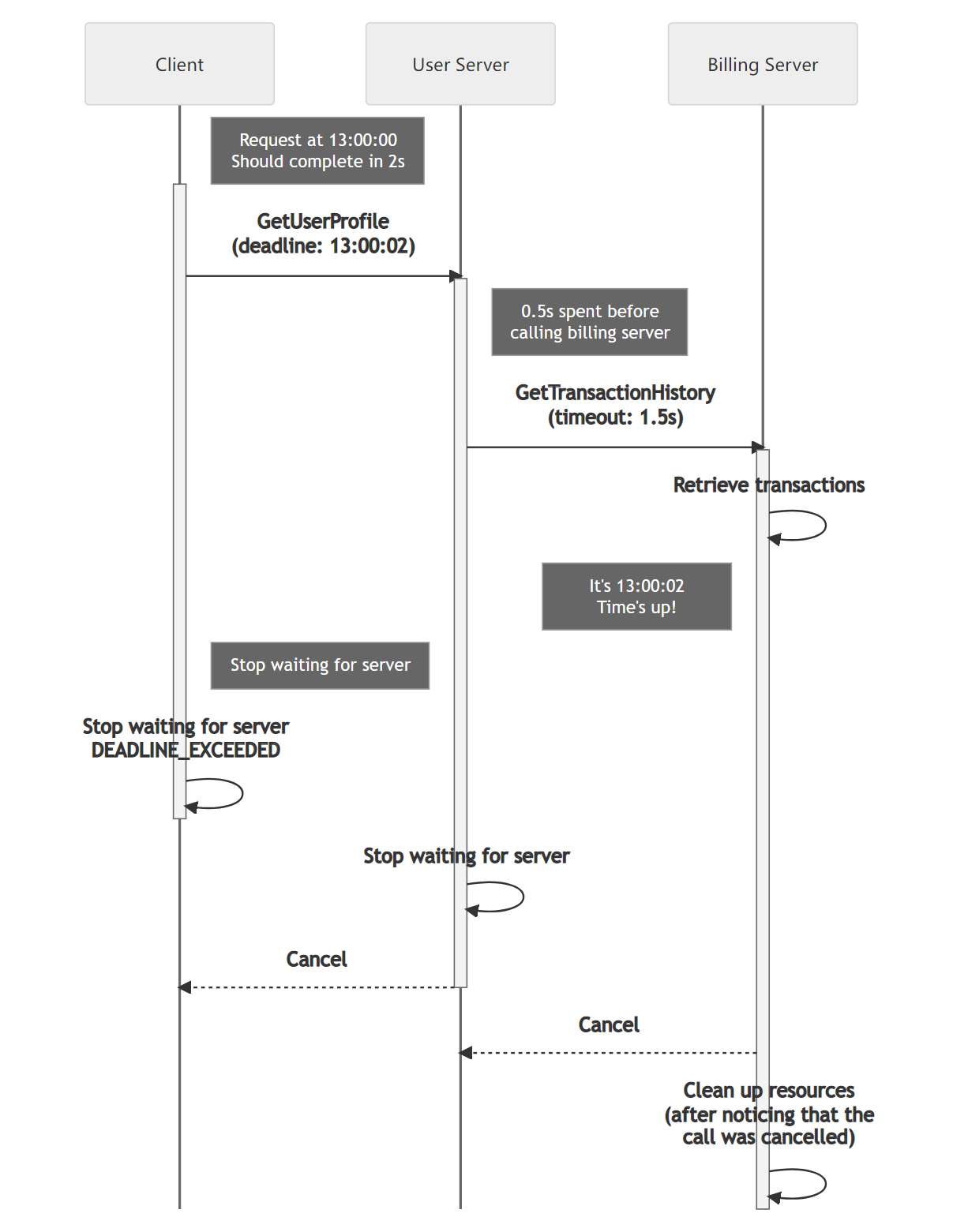

A deadline is used to specify a point in time past which a client is unwilling to wait for a response from a server. This simple idea is very important in building robust distributed systems. Clients that do not wait around unnecessarily and servers that know when to give up processing requests will improve the resource utilization and latency of your system.

截止时间用于指定一个时间点,超过该时间点后客户端不愿再等待服务器的响应。这个简单的概念在构建健壮的分布式系统中非常重要。不做不必要等待的客户端,以及知道何时放弃处理请求的服务器,将改善系统的资源利用率和延迟。

Note that while some language APIs have the concept of a deadline, others use the idea of a timeout. When an API asks for a deadline, you provide a point in time which the request should not go past. A timeout is the max duration of time that the request can take. For simplicity, we will only refer to deadline in this document.

请注意,尽管某些语言的API中有截止时间的概念,但其他语言使用超时的概念。当API要求截止时间时,您提供了请求不应超过的时间点。超时是请求可以花费的最长时间。为了简单起见,本文档中将仅使用截止时间一词。

Deadlines on the Client 客户端的截止时间

By default, gRPC does not set a deadline which means it is possible for a client to end up waiting for a response effectively forever. To avoid this you should always explicitly set a realistic deadline in your clients. To determine the appropriate deadline you would ideally start with an educated guess based on what you know about your system (network latency, server processing time, etc.), validated by some load testing.

默认情况下,gRPC不设置截止时间,这意味着客户端有可能无限期地等待响应。为了避免这种情况,您应该始终在客户端显式设置一个合理的截止时间。为确定适当的截止时间,您应该根据对系统的了解(网络延迟、服务器处理时间等)进行有根据的猜测,并通过一些负载测试进行验证。

If a server has gone past the deadline when processing a request, the client will give up and fail the RPC with the DEADLINE_EXCEEDED status.

如果服务器在处理请求时超过了截止时间,客户端将放弃并以“DEADLINE_EXCEEDED”状态失败该RPC。
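The relationship between a timeout and a deadline, and a client giving up once the deadline passes, can be sketched with `std::chrono`. This is a toy model: `AwaitResponse` polls a caller-supplied predicate instead of making a real call, and `Code` is a hypothetical two-value subset of gRPC status codes. In gRPC C++ itself, the deadline is set on the `grpc::ClientContext` with `set_deadline`.

```cpp
#include <cassert>
#include <chrono>
#include <thread>

using Clock = std::chrono::steady_clock;

// Hypothetical two-value subset of gRPC status codes.
enum class Code { kOk, kDeadlineExceeded };

// Timeout (a duration) -> deadline (a point in time).
Clock::time_point DeadlineAfter(std::chrono::milliseconds timeout) {
  return Clock::now() + timeout;
}

// Deadline -> remaining timeout, clamped to zero once the deadline has passed.
std::chrono::milliseconds Remaining(Clock::time_point deadline) {
  const auto left = std::chrono::duration_cast<std::chrono::milliseconds>(
      deadline - Clock::now());
  return left.count() > 0 ? left : std::chrono::milliseconds(0);
}

// Toy client wait: poll `response_arrived` until it returns true, or give up
// with DEADLINE_EXCEEDED once the deadline has passed.
template <typename Predicate>
Code AwaitResponse(Predicate response_arrived, Clock::time_point deadline) {
  while (!response_arrived()) {
    if (Clock::now() >= deadline) return Code::kDeadlineExceeded;
    std::this_thread::sleep_for(std::chrono::milliseconds(1));
  }
  return Code::kOk;
}
```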

Deadlines on the Server 服务器的截止时间

A server might receive requests from a client with an unrealistically short deadline that would never give the server enough time to respond in time. This would result in the server wasting valuable resources and, in the worst case, could crash the server. A gRPC server deals with this situation by automatically cancelling a call (CANCELLED status) once a deadline set by the client has passed.

服务器可能会接收到具有不切实际短截止时间的客户端请求,这将不给服务器足够的时间来及时响应。这将导致服务器浪费宝贵的资源,并且在最坏的情况下可能导致服务器崩溃。gRPC服务器通过在客户端设置的截止时间过去后自动取消调用(“CANCELLED”状态)来处理这种情况。

Please note that the server application is responsible for stopping any activity it has spawned to service the request. If your application is running a long-running process you should periodically check if the request that initiated it has been cancelled and if so, stop the processing.

请注意,服务器应用程序负责停止为服务请求而产生的任何活动。如果您的应用程序运行了一个长时间运行的进程,您应该定期检查是否已取消发起该进程的请求,如果是,则停止处理。
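A long-running handler can honor cancellation by doing its work in chunks and checking a cancellation signal between them. In gRPC C++ that check would be `ServerContext::IsCancelled()`; in the sketch below it is modeled as a hypothetical `std::atomic<bool>` so the example runs without gRPC, and `ProcessInChunks` is an illustrative name.

```cpp
#include <atomic>
#include <cassert>
#include <cstddef>
#include <functional>

// Runs up to `total_chunks` units of work, checking the cancellation flag
// before each one so a cancelled RPC stops consuming server resources.
// Returns the number of chunks actually completed.
std::size_t ProcessInChunks(std::size_t total_chunks,
                            const std::atomic<bool>& cancelled,
                            const std::function<void(std::size_t)>& do_chunk) {
  std::size_t done = 0;
  for (; done < total_chunks; ++done) {
    if (cancelled.load()) break;  // deadline passed or client cancelled
    do_chunk(done);
  }
  return done;
}
```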

Deadline Propagation 截止时间传播

Your server might need to call another server to produce a response. In these cases where your server also acts as a client you would want to honor the deadline set by the original client. Automatically propagating the deadline from an incoming request to an outgoing one is supported by some gRPC implementations. In some languages this behavior needs to be explicitly enabled (e.g. C++) and in others it is enabled by default (e.g. Java and Go). Using this capability lets you avoid the error-prone approach of manually including the deadline for each outgoing RPC.

您的服务器可能需要调用另一个服务器来生成响应。在这些情况下,当您的服务器充当客户端时,您希望遵守原始客户端设置的截止时间。一些gRPC实现支持自动将来自传入请求的截止时间传播到传出请求。在某些语言中,需要显式启用此行为(例如C++),而在其他语言中,它默认启用(例如Java和Go)。使用此功能可以避免为每个传出的RPC手动设置截止时间这种容易出错的做法。

Since a deadline is a set point in time, propagating it as-is to a server can be problematic as the clocks on the two servers might not be synchronized. To address this, gRPC converts the deadline to a timeout from which the time already elapsed has been deducted. This shields your system from any clock skew issues.

由于截止时间是一个固定的时间点,直接将其传播给另一个服务器可能会有问题,因为两个服务器上的时钟可能不同步。为解决这个问题,gRPC会将截止时间转换为超时时间,并从中扣除已经过去的时间。这样可以避免系统受到任何时钟偏差问题的影响。

Language Support 语言支持

Additional Resources 其他资源

6 - Error Handling

Error handling 错误处理

https://grpc.io/docs/guides/error/

How gRPC deals with errors, and gRPC error codes.

介绍了gRPC如何处理错误以及gRPC的错误代码。

Standard error model 标准错误模型

As you’ll have seen in our concepts document and examples, when a gRPC call completes successfully the server returns an OK status to the client (depending on the language the OK status may or may not be directly used in your code). But what happens if the call isn’t successful?

正如您在我们的概念文档和示例中所看到的,当gRPC调用成功完成时,服务器将向客户端返回一个OK状态(根据语言,OK状态可能会或可能不会直接在您的代码中使用)。但是如果调用不成功会发生什么呢?

If an error occurs, gRPC returns one of its error status codes instead, with an optional string error message that provides further details about what happened. Error information is available to gRPC clients in all supported languages.

如果发生错误,gRPC会返回其中一个错误状态码,并可选地提供一个字符串错误消息,该消息提供了更多关于发生的情况的详细信息。所有支持的语言的gRPC客户端都可以获取错误信息。
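A gRPC status is a code plus an optional message. The sketch below lists the canonical status codes with their numeric values and shows a hypothetical client-side handler that branches on them; `Status` and `Describe` are illustrative stand-ins, not the `grpc::Status` class.

```cpp
#include <cassert>
#include <string>

// Canonical gRPC status codes and their numeric values.
enum class StatusCode : int {
  OK = 0, CANCELLED = 1, UNKNOWN = 2, INVALID_ARGUMENT = 3,
  DEADLINE_EXCEEDED = 4, NOT_FOUND = 5, ALREADY_EXISTS = 6,
  PERMISSION_DENIED = 7, RESOURCE_EXHAUSTED = 8, FAILED_PRECONDITION = 9,
  ABORTED = 10, OUT_OF_RANGE = 11, UNIMPLEMENTED = 12, INTERNAL = 13,
  UNAVAILABLE = 14, DATA_LOSS = 15, UNAUTHENTICATED = 16,
};

// Minimal status object: a code plus an optional human-readable message.
struct Status {
  StatusCode code = StatusCode::OK;
  std::string message;
  bool ok() const { return code == StatusCode::OK; }
};

// Hypothetical client-side handling; the branches are only illustrative.
std::string Describe(const Status& s) {
  switch (s.code) {
    case StatusCode::OK:
      return "success";
    case StatusCode::DEADLINE_EXCEEDED:
      return "timed out: " + s.message;
    case StatusCode::UNAVAILABLE:
      return "server unavailable: " + s.message;
    default:
      return "rpc failed (" + std::to_string(static_cast<int>(s.code)) + "): " + s.message;
  }
}
```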

Richer error model 更丰富的错误模型

The error model described above is the official gRPC error model, is supported by all gRPC client/server libraries, and is independent of the gRPC data format (whether protocol buffers or something else). You may have noticed that it’s quite limited and doesn’t include the ability to communicate error details.

上述的错误模型是官方的gRPC错误模型,由所有gRPC客户端/服务器库支持,并且与gRPC的数据格式(无论是协议缓冲区还是其他格式)无关。您可能已经注意到它相当有限,并且没有包含传递错误详细信息的能力。

If you’re using protocol buffers as your data format, however, you may wish to consider using the richer error model developed and used by Google as described here. This model enables servers to return and clients to consume additional error details expressed as one or more protobuf messages. It further specifies a standard set of error message types to cover the most common needs (such as invalid parameters, quota violations, and stack traces). The protobuf binary encoding of this extra error information is provided as trailing metadata in the response.

然而,如果您正在使用协议缓冲区作为数据格式,您可能希望考虑使用由Google开发和使用的更丰富的错误模型,详见这里。该模型使服务器能够返回、客户端能够使用以一个或多个protobuf消息表示的额外错误详细信息。它还指定了一组标准的错误消息类型,以涵盖最常见的需求(例如无效参数、配额超限和堆栈跟踪)。这些额外错误信息的protobuf二进制编码作为响应中的尾部元数据提供。
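As a sketch of the transport detail: metadata keys ending in `-bin` carry binary values that are base64-encoded when sent as HTTP/2 headers, and the richer error model places a serialized `google.rpc.Status` message in the `grpc-status-details-bin` trailer alongside `grpc-status` and `grpc-message`. `BuildTrailers` is a hypothetical helper: it takes the already-serialized protobuf bytes, omits the percent-encoding that `grpc-message` requires for some characters, and emits padded base64 (check the gRPC HTTP/2 protocol description for the exact padding rules).

```cpp
#include <cassert>
#include <cstddef>
#include <map>
#include <string>

// Standard base64 (RFC 4648 alphabet, with '=' padding).
std::string Base64Encode(const std::string& in) {
  static const char kAlphabet[] =
      "ABCDEFGHIJKLMNOPQRSTUVWXYZabcdefghijklmnopqrstuvwxyz0123456789+/";
  auto byte = [&in](std::size_t k) {
    return static_cast<unsigned>(static_cast<unsigned char>(in[k]));
  };
  std::string out;
  std::size_t i = 0;
  for (; i + 3 <= in.size(); i += 3) {  // full 3-byte groups -> 4 chars
    const unsigned v = (byte(i) << 16) | (byte(i + 1) << 8) | byte(i + 2);
    out += kAlphabet[(v >> 18) & 63];
    out += kAlphabet[(v >> 12) & 63];
    out += kAlphabet[(v >> 6) & 63];
    out += kAlphabet[v & 63];
  }
  const std::size_t rest = in.size() - i;  // 0, 1 or 2 trailing bytes
  if (rest > 0) {
    unsigned v = byte(i) << 16;
    if (rest == 2) v |= byte(i + 1) << 8;
    out += kAlphabet[(v >> 18) & 63];
    out += kAlphabet[(v >> 12) & 63];
    out += rest == 2 ? kAlphabet[(v >> 6) & 63] : '=';
    out += '=';
  }
  return out;
}

// Hypothetical trailer builder for a failed RPC.
std::map<std::string, std::string> BuildTrailers(int status_code,
                                                 const std::string& message,
                                                 const std::string& serialized_status) {
  std::map<std::string, std::string> trailers;
  trailers["grpc-status"] = std::to_string(status_code);
  trailers["grpc-message"] = message;  // percent-encoding omitted here
  if (!serialized_status.empty()) {
    // Keys ending in "-bin" carry base64-encoded binary values.
    trailers["grpc-status-details-bin"] = Base64Encode(serialized_status);
  }
  return trailers;
}
```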

This richer error model is already supported in the C++, Go, Java, Python, and Ruby libraries, and at least the grpc-web and Node.js libraries have open issues requesting it. Other language libraries may add support in the future if there’s demand, so check their github repos if interested. Note however that the grpc-core library written in C will not likely ever support it since it is purposely data format agnostic.

这种更丰富的错误模型已经在C++、Go、Java、Python和Ruby库中得到支持,至少grpc-web和Node.js库存在请求支持的问题。如果有需求,其他语言库可能在将来添加支持,所以如果感兴趣的话可以查看它们的GitHub存储库。但请注意,以C语言编写的grpc-core库很可能永远不会支持它,因为它有意地与数据格式无关。

You could use a similar approach (put error details in trailing response metadata) if you’re not using protocol buffers, but you’d likely need to find or develop library support for accessing this data in order to make practical use of it in your APIs.

如果您不使用协议缓冲区,您可以采用类似的方法(将错误详细信息放在尾部的响应元数据中),但您可能需要找到或开发库来访问这些数据,以便在API中实际使用它。

There are important considerations to be aware of when deciding whether to use such an extended error model, however, including:

然而,在决定是否使用此扩展错误模型时,有一些重要的注意事项需要注意,包括:

- Library implementations of the extended error model may not be consistent across languages in terms of requirements for and expectations of the error details payload

- Existing proxies, loggers, and other standard HTTP request processors don’t have visibility into the error details and thus wouldn’t be able to leverage them for monitoring or other purposes

- Additional error detail in the trailers interferes with head-of-line blocking, and will decrease HTTP/2 header compression efficiency due to more frequent cache misses

- Larger error detail payloads may run into protocol limits (like max headers size), effectively losing the original error

- 扩展错误模型的库实现在错误详细信息的要求和期望方面可能在不同的语言之间不一致

- 现有的代理、日志记录器和其他标准HTTP请求处理器无法获取错误详细信息,因此无法利用它们进行监控或其他目的

- 尾部中的附加错误详细信息会干扰队头阻塞,并且由于更频繁的缓存未命中,会降低HTTP/2首部压缩的效率

- 较大的错误详细信息负载可能会超过协议限制(如最大头大小),从而丢失原始错误信息

Error status codes 错误状态码

Errors are raised by gRPC under various circumstances, from network failures to unauthenticated connections, each of which is associated with a particular status code. The following error status codes are supported in all gRPC languages.

gRPC在各种情况下引发错误,从网络故障到未经身份验证的连接,每种情况都与特定的状态码相关联。以下错误状态码在所有gRPC语言中都受支持。

General errors 通用错误

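| Case | Status code |
|---|---|
| Client application cancelled the request | GRPC_STATUS_CANCELLED |
| Deadline expired before server returned status | GRPC_STATUS_DEADLINE_EXCEEDED |
| Method not found on server | GRPC_STATUS_UNIMPLEMENTED |
| Server shutting down | GRPC_STATUS_UNAVAILABLE |
| Server threw an exception (or did something other than returning a status code to terminate the RPC) | GRPC_STATUS_UNKNOWN |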

Network failures 网络故障

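| Case | Status code |
|---|---|
| No data transmitted before deadline expires. Also applies to cases where some data is transmitted and no other failures are detected before the deadline expires | GRPC_STATUS_DEADLINE_EXCEEDED |
| Some data transmitted (for example, request metadata written to TCP connection) before the connection breaks | GRPC_STATUS_UNAVAILABLE |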

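Protocol errors 协议错误

| Case | Status code |
|---|---|
| Could not decompress, but compression algorithm supported | GRPC_STATUS_INTERNAL |
| Compression mechanism used by client not supported by the server | GRPC_STATUS_UNIMPLEMENTED |
| Flow-control resource limits reached | GRPC_STATUS_RESOURCE_EXHAUSTED |
| Flow-control protocol violation | GRPC_STATUS_INTERNAL |
| Error parsing returned status | GRPC_STATUS_UNKNOWN |
| Unauthenticated: credentials failed to get metadata | GRPC_STATUS_UNAUTHENTICATED |
| Invalid host set in authority metadata | GRPC_STATUS_UNAUTHENTICATED |
| Error parsing response protocol buffer | GRPC_STATUS_INTERNAL |
| Error parsing request protocol buffer | GRPC_STATUS_INTERNAL |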

Sample code 示例代码

For sample code illustrating how to handle various gRPC errors, see the grpc-errors repo.

有关如何处理各种gRPC错误的示例代码,请参阅grpc-errors存储库。

7 - Flow Control

Flow Control 流量控制

https://grpc.io/docs/guides/flow-control/

Explains what flow control is and how you can manually control it.

解释了什么是流量控制,以及如何手动控制流量。

Overview 概述

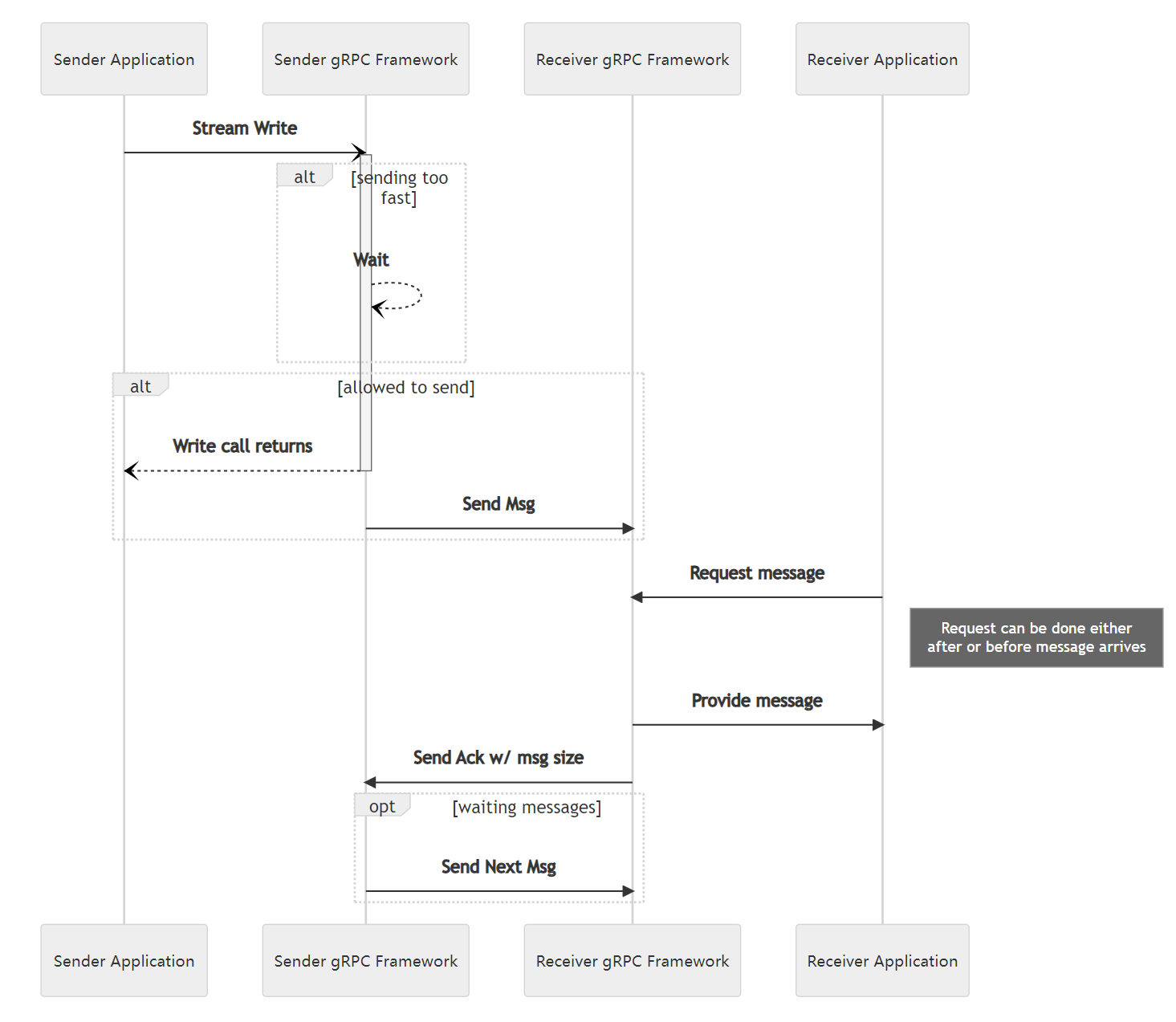

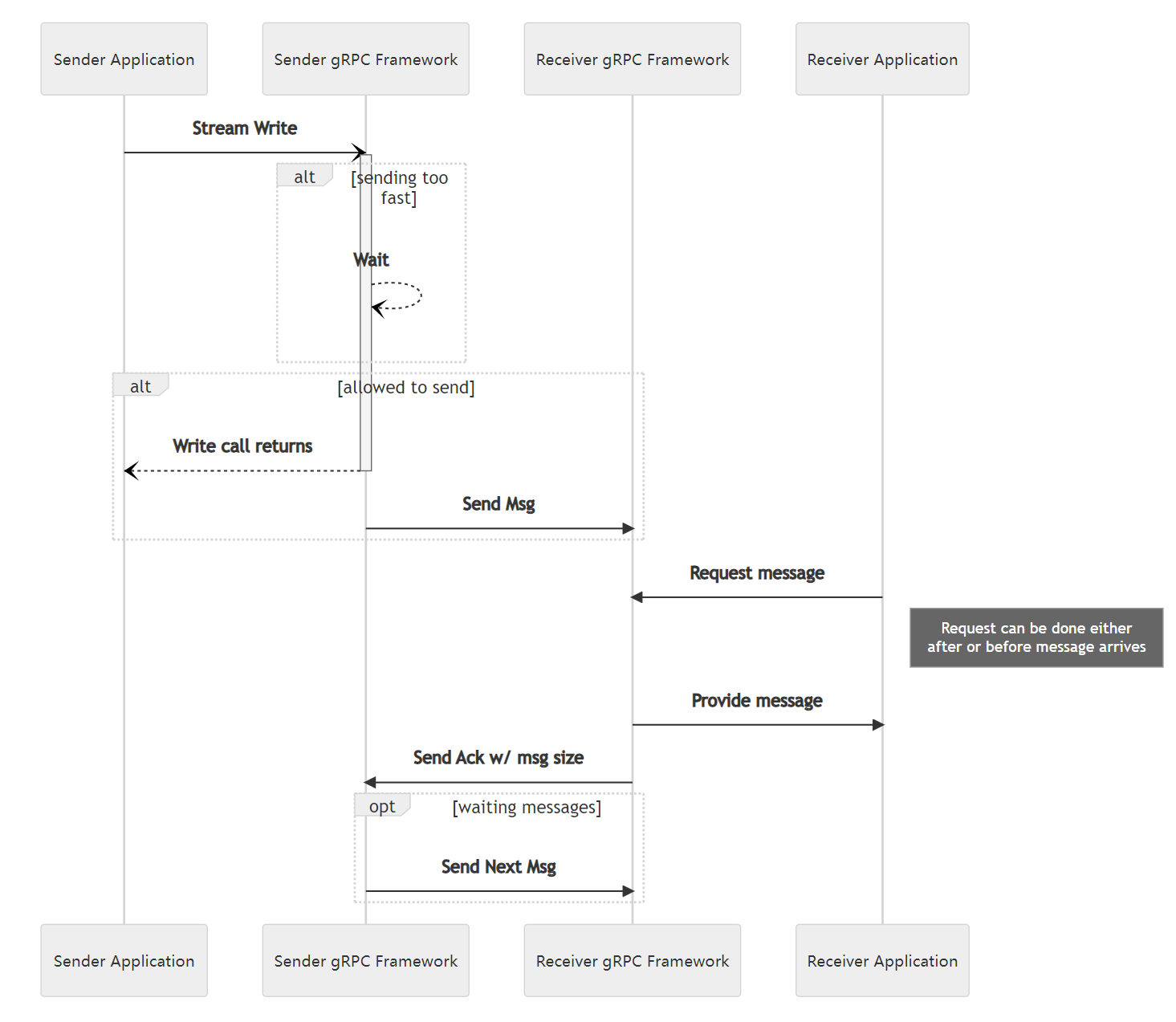

Flow control is a mechanism to ensure that a receiver of messages does not get overwhelmed by a fast sender. Flow control prevents data loss, improves performance and increases reliability. It applies to streaming RPCs and is not relevant for unary RPCs. By default, gRPC handles the interactions with flow control for you, though some languages allow you to override the default behavior and take explicit control.

流量控制是一种机制,确保消息的接收方不会被快速发送方压垮。流量控制可以防止数据丢失,提高性能和可靠性。它适用于流式RPC,并且对于一元RPC来说不相关。默认情况下,gRPC会为您处理流量控制的交互,但某些语言允许您覆盖默认行为并显式控制。

gRPC utilizes the underlying transport to detect when it is safe to send more data. As data is read on the receiving side, an acknowledgement is returned to the sender letting it know that the receiver has more capacity.

gRPC利用底层传输来检测何时可以安全地发送更多数据。当接收端读取数据时,会向发送方返回确认,告知发送方接收方还有更多的容量。

As needed, the gRPC framework will wait before returning from a write call. In gRPC, when a value is written to a stream, that does not mean that it has gone out over the network. Rather, it means that the value has been passed to the framework, which will now take care of the nitty-gritty details of buffering it and sending it to the OS on its way over the network.

根据需要,gRPC框架将在写入调用返回之前等待。在gRPC中,当将值写入流时,并不意味着它已经通过网络发送出去。相反,它已经传递给框架,框架将负责处理细节,对其进行缓冲并将其发送到操作系统以便通过网络发送。
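The window-based mechanism above can be modeled in a few lines: the sender may only have a limited number of unacknowledged bytes outstanding, and each read on the receiving side returns credit (in HTTP/2 this is a WINDOW_UPDATE frame). This is a single-stream toy with a hypothetical `FlowControlledStream` class; real HTTP/2 tracks both per-stream and per-connection windows, and a blocking write would wait at the point where `TryWrite` returns false.

```cpp
#include <cassert>
#include <cstddef>
#include <deque>
#include <string>
#include <utility>

class FlowControlledStream {
 public:
  explicit FlowControlledStream(std::size_t initial_window)
      : window_(initial_window) {}

  // Sender side: succeeds only if the receiver has granted enough window.
  bool TryWrite(const std::string& msg) {
    if (msg.size() > window_) return false;  // must wait for more credit
    window_ -= msg.size();
    in_flight_.push_back(msg);
    return true;
  }

  // Receiver side: the application reads one message, and its bytes are
  // credited back to the sender (like an HTTP/2 WINDOW_UPDATE).
  std::string Read() {
    assert(!in_flight_.empty());
    std::string msg = std::move(in_flight_.front());
    in_flight_.pop_front();
    window_ += msg.size();
    return msg;
  }

  std::size_t window() const { return window_; }

 private:
  std::size_t window_;
  std::deque<std::string> in_flight_;
};
```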

Note 注意

The flow is the same for writing from a Server to a Client as when a Client writes to a Server.

从服务器向客户端写入的流程与客户端向服务器写入的流程相同。

Warning 警告

There is the potential for a deadlock if both the client and server are doing synchronous reads or using manual flow control and both try to do a lot of writing without doing any reads.

如果客户端和服务器都在进行同步读取或使用手动流量控制,并且都试图在不进行任何读取的情况下进行大量写入,可能会导致死锁。

Language Support 语言支持

8 - Keepalive

Keepalive 保持连接

https://grpc.io/docs/guides/keepalive/

How to use HTTP/2 PING-based keepalives in gRPC.

如何在 gRPC 中使用基于 HTTP/2 PING 的保持连接。

Overview 概述

HTTP/2 PING-based keepalives are a way to keep an HTTP/2 connection alive even when there is no data being transferred. This is done by periodically sending a PING frame to the other end of the connection. HTTP/2 keepalives can improve performance and reliability of HTTP/2 connections, but it is important to configure the keepalive interval carefully.

基于 HTTP/2 PING 的保持连接是一种在没有数据传输时保持 HTTP/2 连接活跃的方式。通过定期向连接的另一端发送 PING 帧 来实现。HTTP/2 保持连接可以提高 HTTP/2 连接的性能和可靠性,但需要仔细配置保持连接间隔。

Note 注意

There is a related but separate concern called Health Checking. Health checking allows a server to signal whether a service is healthy, while keepalive is only about the connection.

还有一个相关但独立的问题,称为健康检查。健康检查允许服务器表示一个服务是否健康,而保持连接只涉及连接。

Background 背景

TCP keepalive is a well-known method of maintaining connections and detecting broken connections. When TCP keepalive is enabled, either side of the connection can send redundant packets. Once ACKed by the other side, the connection will be considered as good. If no ACK is received after repeated attempts, the connection is deemed broken.

TCP keepalive 是一种常用的维护连接和检测断开连接的方法。启用 TCP keepalive 后,连接的任一方都可以发送冗余数据包。一旦得到另一方的确认(ACK),连接将被视为正常。如果经过多次尝试仍未收到确认,连接将被视为断开。

Unlike TCP keepalive, gRPC uses HTTP/2 which provides a mandatory PING frame which can be used to estimate round-trip time, bandwidth-delay product, or test the connection. The interval and retry in TCP keepalive don’t quite apply to PING because the transport is reliable, so they’re replaced with timeout (equivalent to interval * retry) in gRPC PING-based keepalive implementation.

与 TCP keepalive 不同,gRPC 使用的是 HTTP/2 协议,该协议提供了一个强制性的 PING 帧,可以用于估算往返时间、带宽延迟乘积或测试连接。由于传输是可靠的,TCP keepalive 中的间隔和重试并不适用于 PING,因此在 gRPC 的基于 PING 的保持连接实现中,使用超时(等效于间隔 * 重试次数)来取代。
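The PING-based scheme described above boils down to two timers. The toy tracker below (a hypothetical class driven by a caller-supplied millisecond clock) sends a PING after KEEPALIVE_TIME without activity and closes the connection if no acknowledgment arrives within KEEPALIVE_TIMEOUT. It assumes that any frame received from the peer counts as activity, which is a simplification of what real implementations do.

```cpp
#include <cassert>
#include <cstdint>

class KeepaliveTracker {
 public:
  enum class Action { kNone, kSendPing, kCloseConnection };

  KeepaliveTracker(std::int64_t keepalive_time_ms, std::int64_t keepalive_timeout_ms)
      : time_ms_(keepalive_time_ms), timeout_ms_(keepalive_timeout_ms) {}

  // Any frame from the peer (including a PING ACK) proves the link is alive.
  void OnFrameReceived(std::int64_t now_ms) {
    last_activity_ms_ = now_ms;
    ping_outstanding_ = false;
  }

  // Called periodically; tells the caller what to do at time now_ms.
  Action Tick(std::int64_t now_ms) {
    if (ping_outstanding_) {
      // Waiting for the ACK: give up once KEEPALIVE_TIMEOUT has elapsed.
      return now_ms - ping_sent_ms_ >= timeout_ms_ ? Action::kCloseConnection
                                                   : Action::kNone;
    }
    if (now_ms - last_activity_ms_ >= time_ms_) {
      ping_outstanding_ = true;
      ping_sent_ms_ = now_ms;
      return Action::kSendPing;
    }
    return Action::kNone;
  }

 private:
  std::int64_t time_ms_;
  std::int64_t timeout_ms_;
  std::int64_t last_activity_ms_ = 0;
  std::int64_t ping_sent_ms_ = 0;
  bool ping_outstanding_ = false;
};
```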

Note 注意

It’s not required for service owners to support keepalive. Client authors must coordinate with service owners for whether a particular client-side setting is acceptable. Service owners decide what they are willing to support, including whether they are willing to receive keepalives at all (If the service does not support keepalive, the first few keepalive pings will be ignored, and the server will eventually send a GOAWAY message with debug data equal to the ASCII code for too_many_pings).

服务所有者并非必须支持保持连接。客户端作者必须与服务所有者协商,确定特定的客户端设置是否可接受。服务所有者决定他们愿意支持的内容,包括是否愿意接收保持连接的请求(如果服务不支持保持连接,则前几个保持连接的 PING 将被忽略,服务器最终将发送带有“too_many_pings” ASCII 编码的调试数据的 GOAWAY 消息)。
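Server-side enforcement can be sketched as counting "strikes": a PING that arrives sooner than PERMIT_KEEPALIVE_TIME after the previous one, with no data or headers sent in between, counts against the client, and past a limit the server sends GOAWAY with `too_many_pings` debug data. The class name and the strike limit are assumptions for illustration; implementations choose their own limits.

```cpp
#include <cassert>
#include <cstdint>
#include <string>

class PingPolicer {
 public:
  PingPolicer(std::int64_t permit_keepalive_time_ms, int max_strikes)
      : permit_ms_(permit_keepalive_time_ms), max_strikes_(max_strikes) {}

  // Returns the GOAWAY debug data if the connection should be closed,
  // or an empty string if this PING is tolerated.
  std::string OnPing(std::int64_t now_ms) {
    if (have_last_ping_ && now_ms - last_ping_ms_ < permit_ms_) ++strikes_;
    have_last_ping_ = true;
    last_ping_ms_ = now_ms;
    return strikes_ > max_strikes_ ? "too_many_pings" : "";
  }

  // Sending data or headers resets the bookkeeping, since the limit only
  // applies to successive PINGs without such frames in between.
  void OnDataSent() {
    have_last_ping_ = false;
    strikes_ = 0;
  }

 private:
  std::int64_t permit_ms_;
  int max_strikes_;
  std::int64_t last_ping_ms_ = 0;
  bool have_last_ping_ = false;
  int strikes_ = 0;
};
```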

How configuring keepalive affects a call 配置保持连接如何影响调用

Keepalive is less likely to be triggered for unary RPCs with quick replies. Keepalive is primarily triggered when there is a long-lived RPC, which will fail if the keepalive check fails and the connection is closed.

对于具有快速响应的一元 RPC,不太可能触发保持连接。保持连接主要在存在长时间运行的 RPC 时触发,如果保持连接检查失败并关闭连接,该 RPC 将失败。

For streaming RPCs, if the connection is closed, any in-progress RPCs will fail. If a call is streaming data, the stream will also be closed and any data that has not yet been sent will be lost.

对于流式 RPC,如果连接关闭,任何正在进行中的 RPC 都将失败。如果调用正在流式传输数据,流也将关闭,并且尚未发送的任何数据将丢失。

Warning 警告

To avoid DDoSing the server, take caution when setting the keepalive configurations. It is recommended to avoid enabling keepalive without calls, and for clients to avoid configuring their keepalive time much below one minute.

为了避免对服务器造成类似 DDoS 的压力,在设置保持连接配置时要谨慎。建议避免在没有调用的情况下启用保持连接,并且客户端应避免将保持连接时间配置得远低于一分钟。

Common situations where keepalives can be useful 保持连接可用的常见情况

gRPC HTTP/2 keepalives can be useful in a variety of situations, including but not limited to:

gRPC 的 HTTP/2 保持连接可在多种情况下发挥作用,包括但不限于以下情况:

- When sending data over a long-lived connection which might be considered idle by proxies or load balancers.

- When the network is less reliable (For example, mobile applications).

- When using a connection after a long period of inactivity.

- 在长时间运行的连接上发送数据,可能会被代理或负载均衡器视为空闲连接。

- 在网络不太可靠时(例如移动应用程序)。

- 在长时间不活动后重新使用连接时。

Keepalive configuration specification 保持连接配置规范

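| Option | Availability | Description | Client Default | Server Default |
|---|---|---|---|---|
| KEEPALIVE_TIME | Client and Server | The interval in milliseconds between PING frames. | INT_MAX (Disabled) | 7200000 (2 hours) |
| KEEPALIVE_TIMEOUT | Client and Server | The timeout in milliseconds for a PING frame to be acknowledged. If the sender does not receive an acknowledgment within this time, it will close the connection. | 20000 (20 seconds) | 20000 (20 seconds) |
| KEEPALIVE_WITHOUT_CALLS | Client | Whether the client may send keepalive pings without any outstanding streams. | 0 (false) | N/A |
| PERMIT_KEEPALIVE_WITHOUT_CALLS | Server | Whether the server permits clients to send keepalive pings without any outstanding streams. | N/A | 0 (false) |
| PERMIT_KEEPALIVE_TIME | Server | Minimum allowed time between a server receiving successive PING frames without sending any data/header frame. | N/A | 300000 (5 minutes) |
| MAX_CONNECTION_IDLE | Server | Maximum time that a channel may have no outstanding RPCs, after which the server will close the connection. | N/A | INT_MAX (Infinite) |
| MAX_CONNECTION_AGE | Server | Maximum time that a channel may exist. | N/A | INT_MAX (Infinite) |
| MAX_CONNECTION_AGE_GRACE | Server | Grace period after the channel reaches its max age. | N/A | INT_MAX (Infinite) |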
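Because service owners decide what they accept, it can help to check a client's keepalive settings against the server's policy before deploying. The sketch below mirrors a few options from the table as plain structs (hypothetical types, values in milliseconds, defaults copied from the table) and flags combinations that the rules in this guide suggest would cause trouble, including the one-minute guidance from the warning.

```cpp
#include <cassert>
#include <climits>
#include <cstdint>
#include <string>
#include <vector>

struct ClientKeepalive {
  std::int64_t time_ms = INT_MAX;  // KEEPALIVE_TIME (INT_MAX = disabled)
  std::int64_t timeout_ms = 20000; // KEEPALIVE_TIMEOUT
  bool without_calls = false;      // KEEPALIVE_WITHOUT_CALLS
};

struct ServerKeepalivePolicy {
  std::int64_t permit_time_ms = 300000;  // PERMIT_KEEPALIVE_TIME
  bool permit_without_calls = false;     // PERMIT_KEEPALIVE_WITHOUT_CALLS
};

// Returns human-readable problems with pairing this client and server.
std::vector<std::string> CheckKeepalive(const ClientKeepalive& c,
                                        const ServerKeepalivePolicy& s) {
  std::vector<std::string> problems;
  if (c.time_ms == INT_MAX) return problems;  // keepalive disabled on client
  if (c.time_ms < s.permit_time_ms)
    problems.push_back("client pings more often than the server permits; "
                       "expect GOAWAY with too_many_pings");
  if (c.without_calls && !s.permit_without_calls)
    problems.push_back("client pings without calls, which the server does not permit");
  if (c.time_ms < 60000)
    problems.push_back("keepalive time below one minute is discouraged");
  return problems;
}
```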

Note 注意

Some languages may provide additional options; please refer to the language examples and additional resources for more details.

某些语言可能提供其他选项,请参考语言示例和其他资源以获取更多详细信息。

Language guides and examples 语言指南和示例

Additional Resources 其他资源

9 - Performance Best Practices

Performance Best Practices 性能最佳实践

https://grpc.io/docs/guides/performance/

A user guide of both general and language-specific best practices to improve performance.

本文介绍了通用和特定语言的性能最佳实践,以提高性能。

General 通用

Always re-use stubs and channels when possible.

尽可能复用存根(stub)和通道(channel)。

Use keepalive pings to keep HTTP/2 connections alive during periods of inactivity to allow initial RPCs to be made quickly without a delay (e.g. C++ channel arg GRPC_ARG_KEEPALIVE_TIME_MS).

在不活动期间使用保持连接的 ping,以保持 HTTP/2 连接处于活动状态,以便可以快速进行初始 RPC 调用,而无需延迟(例如 C++ 中的通道参数 GRPC_ARG_KEEPALIVE_TIME_MS)。

Use streaming RPCs when handling a long-lived logical flow of data from the client-to-server, server-to-client, or in both directions. Streams can avoid continuous RPC initiation, which includes connection load balancing at the client-side, starting a new HTTP/2 request at the transport layer, and invoking a user-defined method handler on the server side.

在处理长时间的客户端到服务器、服务器到客户端或双向数据逻辑流时,使用流式 RPC。流可以避免连续的 RPC 启动,其中包括客户端的连接负载均衡,在传输层开始新的 HTTP/2 请求,并在服务器端调用用户定义的方法处理程序。

Streams, however, cannot be load balanced once they have started, and stream failures can be hard to debug. They also might increase performance at a small scale but can reduce scalability due to load balancing and complexity, so they should only be used when they provide substantial performance or simplicity benefit to application logic. Use streams to optimize the application, not gRPC.

然而,一旦流开始,就无法进行负载均衡,并且对于流故障的调试可能会很困难。它们在小规模上可能会提高性能,但由于负载均衡和复杂性,可能会降低可扩展性,因此只有在对应用程序逻辑提供实质性性能或简化方面有益时才应使用。使用流来优化应用程序,而不是 gRPC。

Side note: This does not apply to Python (see Python section for details).

注: 这不适用于 Python(请参考 Python 部分了解详细信息)。

(Special topic) Each gRPC channel uses 0 or more HTTP/2 connections and each connection usually has a limit on the number of concurrent streams. When the number of active RPCs on the connection reaches this limit, additional RPCs are queued in the client and must wait for active RPCs to finish before they are sent. Applications with high load or long-lived streaming RPCs might see performance issues because of this queueing. There are two possible solutions:

(特殊主题) 每个 gRPC 通道使用 0 个或多个 HTTP/2 连接,每个连接通常对并发流的数量有限制。当连接上的活动 RPC 数量达到此限制时,额外的 RPC 将在客户端排队,并在等待活动的 RPC 完成后才会发送。具有高负载或长时间运行的流式 RPC 的应用程序可能会因此排队而出现性能问题。有两种可能的解决方案:

- Create a separate channel for each area of high load in the application.

- 在应用程序中的每个高负载区域创建一个单独的通道。

- Use a pool of gRPC channels to distribute RPCs over multiple connections (channels must have different channel args to prevent re-use so define a use-specific channel arg such as channel number).

- 使用一组 gRPC 通道将 RPC 分布到多个连接上(通道必须具有不同的通道参数,以防止重用,因此定义一个特定于使用的通道参数,如通道编号)。
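The channel-pool workaround can be sketched as a round-robin over a fixed set of channels that differ only in a distinguishing argument. `FakeChannel` and `ChannelPool` are hypothetical stand-ins so the example runs without gRPC; in gRPC C++ the distinguishing value would typically be set with `grpc::ChannelArguments::SetInt` under a key of your choosing.

```cpp
#include <atomic>
#include <cassert>
#include <cstddef>
#include <memory>
#include <string>
#include <vector>

// Hypothetical channel: channel_number plays the role of the use-specific
// channel arg that keeps gRPC from sharing one connection between them.
struct FakeChannel {
  std::string target;
  int channel_number;
};

class ChannelPool {
 public:
  ChannelPool(const std::string& target, std::size_t size) {
    assert(size > 0);
    for (std::size_t i = 0; i < size; ++i) {
      channels_.push_back(std::make_shared<FakeChannel>(
          FakeChannel{target, static_cast<int>(i)}));
    }
  }

  // Hands out channels round-robin so RPCs spread over several connections.
  std::shared_ptr<FakeChannel> Next() {
    return channels_[next_.fetch_add(1) % channels_.size()];
  }

 private:
  std::vector<std::shared_ptr<FakeChannel>> channels_;
  std::atomic<std::size_t> next_{0};
};
```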

Side note: The gRPC team has plans to add a feature to fix these performance issues (see grpc/grpc#21386 for more info), so any solution involving creating multiple channels is a temporary workaround that should eventually not be needed.

注: gRPC 团队计划添加一个功能来解决这些性能问题(有关详细信息,请参阅 grpc/grpc#21386),因此涉及创建多个通道的解决方案是一个临时解决方法,最终可能不再需要。

C++

Do not use Sync API for performance sensitive servers. If performance and/or resource consumption are not concerns, use the Sync API as it is the simplest to implement for low-QPS services.

对于对性能敏感的服务器,不要使用同步 API。如果性能和/或资源消耗不是问题,可以使用同步 API,因为它是实现低 QPS 服务最简单的方式。

Favor callback API over other APIs for most RPCs, given that the application can avoid all blocking operations or blocking operations can be moved to a separate thread. The callback API is easier to use than the completion-queue async API but is currently slower for truly high-QPS workloads.

对于大多数 RPC,优先使用回调 API,前提是应用程序可以避免所有阻塞操作,或者阻塞操作可以移到单独的线程中。回调 API 比完成队列异步 API 更易于使用,但在真正高 QPS 的工作负载中,它目前速度较慢。

If having to use the async completion-queue API, the best scalability trade-off is having numcpu threads. The ideal number of completion queues in relation to the number of threads can change over time (as gRPC C++ evolves), but as of gRPC 1.41 (Sept 2021), using 2 threads per completion queue seems to give the best performance.

如果必须使用异步完成队列 API,则在可扩展性和性能之间进行最佳平衡的方法是使用 numcpu 个线程。关于完成队列数量与线程数之间的理想关系可能会随时间而变化(随着 gRPC C++ 的发展),但截至 gRPC 1.41(2021 年 9 月),每个完成队列使用 2 个线程似乎可以获得最佳性能。

For the async completion-queue API, make sure to register enough server requests for the desired level of concurrency to avoid the server continuously getting stuck in a slow path that results in essentially serial request processing.

对于异步完成队列 API,请确保为所需的并发级别注册足够的服务器请求,以避免服务器持续陷入导致实质上的串行请求处理的慢路径。

(Special topic) Enable write batching in streams if message k + 1 does not rely on responses from message k by passing a WriteOptions argument to Write with buffer_hint set:

(特殊主题) 如果第 k + 1 个消息不依赖于第 k 个消息的响应,则在流中启用写批处理,通过传递带有设置了 buffer_hint 的 WriteOptions 参数来实现:

```cpp
// buffer_hint: this write may be held back and coalesced with the next one.
stream_writer->Write(message, grpc::WriteOptions().set_buffer_hint());
```

(Special topic) grpc::GenericStub can be useful in certain cases when there is high contention / CPU time spent on proto serialization. This class allows the application to directly send raw grpc::ByteBuffer as data rather than serializing from some proto. This can also be helpful if the same data is being sent multiple times, with one explicit proto-to-ByteBuffer serialization followed by multiple ByteBuffer sends.

(特殊主题) 在某些情况下,当存在高竞争或大量 CPU 时间花费在 proto 序列化上时,可以使用 grpc::GenericStub。该类允许应用程序直接发送原始的 grpc::ByteBuffer 作为数据,而不是从某个 proto 进行序列化。如果同样的数据被多次发送,可以先进行一次显式的 proto 到 ByteBuffer 的序列化,然后多次发送该 ByteBuffer,这也会有所帮助。

Java

- Use non-blocking stubs to parallelize RPCs.

- 使用非阻塞存根(stub)以并行处理 RPC。

- Provide a custom executor that limits the number of threads, based on your workload (cached (default), fixed, forkjoin, etc).

- 提供自定义执行器,根据工作负载限制线程数(缓存(默认),固定,forkjoin 等)。

Python

- Streaming RPCs create extra threads for receiving and possibly sending the messages, which makes streaming RPCs much slower than unary RPCs in gRPC Python, unlike the other languages supported by gRPC.

- 流式 RPC 会创建额外的线程来接收和可能发送消息,这使得在 gRPC Python 中流式 RPC 比一元 RPC 慢得多,而其他由 gRPC 支持的语言不会出现这个问题。

- Using asyncio could improve performance.

- 使用 asyncio 可以改善性能。

- Using the future API in the sync stack results in the creation of an extra thread. Avoid the future API if possible.

- 在同步堆栈中使用 future API 会创建额外的线程。尽可能避免使用 future API。

- (Experimental) An experimental single-threaded unary-stream implementation is available via the SingleThreadedUnaryStream channel option, which can save up to 7% latency per message.

- (实验性) 可通过SingleThreadedUnaryStream 通道选项获得实验性的单线程一元流实现,可节省每个消息高达 7% 的延迟。

10 - Wait-for-Ready

Wait-for-Ready 等待就绪

https://grpc.io/docs/guides/wait-for-ready/

Explains how to configure RPCs to wait for the server to be ready before sending the request.

解释了如何配置 RPC,在发送请求之前等待服务器就绪。

Overview 概述

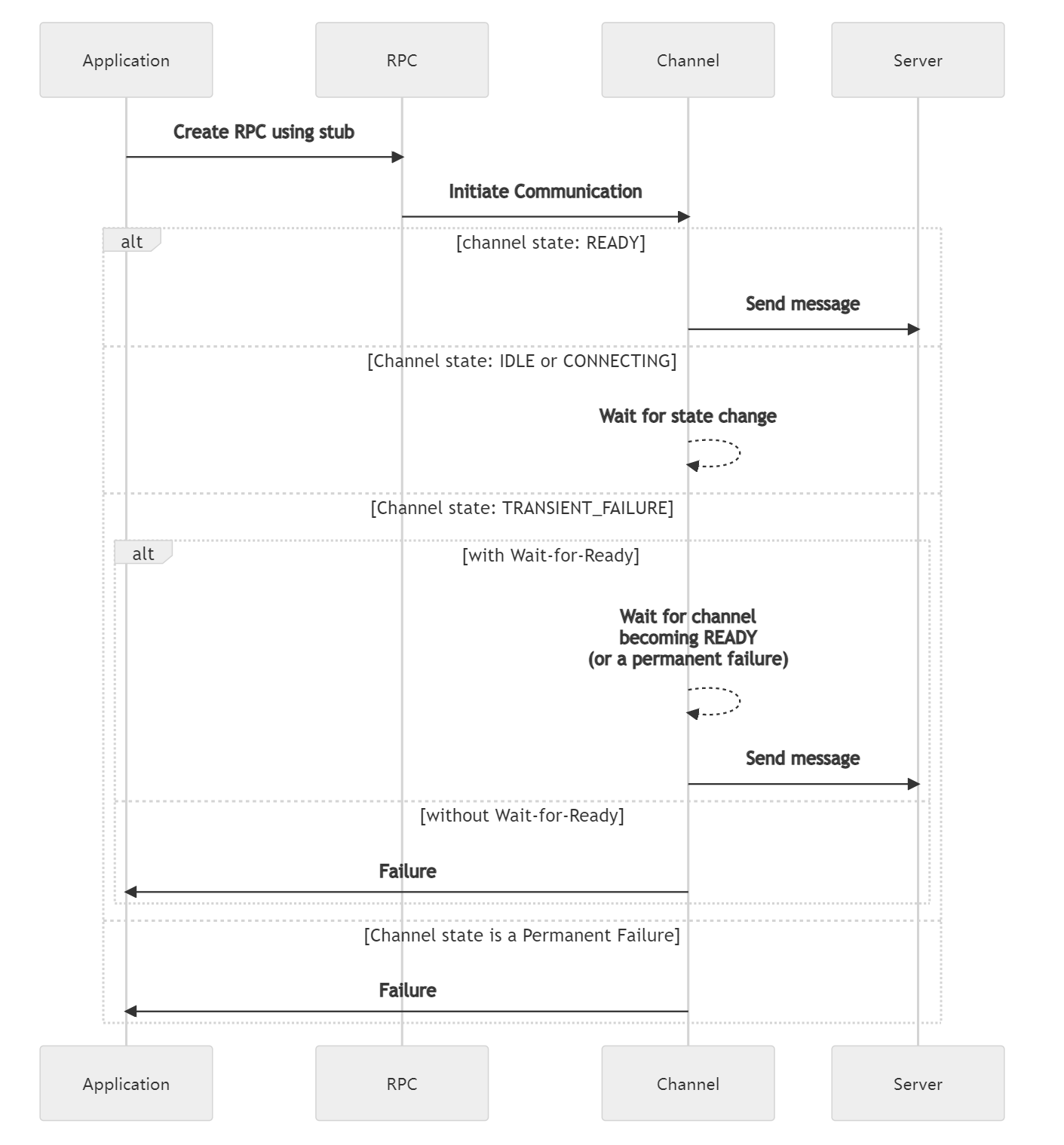

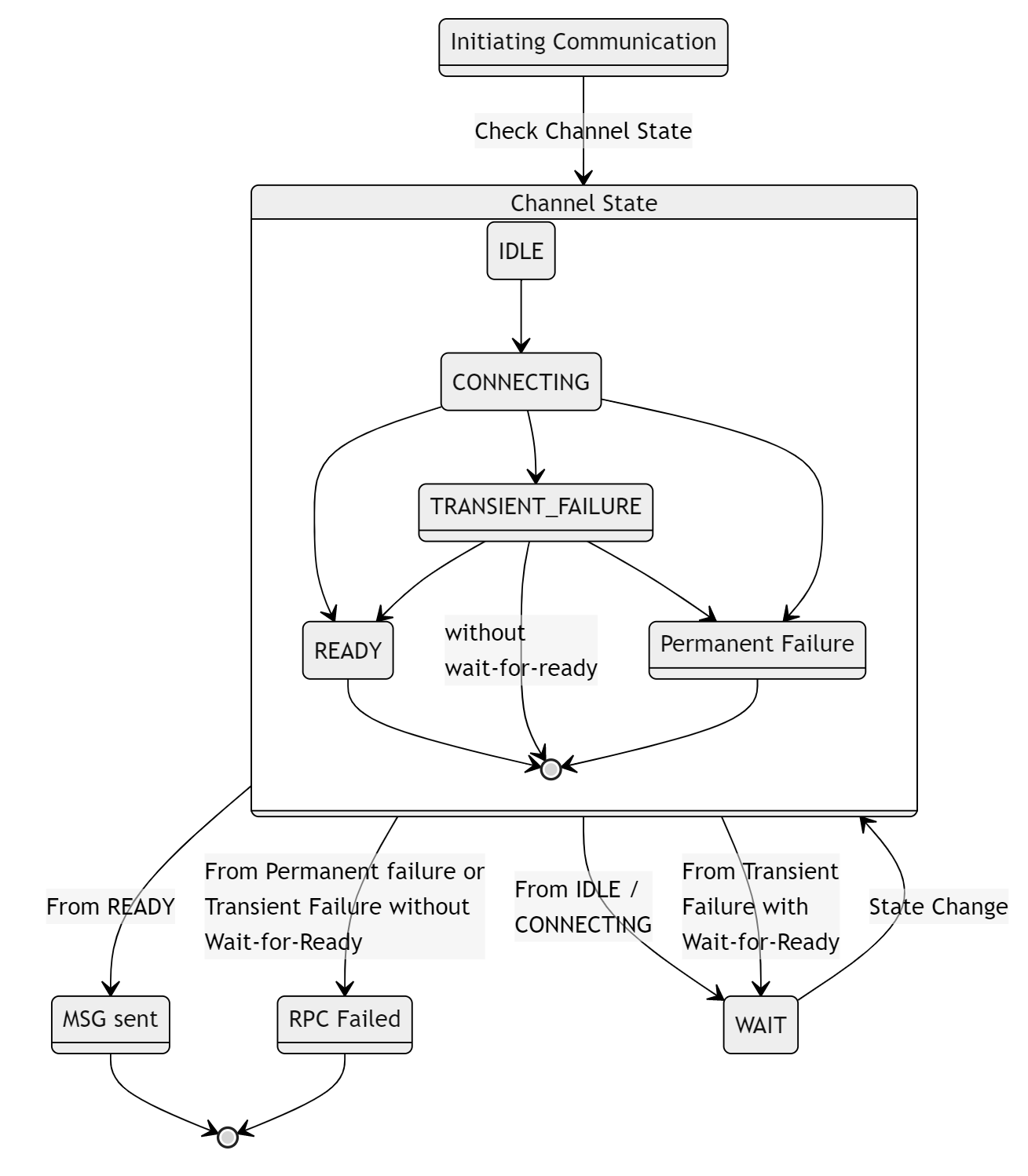

Wait-for-Ready is a feature that can be set on a stub; it causes RPCs to wait for the server to become available before sending the request. This allows for robust batch workflows since transient server problems won’t cause failures. The deadline still applies, so the wait will be interrupted if the deadline is passed.

这是一个可以用于存根(stub)的特性,它会导致 RPC 在发送请求之前等待服务器可用。这样可以实现稳健的批处理工作流,因为临时的服务器问题不会导致失败。仍然适用截止时间(deadline),所以如果超过截止时间,等待将被中断。

If an RPC is created while the channel has failed to connect to the server, without Wait-for-Ready it will immediately return a failure; with Wait-for-Ready it will simply be queued until the connection becomes ready. The default is without Wait-for-Ready.

当通道无法连接到服务器时创建 RPC,如果没有设置等待就绪(Wait-for-Ready),它会立即返回失败;如果设置了等待就绪,它将被简单地排队,直到连接就绪。默认情况下是不使用等待就绪。
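The behavior above can be modeled as a small decision over the channel's connectivity state. The sketch uses the standard gRPC state names, but `ToyChannel` and `Outcome` are hypothetical, and it assumes that RPCs started while the channel is still IDLE or CONNECTING wait for the connection attempt regardless of the flag (check the detailed Wait-for-Ready semantics for the precise rules). In gRPC C++ the flag itself is set per call with `grpc::ClientContext::set_wait_for_ready(true)`.

```cpp
#include <cassert>
#include <string>
#include <vector>

// gRPC connectivity state names; the channel below is only a toy.
enum class ChannelState { kIdle, kConnecting, kReady, kTransientFailure, kShutdown };
enum class Outcome { kSent, kQueued, kFailed };

class ToyChannel {
 public:
  void SetState(ChannelState state) { state_ = state; }

  // Decides what happens to a newly started RPC.
  Outcome StartRpc(const std::string& rpc, bool wait_for_ready) {
    switch (state_) {
      case ChannelState::kReady:
        return Outcome::kSent;
      case ChannelState::kTransientFailure:
        if (!wait_for_ready) return Outcome::kFailed;  // fail fast (UNAVAILABLE)
        queued_.push_back(rpc);  // wait for the connection to recover
        return Outcome::kQueued;
      case ChannelState::kShutdown:
        return Outcome::kFailed;
      case ChannelState::kIdle:
      case ChannelState::kConnecting:
        queued_.push_back(rpc);  // assumed: wait for the connection attempt
        return Outcome::kQueued;
    }
    return Outcome::kFailed;  // unreachable
  }

  // The connection became READY: queued RPCs go out; returns their names.
  std::vector<std::string> BecomeReady() {
    state_ = ChannelState::kReady;
    std::vector<std::string> sent;
    sent.swap(queued_);
    return sent;
  }

 private:
  ChannelState state_ = ChannelState::kIdle;
  std::vector<std::string> queued_;
};
```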

For detailed semantics see this.

有关详细的语义,请参阅这个文档。

How to use Wait-for-Ready 如何使用等待就绪

You can specify for a stub whether or not it should use Wait-for-Ready, which will automatically be passed along when an RPC is created.

您可以为存根指定是否应该使用等待就绪,当创建 RPC 时,它会自动传递。

Note 注意

The RPC can still fail for other reasons besides the server not being ready, so error handling is still necessary.

除了服务器未就绪之外,RPC 仍然可能因其他原因而失败,因此仍然需要进行错误处理。

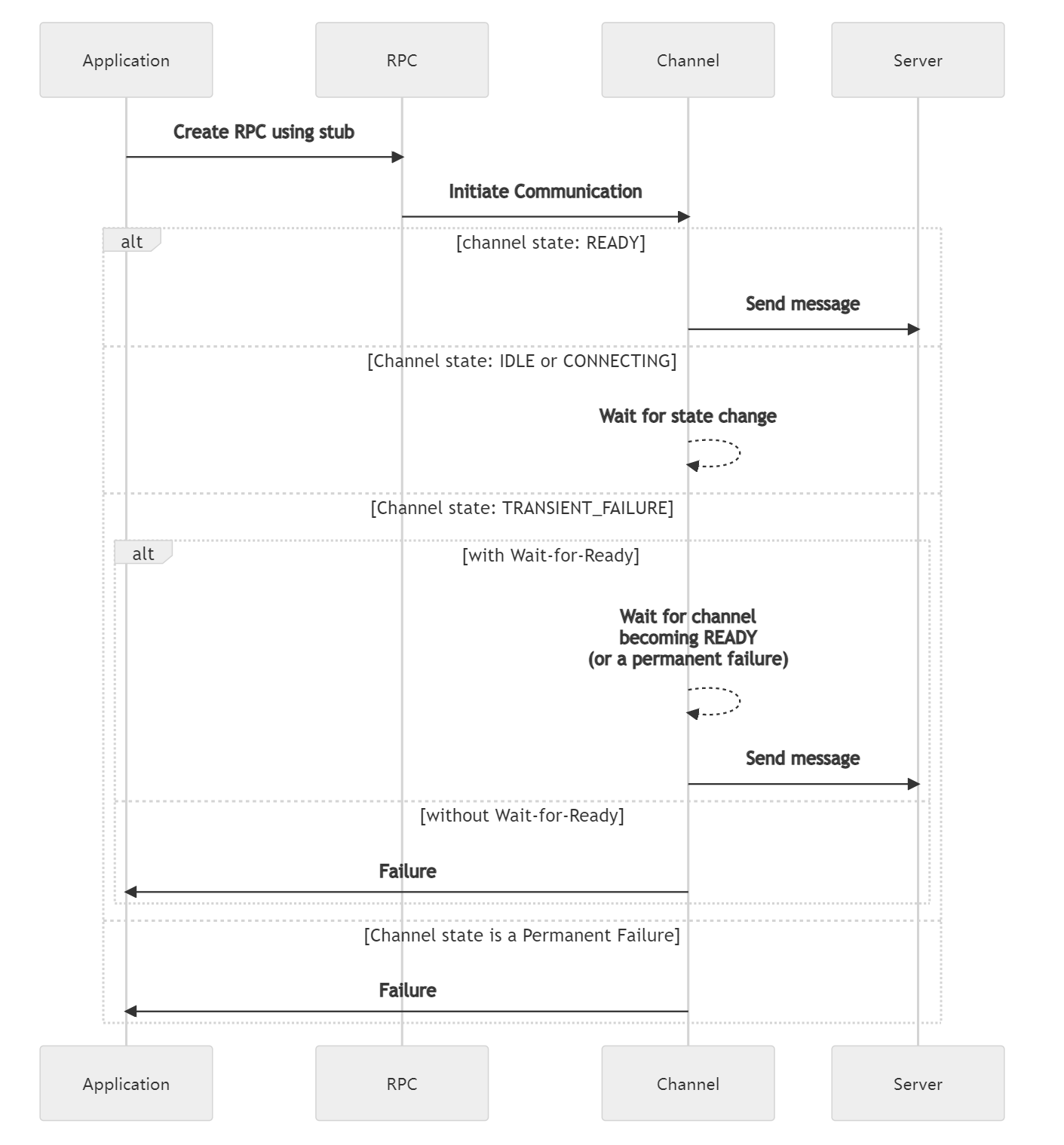

The following shows the sequence of events that occur, when a client sends a message to a server, based upon channel state and whether or not Wait-for-Ready is set.

以下是基于通道状态和是否设置了等待就绪的客户端向服务器发送消息时发生的事件序列。

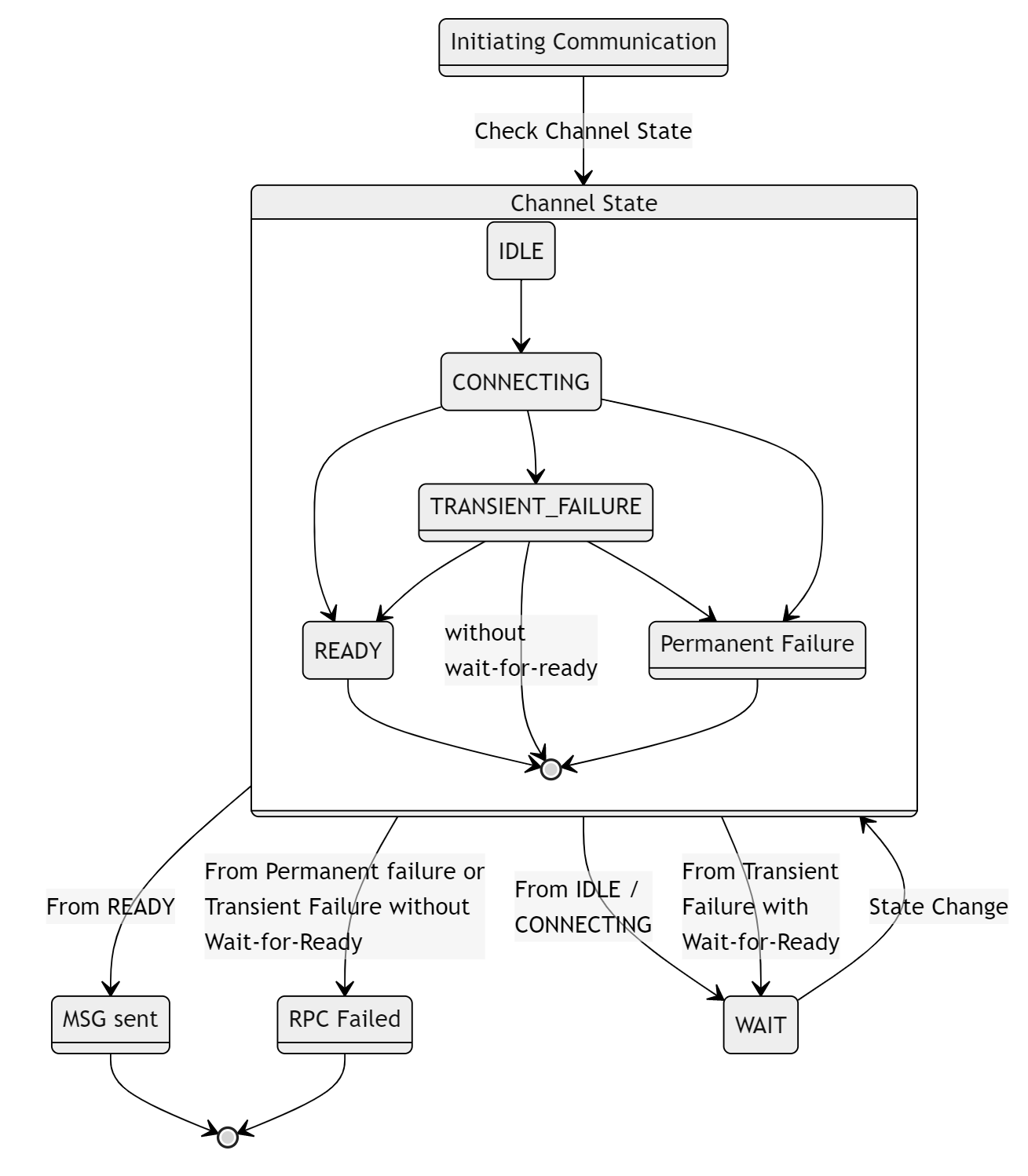

The following is a state-based view.

以下是基于状态的视图

Alternatives 替代方案

- Loop (with exponential backoff) until the RPC stops returning transient failures.

- 循环(使用指数退避)直到 RPC 不再返回临时失败。

- This could be combined, for efficiency, with implementing an onReady handler (for languages that support this).

- 这可以与实现 onReady 处理程序(适用于支持此功能的语言)结合使用以提高效率。

- Accept failures that might have been avoided by waiting because you want to fail fast

- 接受那些本可通过等待而避免的失败,因为您希望快速失败。
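The first alternative can be sketched as a retry loop with exponential backoff and jitter. The defaults here (multiplier 1.6, ±20% jitter) are borrowed from gRPC's connection-backoff parameters as a reasonable starting point; treating only UNAVAILABLE as transient, the `RpcCode` enum, and `CallWithBackoff` itself are assumptions of this sketch.

```cpp
#include <algorithm>
#include <cassert>
#include <chrono>
#include <functional>
#include <random>
#include <thread>

// Hypothetical subset of status codes for the example.
enum class RpcCode { kOk, kUnavailable, kInvalidArgument };

// Assumption: only UNAVAILABLE is worth retrying; pick your own set.
bool IsTransient(RpcCode code) { return code == RpcCode::kUnavailable; }

// Retries `call` with exponential backoff and jitter until it succeeds,
// fails with a non-transient code, or max_attempts is reached.
RpcCode CallWithBackoff(const std::function<RpcCode()>& call, int max_attempts,
                        std::chrono::milliseconds initial_backoff,
                        std::chrono::milliseconds max_backoff,
                        double multiplier = 1.6) {
  assert(max_attempts >= 1);
  std::mt19937 rng{std::random_device{}()};
  std::uniform_real_distribution<double> jitter(0.8, 1.2);  // +/-20%
  double backoff_ms = static_cast<double>(initial_backoff.count());
  RpcCode code = RpcCode::kUnavailable;
  for (int attempt = 1; attempt <= max_attempts; ++attempt) {
    code = call();
    if (!IsTransient(code) || attempt == max_attempts) break;
    std::this_thread::sleep_for(std::chrono::milliseconds(
        static_cast<long long>(backoff_ms * jitter(rng))));
    backoff_ms = std::min(backoff_ms * multiplier,
                          static_cast<double>(max_backoff.count()));
  }
  return code;
}
```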

Language Support 语言支持